Slightly OT, but for instructions like these that pick a range of bits, add to it, and insert the result back into the range: does x86 have dedicated silicon in the ALU to implement this, or is it implemented in microcode? If it’s the latter, how can it be faster than the equivalent unrolled instructions on a RISC ISA?

This is more of a general question about how microcode can be faster than using separate instructions, which is something I have never quite understood. Any CPU engineers that can enlighten me?

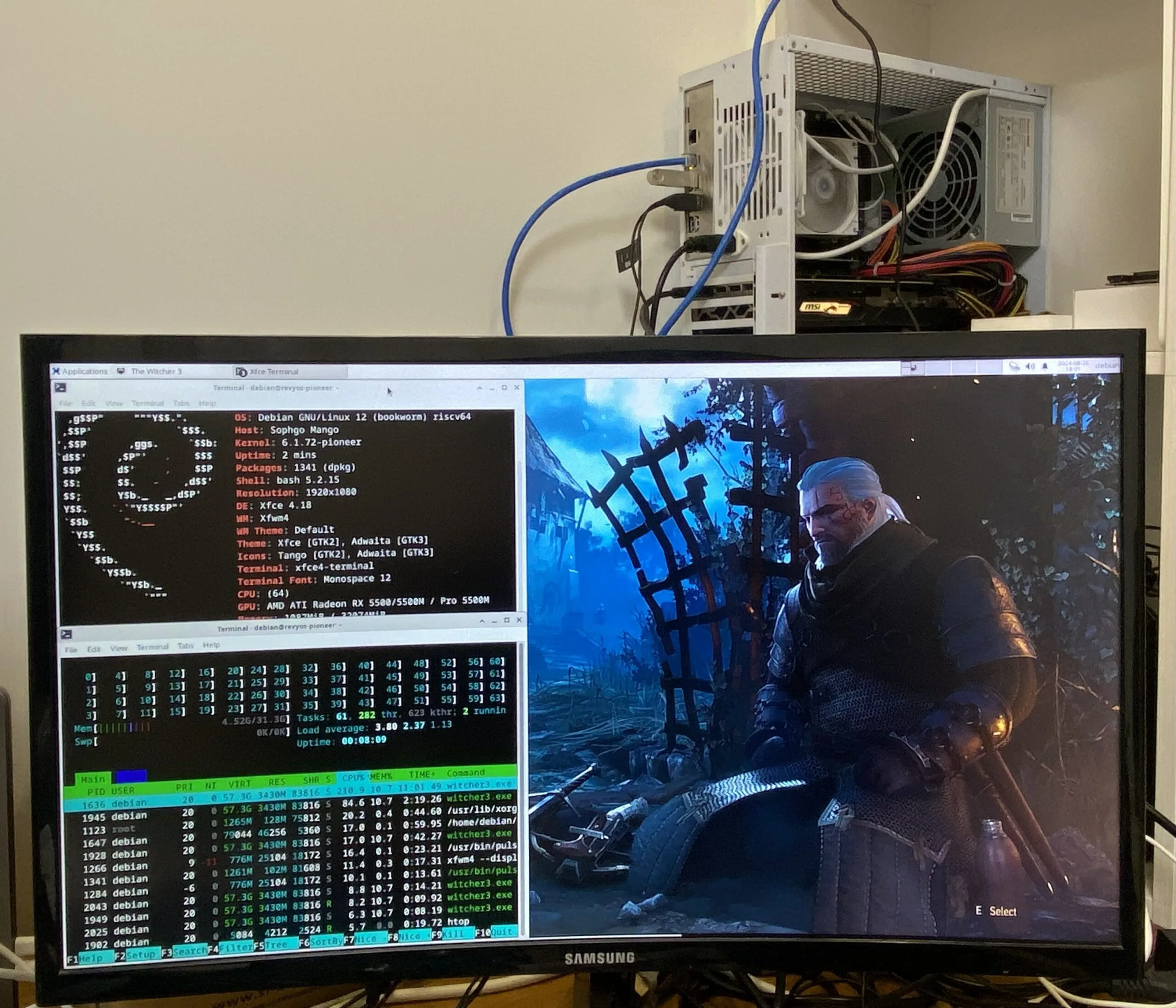

Not an expert, but as I understand it, x86 has microops: each instruction is decomposed into microops by the decoders, with the microcode handling only the more complex instructions. These microops can then be executed several at a time by the multiple execution units (“ALUs”, though not strictly speaking ALUs).

Maybe someone else can correct me or expand a bit.

There appears to be dedicated silicon for e.g. `ADD AH, BL`: see uops.info, which shows it taking 1 uop across multiple microarchitectures (e.g. `1*p0156` being the notation for one uop on any of ports 0/1/5/6, i.e. a theoretical throughput of 4 instrs/cycle; I think the displayed 0.4 is just an artifact of it only testing 3 different destination registers despite there being a dependency on it). The newer Alder Lake actually has lower throughput, but still takes only one uop.
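For anyone wondering what the "unrolled" version of `ADD AH, BL` would look like, here is a rough sketch in C of the pick / add / insert steps the question describes. The function name `add_ah_bl` is made up for illustration, flags are not modeled, and this only shows the semantics; it says nothing about how the hardware actually implements it.

```c
#include <stdint.h>

/* Hypothetical helper: models the register effect of `ADD AH, BL`
   on 64-bit RAX/RBX values as separate steps. Flags are ignored. */
uint64_t add_ah_bl(uint64_t rax, uint64_t rbx) {
    uint64_t ah  = (rax >> 8) & 0xFF;   /* pick bits 8..15 of RAX (AH) */
    uint64_t bl  = rbx & 0xFF;          /* pick bits 0..7 of RBX (BL)  */
    uint64_t sum = (ah + bl) & 0xFF;    /* 8-bit add, carry-out dropped */
    /* insert the result back into bits 8..15, leaving the rest of RAX alone */
    return (rax & ~(uint64_t)0xFF00) | (sum << 8);
}
```

With `rax = 0x1122334455667788` and `rbx = 0xF0`, AH is 0x77, the 8-bit sum wraps to 0x67, and the result is `0x1122334455666788`. On an ISA without partial-register operands, each commented step would be roughly one instruction (fewer if it has bitfield extract/insert instructions).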

This is why ARM computing is really exciting, but it won’t go well for ARM. Once it’s possible to get out from under the x86 monopoly… we’re going right back to the architecture zoo.