So you can use this and take a performance hit, or not use this and take a performance hit because Nvidia doesn’t put enough VRAM in their cards.

Great, soon you’ll be able to play a game in 720p upscaled to 4k with AI, at 60 fps with 144 fps of AI-interpolated frames, with AI-compressed textures. Surely this won’t look like garbage!

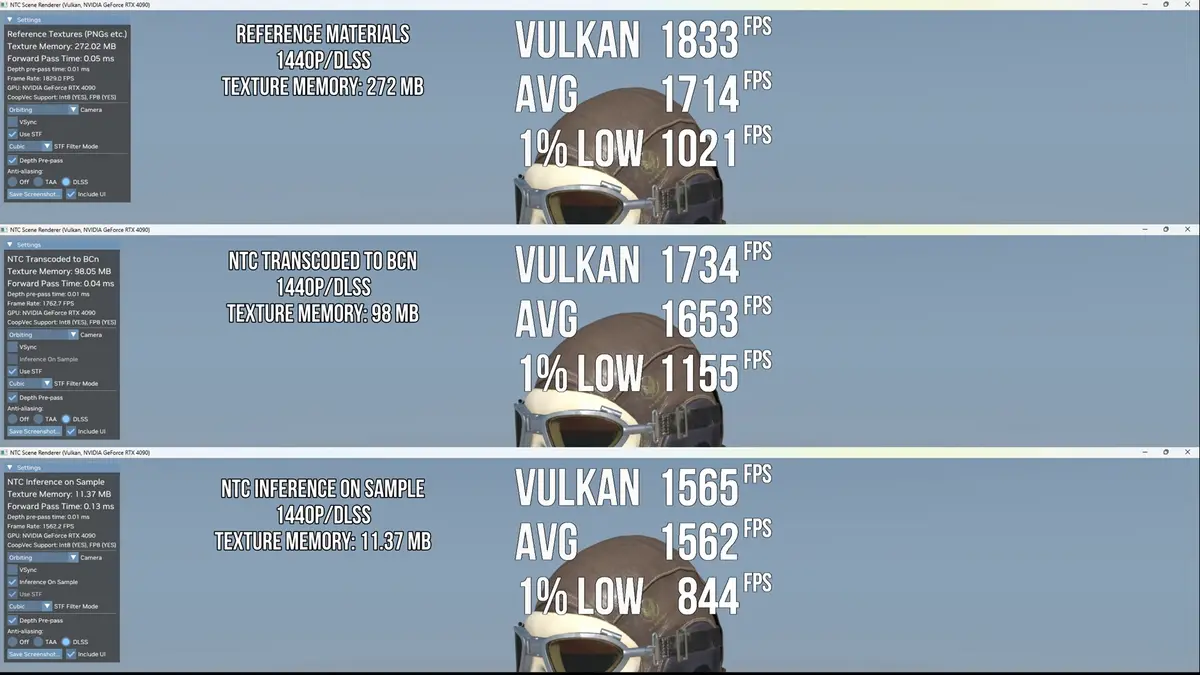

The performance cost is not insignificant.

And comparing it to uncompressed original PNGs is disingenuous. No games are using uncompressed textures.

> revealing a whopping 96% reduction in memory texture size with NTC compared to conventional texture compression techniques

From the article.

You’re correct. I guess they’re comparing against compressed PNGs, judging from the image at least.

But still, I think they’re cherry picking one of the worst formats to make the comparison look good.

That’s not to say this is bad, just that the cost/benefit is not nearly as good as it appears. We need comparisons against ETC or DXT.
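For a rough sense of why the baseline matters, here is a back-of-the-envelope sketch in Python. It uses the standard fixed bit rates of the block formats (DXT1 and ETC2 RGB at 4 bits per pixel, DXT5 and ETC2 RGBA at 8) and looks only at the top mip level of a single 4096×4096 texture. The texture size and the helper names are my own assumptions for illustration, and applying the article’s 96% figure to a DXT5 baseline is a guess at what “conventional” means, not something the article confirms.

```python
# Bits per pixel for fixed-rate formats. Block formats store 4x4 texels
# in 8 bytes (4 bpp) or 16 bytes (8 bpp).
BITS_PER_PIXEL = {
    "RGBA8 (uncompressed)": 32,
    "DXT1 / BC1": 4,
    "DXT5 / BC3": 8,
    "ETC2 RGB": 4,
    "ETC2 RGBA": 8,
}


def texture_mib(width, height, bits_per_pixel):
    """Size of the top mip level in MiB (the mip chain is ignored)."""
    return width * height * bits_per_pixel / 8 / (1024 * 1024)


def reduction_percent(baseline_mib, compressed_mib):
    """How much smaller compressed_mib is than baseline_mib, in percent."""
    return 100.0 * (1.0 - compressed_mib / baseline_mib)


def size_after_reduction(baseline_mib, percent):
    """Size left after shrinking baseline_mib by the given percentage."""
    return baseline_mib * (1.0 - percent / 100.0)


if __name__ == "__main__":
    # Hypothetical 4096x4096 texture, chosen only for illustration.
    width = height = 4096
    raw = texture_mib(width, height, BITS_PER_PIXEL["RGBA8 (uncompressed)"])
    for name, bpp in BITS_PER_PIXEL.items():
        size = texture_mib(width, height, bpp)
        print(f"{name:22s} {size:6.2f} MiB "
              f"({reduction_percent(raw, size):.1f}% smaller than RGBA8)")

    # Assumption: the 96% figure applied to a DXT5 baseline.
    dxt5 = texture_mib(width, height, BITS_PER_PIXEL["DXT5 / BC3"])
    print(f"96% below DXT5: {size_after_reduction(dxt5, 96):.2f} MiB")
```

DXT1 alone already cuts an uncompressed RGBA8 texture by 87.5%, so the same percentage means very different things depending on what it is measured against.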

Call of Duty be like

I would be interested in seeing the performance impact in an actual game scenario. It’s hard to tell what the impact would be from a scene with just a single object in it and with FPS in the hundreds or thousands. Other bottlenecks in an actual game scene could make this cost negligible, or make it a more significant hit to performance.

In case anyone was wondering why FrameView is not open source…

And it’ll only be available on the RTX 6000 series cards, which will start out with 2 GB of VRAM and cost $3000.

This reminds me of the clever printer compression that would just completely replace numbers on documents