UPDATE: @[email protected] has responded

It is temporary, as lemmy.world was cascading duplicates at us and the only way to keep the site up reliably was to temporarily drop them. We’re in the process of adding more hardware to increase RAM, CPU cores and disk space. Once that new hardware is in place we can try turning on the firehose. Until then, please be patient.

ORIGINAL POST:

Starting sometime yesterday afternoon it looks like our instance started blocking lemmy.world: https://lemmy.sdf.org/instances

This is kind of a big deal, because a third of all active users originate there!

Was this decision intentional? If so, could we get some clarification about it? @[email protected]

It is temporary, as lemmy.world was cascading duplicates at us and the only way to keep the site up reliably was to temporarily drop them. We’re in the process of adding more hardware to increase RAM, CPU cores and disk space. Once that new hardware is in place we can try turning on the firehose. Until then, please be patient.

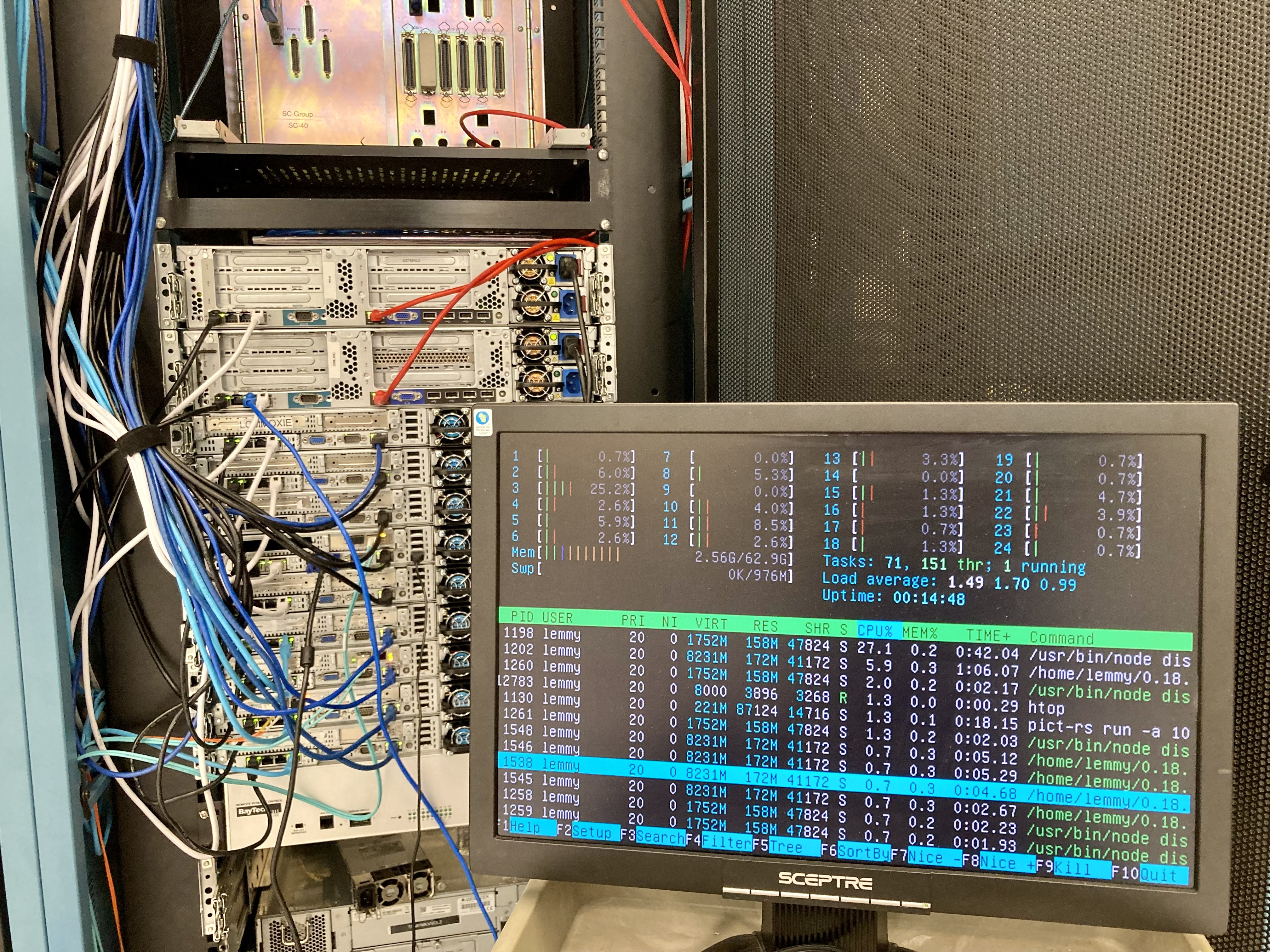

Live updates in progress. Moved to SSDs, added more cores and 128GB and 64GB RAM.

If you’re wondering how to donate, like I was-

Or if you want a button to donate: https://sdf.org/support/

Donated! SDF is what the future of the internet should look like! I love you peeps so much! ♥

And yet it was the 90’s aesthetic of the website that instantly made me sign up. 🤪 It made me so nostalgic.

Edit: Will also be donating ASAP!

This is a good thing. We don’t want another mastodon.social situation.

What happened with that? I’m new.

Hi new, I’m dad.

Honestly, this low effort comment is just to say your username cracked me up.

Here is where we’re at now.

- increased cores and memory, hopefully we never touch swap again

- dedicated server for pict-rs with its own RAID

- dedicated server for lemmy and PostgreSQL with its own RAID

- lemmy-ui and nginx run on both to handle UI requests

Thank you to everyone who stuck around and helped out, it is appreciated. We’re working on additional suggested tweaks from the Lemmy community and hope to let lemmy.world try to DoS us again soon. Hopefully we’ll do much better this time.
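
For readers wondering what dropping "cascading duplicates" could look like in principle, here is a minimal Python sketch. It is not Lemmy's actual implementation; the `SeenActivities` class and `process_inbox` function are hypothetical names, and the only assumption about the data is that each incoming ActivityPub activity carries a unique `id` field.

```python
from collections import OrderedDict


class SeenActivities:
    """Bounded record of activity IDs that were already processed (hypothetical helper)."""

    def __init__(self, max_size: int = 10_000) -> None:
        self.max_size = max_size
        self._seen: "OrderedDict[str, None]" = OrderedDict()

    def is_new(self, activity_id: str) -> bool:
        """Return True the first time an ID is seen, False for repeats."""
        if activity_id in self._seen:
            # Refresh the entry so recently repeated IDs are evicted last.
            self._seen.move_to_end(activity_id)
            return False
        self._seen[activity_id] = None
        if len(self._seen) > self.max_size:
            self._seen.popitem(last=False)  # evict the oldest ID to cap memory use
        return True


def process_inbox(activities, seen):
    """Keep only activities whose "id" has not been handled before."""
    return [a for a in activities if seen.is_new(a["id"])]
```

Capping the record at `max_size` trades perfect deduplication for bounded memory, which matters on a server that is already short on RAM.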

Killer stuff! Sorry for contributing undue pressure on top of what was probably already a taxing procedure happening in the server room.

Out of curiosity: how do you feel about Lemmy performance so far? I’m actually a little bit surprised that we already managed to outstrip the prior configuration. I suppose that inter-instance ActivityPub traffic just punches really hard regardless of intra-instance activity?

What can we (ordinary users) do to help? I’m in Europe, would it be better if I used the SDFeu server as my home instance?

Yes, the fediverse wants to be decentralized, so it is encouraged to use what works best for you. lemmy.sdfeu.org is located in Düsseldorf, Germany.

If this is true I will be making another account and moving to a different server. The only reason I joined this one was that it doesn’t seem to block/defed.

You’re absolutely welcome to do that, as it is your decision and you have many choices. We hope to build a community of folks that would like to help the fediverse grow and support smaller instances. Similar growing pains were seen during the Twitter exodus last September.

Two things that would be great:

- Have a tannoy/horn announcement icon at the top, like with Mastodon, where status information can be posted.

- Change the heart icon to link to a method that supports the local instance

Attempts were made to create a thread for the almost daily upgrades we’re going through with BE and UI changes, but even with pinning it doesn’t have the visibility.

We’re on site in about 1 hour to install a new RAID and once that is completed we’ll finish the transfer of pict-rs data.

You’re all doing an amazing job!

I became an ARPA member this past week and am happy to donate again to chip in for this hardware upgrade.

Edit: donated at the $48 yearly level.

Just donated to do my part to help keep the lights on. Thanks y’all for doing a great job, I am glad I found SDF.