- cross-posted to:

- [email protected]

- [email protected]

- [email protected]

- [email protected]

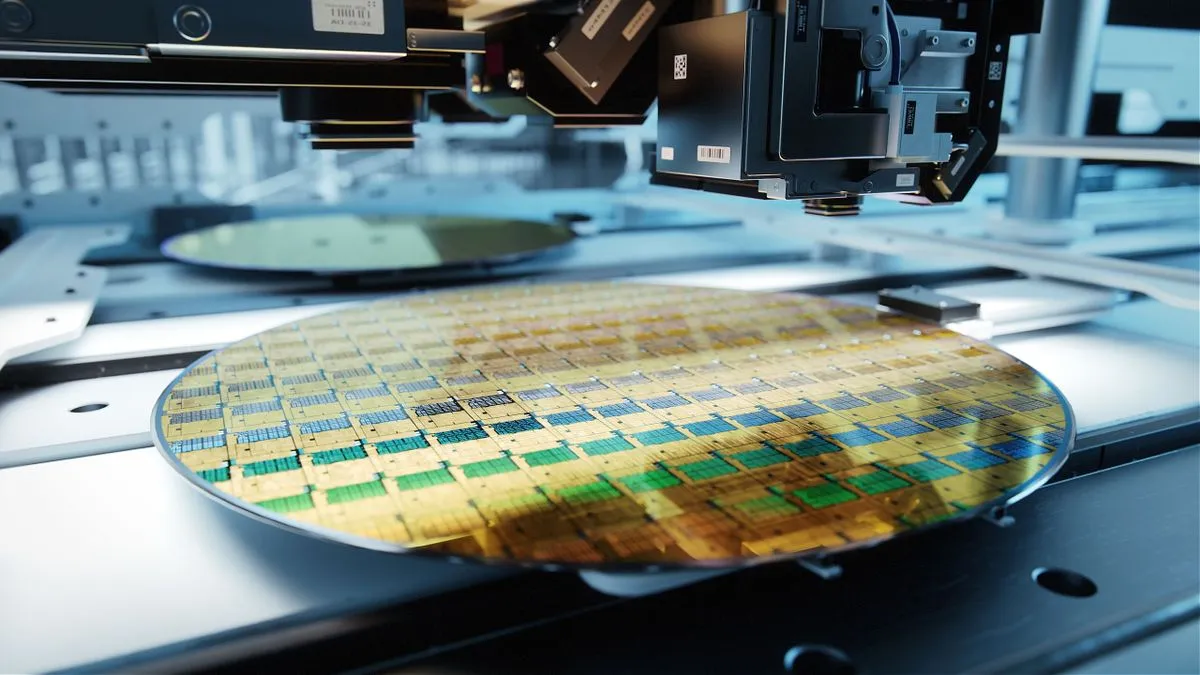

Firm predicts it will cost $28 billion to build a 2nm fab and $30,000 per wafer, a 50 percent increase in chipmaking costs as complexity rises

As wafer fab tools get more expensive, so do fabs and, ultimately, chips. A new report claims that…

Or, and hear me out, we could just write less shitty software…

You’re right. This is the biggest issue facing computing currently.

The ratio of people who are capable of writing less-shitty software to the number of things we want to do with software ensures this problem will not get solved anytime soon.

Eh, I disagree. Every software engineer I've ever worked with knows how to make some optimizations to their code base. But it's literally never prioritized by the business. I suspect this will shift as IaaS takes over and it becomes a lot easier to generate the graphs showing that your product's stability has been maintained while its resource consumption has been reduced.

But what if I want to do all my work inside a JavaScript “application” inside a web browser inside a desktop?

(We really do have so much CPU power these days that we're inventing new ways to waste it…)

But where’s the fun in that?

As long as humans have some hand in writing and designing software we’ll always have shitty software.

While I agree with the cynical view of humans and shortcuts, I think it's actually the “automated” part of the process that's to blame. If you develop an app from scratch, there's only so much you can code. However, if you start with a framework, you've automated part of your job for huge efficiency gains, but you're also starting off with a much bigger app and likely lots of functionality you aren't really using.

What I was getting at is that in software development, it's never just the developers making all of the decisions. There are always stakeholders who pull time and attention toward other things and set unrealistic deadlines, while most software developers I know would love to take the time to do everything the right way the first time.

I also agree with the example you provided. Back when I worked on more personal projects, I loved finding a good minimal framework that let you expand it as needed, so you rarely had unused bloat.

If you're not using the functionality, it's probably not contributing significantly to the required CPU/GPU cycles. Though I would welcome a counterexample.