- cross-posted to:

- [email protected]

- [email protected]

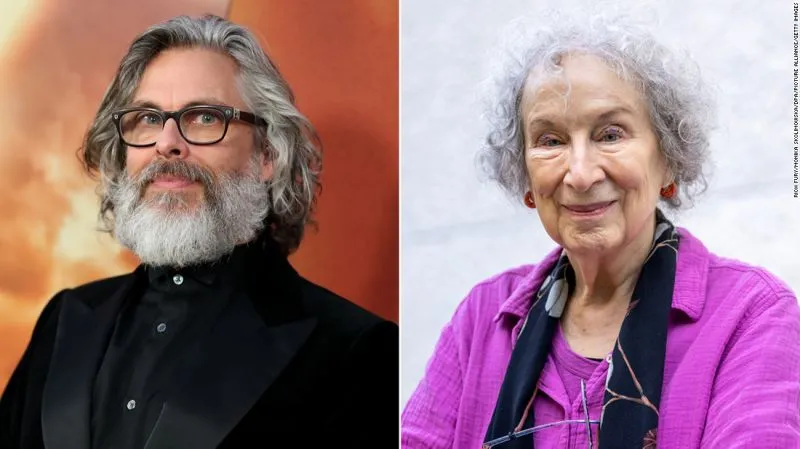

Thousands of authors demand payment from AI companies for use of copyrighted works

Thousands of published authors are requesting payment from tech companies for the use of their copyrighted works in training artificial intelligence tools, marking the latest intellectual property critique to target AI development.

Can you elaborate on this concept of an LLM “plagiarizing”? What do you mean when you say that?

What I mean is that it is a statistical model used to generate things by combining parts of extant works. Everything that it “creates” is a piece of something that already exists, often without the author’s consent. Just because it is done at a massive scale doesn’t make it less so. It’s basically just a tracer.

Not saying that the tech isn’t amazing or likely a component of future AI, but it’s really just being used commercially to rip people off and worsen the human condition for profit.

This describes all art. Nothing is created in a vacuum.

No, it really doesn’t, nor does it function like human cognition. Take this example:

Say I, personally, decide that I want to make a sci-fi show. I don’t want to come up with ideas, so I try to do something that already works. I take the scripts of Star Trek: The Search for Spock, Alien, and Earth Girls Are Easy and feed them into a database, separating the words into individual data entries with some grammatical classification. Then, using this database, I generate a script of the films’ average length, choosing every word based upon its occurrence in the films, or at random if there’s a tie. I go straight into production with “Star Alien: The Girls Are Spock”. I am immediately sued by Disney, Lionsgate, and Paramount for trademark and copyright infringement, even though I basically just used a small LLM.
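For anyone who wants to poke at it, here is a rough Python sketch of the word-frequency generator in this hypothetical. The names `build_word_table` and `generate_script` are made up for illustration, the input strings are short placeholders rather than real scripts, and weighted random sampling is my assumption for what "based upon its occurrence" means; the grammatical classification step is left out.

```python
import random
from collections import Counter

def build_word_table(scripts):
    # Count how often each word occurs across all input texts.
    counts = Counter()
    for text in scripts:
        counts.update(text.lower().split())
    return counts

def generate_script(scripts, seed=0):
    # Assumption: each word is drawn at random, weighted by its frequency,
    # so equally frequent words are chosen between at random.
    rng = random.Random(seed)
    table = build_word_table(scripts)
    words = list(table.keys())
    weights = list(table.values())
    # Output length is the average word count of the inputs.
    target_len = round(sum(len(s.split()) for s in scripts) / len(scripts))
    return rng.choices(words, weights=weights, k=target_len)

# Placeholder inputs, not actual film scripts.
scripts = [
    "space the final frontier",
    "in space no one can hear you scream",
    "earth girls are easy",
]
print(" ".join(generate_script(scripts)))
```

Every word in the output comes from the input texts, and the output length tracks the inputs' average length.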

You are right that nothing is created in a vacuum. However, plagiarism is still plagiarism, even if it is using a technically sophisticated LLM plagiarism engine.

ChatGPT doesn’t have direct access to the material it’s trained on. Go ask it to quote a book to you.

That really doesn’t make an appreciable difference. It doesn’t need direct access to source data, if it’s already been transferred into statistical data.

It does rule out “plagiarism”, however, since it means it can’t pull directly from any training material.

I should have asked earlier: what do you think plagiarism is?

It really doesn’t. The data is just tokenized and encoded into the model (with additional metadata).

If I take the following:

Three blind mice, three blind mice See how they run, see how they run

And encode it based upon frequency:

1: {"word": "three", "qty": 2}
2: {"word": "blind", "qty": 2}
3: {"word": "mice", "qty": 2}
4: {"word": "see", "qty": 2}
5: {"word": "how", "qty": 2}
6: {"word": "they", "qty": 2}
7: {"word": "run", "qty": 2}

The original data is still present, just not in its original form. If I were then to use the data to generate a rhyme and claim authorship, I would both be lying and committing plagiarism, which is the act of attempting to pass someone else’s work off as your own.
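The frequency encoding in this example can be reproduced with a few lines of Python. This is only a sketch of the word-count table described here; the function name `encode_by_frequency` is hypothetical, and the lowercasing and punctuation stripping are my assumptions about how the rhyme was normalized.

```python
import re
from collections import Counter

rhyme = "Three blind mice, three blind mice See how they run, see how they run"

def encode_by_frequency(text):
    # Assumption: lowercase everything and keep only runs of letters.
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(words)
    # Number the entries in order of first appearance.
    return {
        i: {"word": word, "qty": qty}
        for i, (word, qty) in enumerate(counts.items(), start=1)
    }

table = encode_by_frequency(rhyme)
for key, entry in table.items():
    print(key, entry)
```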

Out of curiosity, do you currently or intend to make money using LLMs? I ask because I’m wondering if this is an example of Upton Sinclair’s statement “It is difficult to get a man to understand something when his salary depends on his not understanding it.”

That’s not how LLMs work, and no, I have no financial skin in the game. My field is software QA; I can’t nail down whether it would affect me or not, because I could imagine it going either way. I do know that it doesn’t matter: legislation is not going to stop this, and it’s not even going to do much to slow it down.

What about you? I find that most of the hysteria around LLMs comes from people whose jobs are on the line. Does that accurately describe you?