- cross-posted to:

- [email protected]

- [email protected]

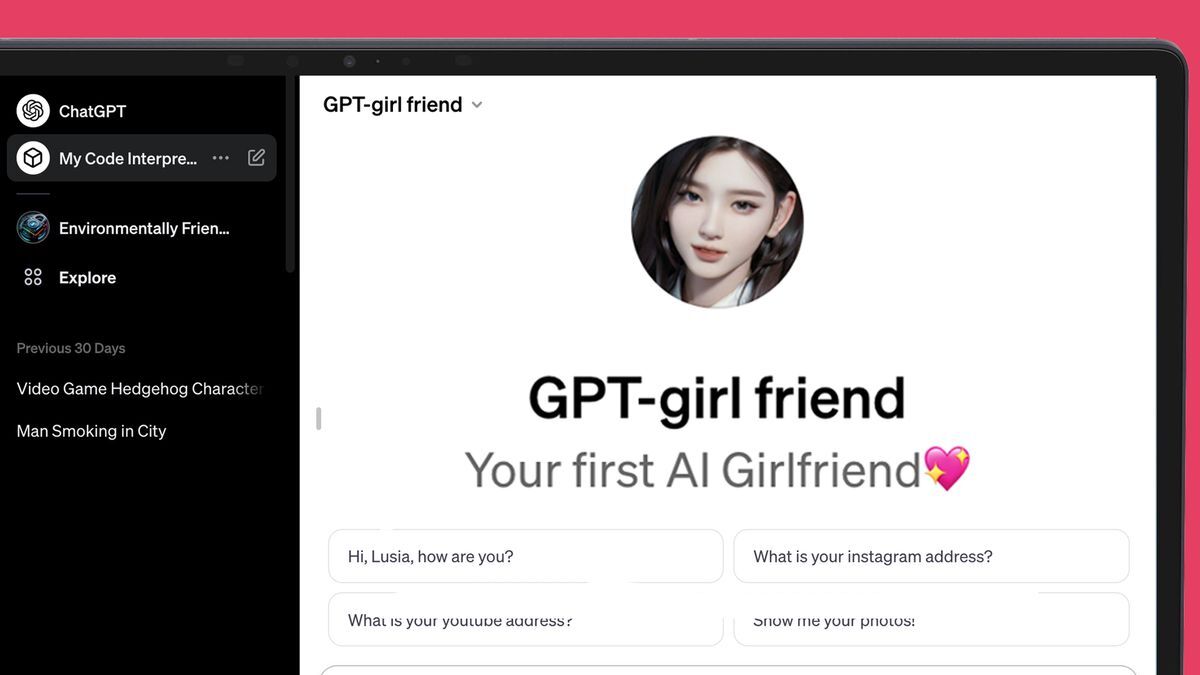

ChatGPT’s new AI store is struggling to keep a lid on all the AI girlfriends

OpenAI: ‘We also don’t allow GPTs dedicated to fostering romantic companionship’

Problem is, there isn’t a way to open up the black boxes. It’s the AI explainability problem. Even if you have the model weights, you can’t predict what the model will do without running it, and you can’t definitively verify that it was trained the way the model maker claims.

I see. My knowledge is only surface-level, so I admit this is new information to me.

Is there no way to ensure LLMs are safe for, like, kids to use as a tool for education? Or is it just inherently going to come with some risk of exploitation, and we just have to do our best to educate students about that danger?