Yeah, trap music is a good example; that shit was everywhere for a while.

Indie sleaze is another.

Mumblecore movies.

“Prestige TV” literally ongoing.

I agree that anti-intellectualism is bad, but I wouldn’t necessarily consider being AI-negative by default a form of anti-intellectualism. It’s the same thing as people who are negative on space exploration. It’s a symptom of a situation where there seems to be infinite money for fads, scams, and bets, things that have limited practical use in people’s lives, and ultimately not enough to support people.

That’s really where I see those arguments coming from. AI is quite honestly a frivolity in a society where housing is a luxury.

I think the need to have a shared monoculture is a deeply reactionary way of thinking that prevents us from developing human empathy. You don’t need to say “Bazinga” at the same time as another person in order for you to relate to, care for, and understand strangers. I think the yearning for monoculture in people 25-40 is a mirror of boomers who complain that they cannot relate to kids anymore because nobody really believes in the Pledge of Allegiance or some such other “things r different” nonsense. Yeah, I haven’t played Hoop and Stick 3; we don’t need to play the same video games to relate to each other.

It’s a crutch for a brutal culture where you are too scared to show a modicum of openness or vulnerability with other humans because deep down you need to be reassured that they won’t scam/harm you simply because they believe in the magic words of Burgerstan. People are uncomfortable with change and things they don’t know because we’ve built a society where change often begets material vulnerability in people, and information and even cultural media have become a weapon to be used against others.

Monoculture was never good, it simply was. Also, despite this being a real aesthetic trend, you should remember that the vast majority of consumer technology produced at the same time was not clear plastic tech. If anything the monoculture of tech products of that era was that gross beige that yellows in about a year or two. It’s just not aesthetic enough to remember, and in 10 years everything just defaulted to black. I’ve actually never seen a clear plastic Dreamcast or Dreamcast controller IRL. I’ve been a tech guy forever and, despite knowing about it, I only know of 1 person that had actually experienced the Dreamcast internet. This is very much nostalgia bait vs. how things actually were.

To put it into perspective for one of those phones with clear plastic, there were 10,000 of these

I should have been more precise, but this is all in the context of news about a cutting-edge LLM using a fraction of the cost of ChatGPT, and comments calling it all “reactionary autocorrect” and “literally reactionary by design”.

I disagree that it’s “reactionary by design”. I agree that its usage is 90% reactionary. Many companies are effectively trying to use it in a way that attempts to reinforce their deteriorating status quo. I work in software, so I always see people calling this shit a magic wand for the problems of the falling rate of profit and the falling rate of production. I’ll give you an extremely common example that I’ve seen across multiple companies and industries.

Problem: Modern companies do not want to be responsible for the development and education of their employees. They do not want to pay for the development of well functioning specialized tools for the problems their company faces. They see it as a money and time sink. This often presents itself as:

I’ve seen the following be pitched as AI Bandaids:

Proposal: push all your documentation into a RAG LLM so that users simply ask the robot and get what they want

Reality: The robot hallucinates things that aren’t there in technical processes. Attempts to get the robot to correct this involve the robot sticking to marketing-style vagaries that aren’t even grounded in the reality of how the company actually works (things as simple as the robot assuming how a process/team/division is organized rather than the reality). Attempts to simply use it as a semantic search index end up linking to the real documentation, which is garbage to begin with and doesn’t actually solve anyone’s real problems.

Proposal: We have too many meetings and spend ~4 hours on zoom. Nobody remembers what happens in the meetings, nobody takes notes, it’s almost like we didn’t have them at all. We are simply not good at working meetings and it’s just chat sessions where the topic is the project. We should use AI features to do AI summaries of our meetings.

Reality: The AI summaries cannot capture action items correctly if at all. The AI summaries are vague and mainly result in metadata rather than notes of important decisions and plans. We are still in meetings for 4 hours a day, but now we just copypasta useless AI summaries all over the place.

Don’t even get me started on CoPilot and code generation garbage. Or making “developers productive”. It all boils down to a million monkey problem.

These are very common scenarios that I’ve seen that ground the use of this technology in inherently reactionary patterns of social reproduction. By the way, I do think DeepSeek and Doubao are an extremely important and necessary step because they destroy the status quo of Western AI development. AI in the West is made to be inefficient on purpose because it limits competition. The fact that you cannot run models locally due to their incredible size and compute demand is a vendor lock-in feature that ensures monetization channels for Western companies. The PayGo model bootstraps itself.

The problems that symbolic AI systems ran into in the 70s are precisely the ones that deep neural networks address.

Not in any meaningful way. A statistical model cannot address the Frame problem. Statistical models themselves exacerbate the problems of connectionist approaches. I think AI researchers aren’t being honest with the causality here. We are simply fooling ourselves and willfully misinterpreting statistical correlation as causality.

You’re right there are challenges, but there’s absolutely no reason to think they’re insurmountable.

Let me repeat myself for clarity. We do not have a valid general theory of mind. That means we do not have a valid explanation of the process of thinking itself. That is an insurmountable problem that isn’t going to be fixed by technology itself because technology cannot explain things, technology is constructed processes. We can use technology to attempt to build a theory of mind, but we’re building the plane while we’re flying it here.

I’d argue that using symbolic logic to come up with solutions is very much what reasoning is actually.

Because you are a human doing it, you are not a machine that has been programmed. That is the difference. There is no algorithm that gives you correct reasoning every time. In fact using pure reasoning often leads to lulzy and practically incorrect ideas.

Somehow you have to take data from the senses and make sense of it. If you’re claiming this is garbage in garbage out process, then the same would apply to human reasoning as well.

It does. Ben Shapiro is a perfect example. Any debate guy is. They’re really good at reasoning and not much else. Like, read the Curtis Yarvin interview in the NYT. You’ll see he’s really good at reasoning, so good that he accidentally makes some good points and owns the NYT at times. But more often than not the reasoning ends up in a horrifying place that isn’t actually novel or unique, simply a rehash of previous horrifying things in new wrappers.

The models can create internal representations of the real world through reinforcement learning in the exact same way that humans do. We build up our internal world model through our interaction with environment, and the same process is already being applied in robotics today.

This is a really Western-brained idea of how our biology works, because as complex systems we work on inscrutable ranges. For example, let’s take some abstract “features” of the human experience and understand how they apply to robots:

Strength. We cannot build a robot that can get stronger over time. Humans can do this, but we would never build a robot to do this. We see this as inefficient and difficult. This is a unique biological aspect of the human experience that allows us to reason about the physical world.

Pain. We would not build a robot that experiences pain in the same way as humans. You can classify pain inputs. But why would you build a machine that can “understand” pain. Where pain interrupts its processes? This is again another unique aspect of human biology that allows us to reason about the physical world.

drug discovery

This is mainly hype. The process of creating AI has been useful for drug discovery; LLMs as people practically know them (e.g. ChatGPT) have not, other than for the same kind of sloppy, corner-cutting labor cost reduction bullshit.

If you read a lot of the practical applications in the papers it’s mostly publish or perish crap where they’re gushing about how drug trials should be like going to cvs.com where you get a robot and you can ask it to explain something to you and it spits out the same thing reworded 4-5 times.

They’re simply pushing consent protocols onto robots rather than nurses, which TBH should be an ethical violation.

Neurosymbolic AI is overhyped. It’s just bolting LLMs onto symbolic AI and pretending that it’s a “brand new thing” (it’s not; it’s actually how most LLMs practically work today and have for a long time, and GPT-3 itself is neurosymbolic). The advocates of this approach pretend that the “reasoning” comes from symbolic AI, also known as classical AI, which still suffers from the exact same problems that it did in the 1970s when the first AI winter happened, because we do not have an algorithm capable of representing the theory of mind, nor do we have a realistic theory of mind to begin with.

Not only that but all of the integration points between classical techniques and statistical techniques present extreme challenges because in practice the symbolic portion essentially trusts the output of the statistical portion because the symbolic portion has limited ability to validate.

Yeah you can teach ChatGPT to correctly count the r’s in strawberry with a neurosymbolic approach but general models won’t be able to reasonably discover even the most basic of concepts such as volume displacement by themselves.

You’re essentially back at the same problem, where you either lean on the symbolic aspects and limit yourself entirely to advanced ELIZA-like functionality that can just use a classifier, or you throw yourself on the mercy of the statistical model and pray you have enough symbolic safeguards.

Either way it’s not reasoning, it is at best programming – if that. That’s actually the practical reason why the neurosymbolic space is getting attention because the problem has effectively been to be able to control inputs and outputs for the purposes of not only reliability / accuracy but censorship and control. This is still a Garbage In Garbage Out process.

FYI most of the big names in the “Neurosymbolic AI as the next big thing” space hitched their wagon to Kahneman’s Thinking, Fast and Slow bullshit, which is effectively made-up bullshit like Freudianism but lamer and has essentially been squad wiped by the replication crisis.

Don’t get me wrong, DeepSeek and Doubao are steps in the right direction. They’re less proprietary, less wasteful, and broadly more useful, but they aren’t a breakthrough in anything but capitalist hoarding of technological capacity.

The reason AI is not useful in most circumstances is the underlying problems of the real world, and you can’t algorithm your way out of people problems.

Dawn of War 1. Better yet just computerize table top. I’m antisocial :(

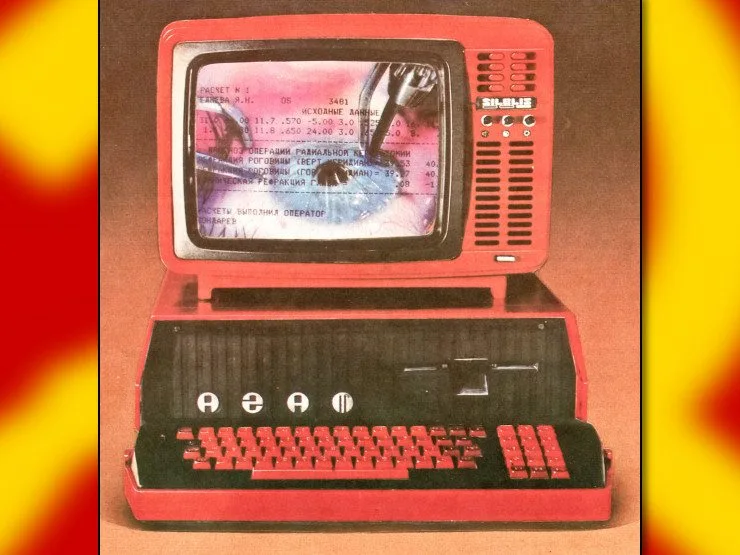

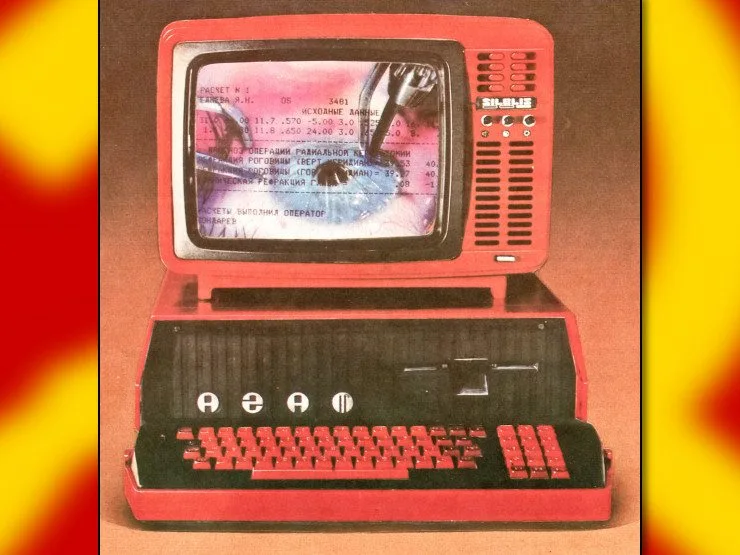

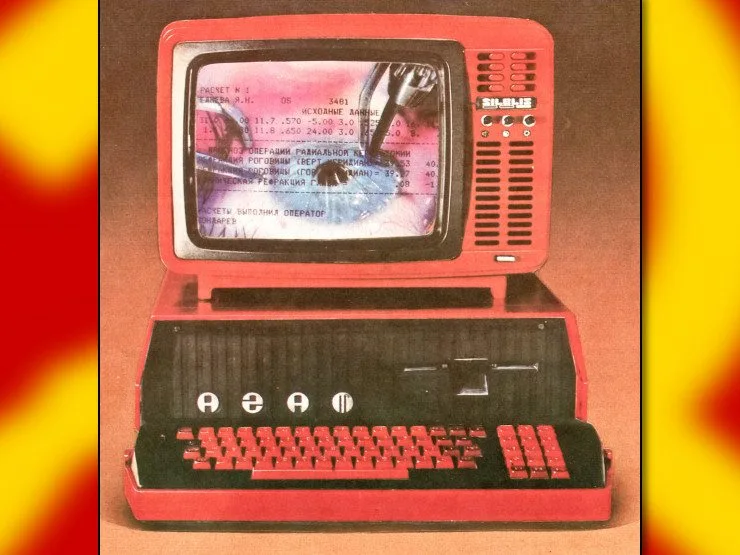

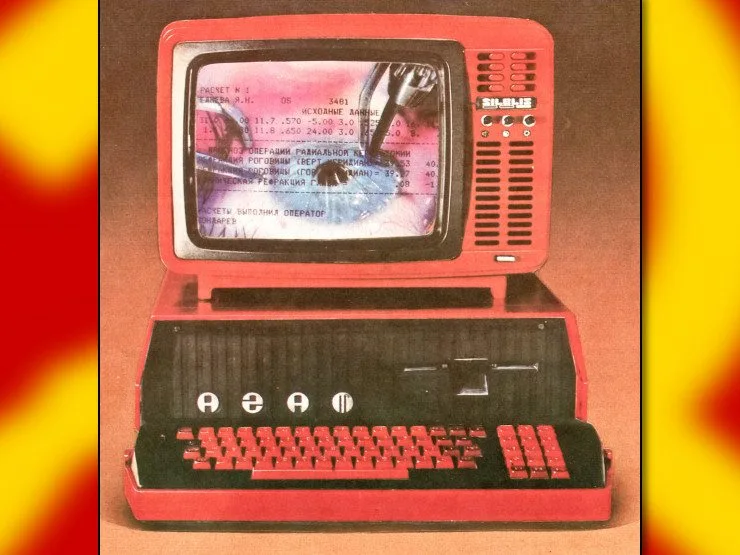

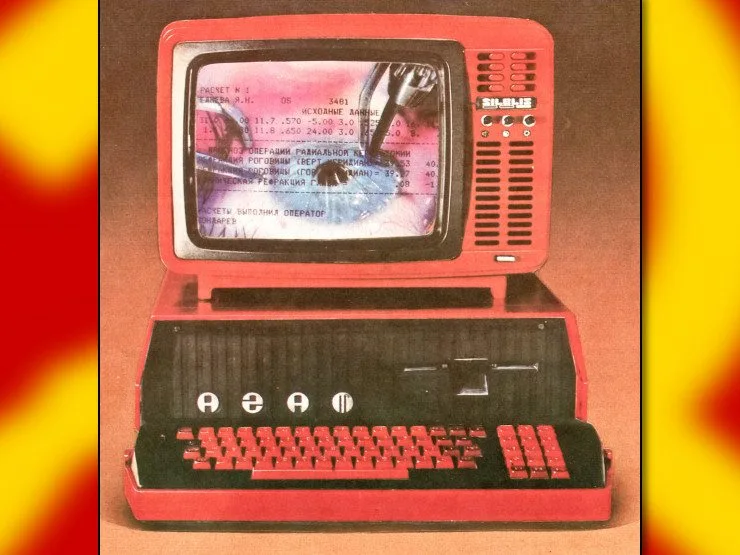

I was a really young nerdy kid, and coming from the Soviet Union like the only thing I cared about was computer. I was obsessed with computer since playing Doom as a kid in a cyber cafe. I got my first computer at the age of 8-9 after we had immigrated. I was about 10 years old when I was trolling AOL chat rooms by myself… and I had a lovely 640x480 web cam… and yeah. A lot of this brings up uneasy memories.

I think the horny categorization does fit me. I’m not like a gooner or anything but my partner would agree 100% with the statement: “thinks I’m about to pounce on them and always waits for me to initiate everything. Why people basically see horny as one of my personality traits.”

I don’t experience issues with non-sexual intimacy, but I wanted to let you know that you’re not alone!

General strikes are escalated to, not planned. That’s why the AFL, the most idiotic union, basically banned escalations into a general strike by requiring striking locals to have national authorization or risk getting kicked out of the union. This was in response to the Seattle General Strike, which happened in Feb 1919; the AFL amended its constitution in June 1919. Similarly, Taft-Hartley, which outlaws general strikes in the US, was passed in 1947 in response to the Oakland General Strike of 1946.

Also lol at #3 what is this? 2012?

The reason I disagree is that “dog/cat food” implies it’s something that is widely eaten culturally, a “default meal” of sorts. I don’t think pate fits the bill there; most Americans cannot handle offal. Nuggies sure, but not really pate.

he believes it would actually change things if the facts came out and it was actually the CIA behind it

Ah yes the classic “force the vote” argument.

I think that criterion is a bit too loose. In the American context liver pate would qualify.

Should be fine. Taste may be ever so slightly affected if you don’t wash them. They’re not really being cooked, and the smoke isn’t concentrated enough at that distance to “cold smoke” them. In a perverse way they might actually come out better because smoke carries potassium, which is part and parcel of plant nutrition.

In reality, given the last several years of crazy North American wildfires, almost everyone has eaten produce that was affected by wildfire smoke in this way.

if time travelers kidnapped a few thousand scientists, engineers, and so forth from the present and took them back thousands of years and dropped them naked in the post-glacial northern european steppes, … they certainly aren’t building a chip manufacturing plant to start making computers.

You can blame British adventure novels for this bullshit idea. Every other day a Kickstarter of “THE END OF THE WORLD BOOK WHERE YOU CAN BUILD COMPUTER FROM SAND AFTER NUCLEAR BOMB” gets launched.

the apparent contrast of “low-tech” and “high-tech” is only going to feel like a contradiction to people (like me) who have internalized liberal economic theory based on the notion that value is ultimately created by the very smartest of fail-children having the very best ideas.

As an immigrant from the former Soviet Union, I’d say it’s not about liberal economic theory notions. Plenty of post-Soviet liberal morons out there with the same notions. It’s living in a rich country where you are isolated from the practicalities of the systems that shape your life.

This Archer clip on the origin of meat (before it becomes dictatorial lulziness) sums up the distinction nicely:

https://youtu.be/JHMJxFICUjk?t=57

One of the things that most of the Soviet intelligentsia complained about was the fact that as students they had to work on kolkhozes (communal farms) during college summer break. But because of that, everyone in the Soviet Union effectively had an intimate and direct relationship with their food supply chain. At some point (when all forms of backfilling fail, e.g. trade, stockpiling, etc.), someone has to pick the food, fire or no fire. Otherwise nobody eats. The practical problem here is how to make that as fair and as safe as possible. This is why communist theory is the theory of misery.

The main thing to understand about ALL modern monetary theories is that all monetary systems practically borrow from the future based on the idea that initial investments into a technology/product/service are the most expensive and future developments lower the cost of production.

This idea is patently false, because it assumes that the economic inputs into those production systems themselves are:

Systems built on the Labor Theory of Value also typically fall into this trap, as the underlying labor cost has tended to decrease over the last several centuries due to technological advances. The practical issue here is that most monetary systems and theories are built from small example bases of limited experience (especially in the post-WW2 West) and then expected to work in the abstract.

Having a theory of value is not actually important in a capitalist society. It is actually detrimental to the economics of a capitalist society because it binds arbitrage, and it prevents capitalist societies from uneven extraction and development (also not inherently a bad thing). Most argumentation against theories of value doesn’t come from a scientific place, to be honest. Speculative value isn’t inherently wrong or bad either; it just has different side effects. Labor theory says that changes in labor cost change the value of a commodity; speculative theory says that changes in attitudes toward or needs for a commodity change its value.

Labor theory of value cannot fully encompass waste, and when it creates waste it does so because of the attitudes/needs of the people in the economy (e.g. we make expensive widgets no one buys because we need jobs). Remember, the Soviet Union needed to create make-work jobs because unemployment was illegal and just giving people money did not sit well with societal values the way they were. Arguably state capitalist societies would do better with speculative value and just giving people money and letting them decide how to spend their time, given good communal opportunities, rather than creating make-work jobs. The issue is that labor theory of value requires the bootstrap of a developed industrial economy, every single person’s basic needs met, a socialist society that isn’t driven by individual envy, and a voluntary vocational system to work properly without falling into contradiction. That’s a tall order, the social aspects of which need generations to be built without resorting to punitive legalism.

MMT itself falls into the above traps, but I think the interpretation here is incorrect. MMT is not “money printer go brrr”. MMT says that the money printer is already going brrr and the government refuses to change who can access the print tray. The vast majority of money in the US is created not by the federal government but by the constituent banks of the Federal Reserve. This is quite literally the apex definition of “private capital”: a group of unelected, unaccountable organizations headed by de facto oligarchs that effectively decide for what purpose society creates money. MMT in practice has the same guard rails and pitfalls as the existing system. MMT simply says that because monetary creation is happening in the private sector, the government is de facto backing that creation through various programs, new legislation, and other assurances. In essence the government is creating this money by proxy anyway, and it should take an active role in money creation for the purposes of social benefit.

I think the biggest criticism of MMT is that it cannot work in an underdeveloped country, and arguably it cannot work outside the specific parameters of the United States and the petrodollar. That is absolutely correct.

MMT is the iteration of using “tricks of circulation” to save Capitalism, similar to views espoused by Proudhon which Marx critiqued in Capital and the Grundrisse.

This is also absolutely correct, but it is not a bad thing. The reality of “building” socialism is that societies must keep advancing. MMT, despite being riddled with contradictions, overly specific to the US empire, and ultimately a rhetorical accounting trick, does seek to create an evolution of spending on public good rather than a devolution of the US monetary system where both the federal government and the constituent banks of the Fed are simply exchanges where public money is funneled into private coffers.

I think it’s really important to separate the theoretical complaints from the practical purposes here. In order to align systems without destroying them you have to make changes in steps. From a Marxist perspective, MMT is simply a step. So ultimately, from a Marxist perspective, what is the use of MMT?

If you boil it down all Marxist critiques of MMT are “it’s not communism”, which is true on its face. Ultimately neither was any state capitalism under Soviet like systems and neither is the current Chinese economic system. We can argue about the “intent” of various MMT theorists if we want to put this discussion into a China-style frame (e.g. we intend to build communism post capitalist development), but that’s putting the cart before the horse.

Haha yeah. I remember the first one where it went from flat to skeuomorphic, that was OS/2 and System 6 to Windows 3 and System 7.

I just got a little fixated on the Dreamcast being representative of monoculture.