They terk our hertz.

Dey terk er hertz.

Trk r hrtz

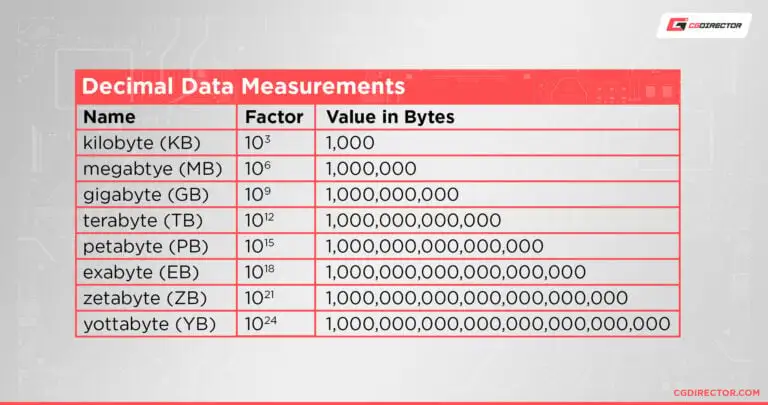

Wait until they find out how much space is on their 2TB SSD…

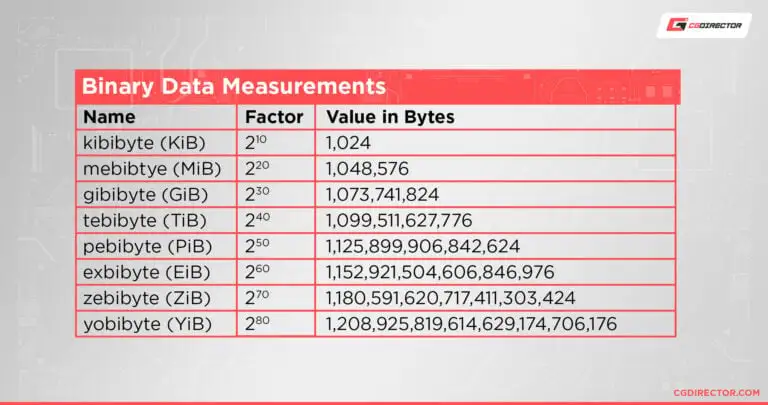

That difference is because the drive manufacturer advertises capacity in Terabytes (or Gigabytes) and the operating system displays drive capacity in Tebibytes (or Gibibytes). The former unit is based on 1000 and the latter unit is based on 1024, which lets the drive manufacturer put a bigger number on the product info while technically telling the truth. The drive does have the full advertised capacity.
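For anyone who wants to check the numbers, here is a rough sketch of the conversion. The function name is made up for illustration, and it assumes the OS divides the raw byte count by 2^40 when it shows "TB".

```python
TB = 10**12   # terabyte, SI prefix (base 1000)
TiB = 2**40   # tebibyte, binary prefix (base 1024)

def advertised_tb_to_tib(terabytes: float) -> float:
    """Convert an advertised capacity in TB to the TiB figure an OS would show."""
    return terabytes * TB / TiB

# A "2 TB" drive, expressed in TiB
print(f"{advertised_tb_to_tib(2):.2f} TiB")  # 1.82 TiB
```

Same number of bytes either way, only the unit changes.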

Hz are Hz though

8 Hitz = 1 Hert

I am honestly unsure if he’s joking about the imaginary kilobyte. Quantum computing is freaky.

the drive manufacturer advertises capacity in Terabytes (or Gigabytes) and the operating system displays drive capacity in Tebibytes (or Gibibytes). The former unit is based on 1000 and the latter unit is based on 1024, which lets the drive manufacturer put a bigger number on the product info while technically telling the truth.

I understand why storage manufacturers do this and I think it makes sense. There is no natural law that dictates the physical medium to be in multiples of 2^10. In fact it is also the agreed-upon way to state clock speeds. Nobody complains that their 3 GHz processor only has 2.X GiHz. So why is it a problem with storage?

Btw, if you want to see a really stupid way to “use” these units, check the 3.5" floppy capacity:

The double-sided, high-density 1.44 MB (actually 1440 KiB = 1.41 MiB or 1.47 MB) disk drive

So they used 2^10 x 10^3 as the meaning of “M”, mixing them within one number. And it’s not like this was something off in a manual or something, this was the official capacity.
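The floppy arithmetic, spelled out as a quick sketch (variable names are just for illustration):

```python
KiB = 2**10

capacity_bytes = 1440 * KiB              # 1,474,560 bytes on the disk

# The "official" megabyte: 2^10 x 10^3 bytes, mixing both bases
floppy_mb = capacity_bytes / (KiB * 10**3)
mib = capacity_bytes / 2**20             # binary megabytes
mb = capacity_bytes / 10**6              # SI megabytes

print(f"{floppy_mb:.2f} 'MB' (mixed), {mib:.2f} MiB, {mb:.2f} MB")
# 1.44 'MB' (mixed), 1.41 MiB, 1.47 MB
```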

so why is it a problem with storage?

Because it gets worse with size. 1 KB is just about 2.3% less than 1 KiB. But 1 TB is about 9% less than 1 TiB. So your 12 TB archive drive is less like 11.9 TiB and more like 11 TiB. An entire “terabyte” less.
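A small sketch of how the gap grows with each prefix step. The helper name is hypothetical; it just compares 1000^n against 1024^n.

```python
PREFIXES = [("K", 1), ("M", 2), ("G", 3), ("T", 4)]

def shortfall_percent(power: int) -> float:
    """How much smaller 1000**power is than 1024**power, in percent."""
    return (1 - 1000**power / 1024**power) * 100

for name, power in PREFIXES:
    print(f"1 {name}B is {shortfall_percent(power):.2f}% smaller than 1 {name}iB")

# The 12 TB archive drive expressed in TiB
twelve_tb_in_tib = 12 * 1000**4 / 1024**4
print(f"12 TB = {twelve_tb_in_tib:.2f} TiB")
```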

Btw ISPs do the same.

Data transfers have always been in base 10. And disc manufacturers are actually right. If anything, it’s probably Microsoft that has popularised the wrong way of counting data.

It has nothing to do with wanting to make disks be bigger or whatever.

Why is it the wrong way when it’s the way the underlying storage works?

Underlying storage doesn’t actually care about being in powers of 2^10 or anything, it’s only the controllers that do, but not the storage medium. You’re mixing up the different possibilities to fill your storage with (which is 2^(number of bits)).

Looking at triple layer cell SSDs, how would you ever reach a 2^10, a 2^20 or 2^30 capacity when each physical cell represents three bits? You could only do multiples of three. So you can do three gibibytes, but that’s just as arbitrary as any other configuration.
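To illustrate the triple-level-cell point, a minimal sketch, assuming an idealized SSD where every cell stores exactly three bits and ignoring spare area and error correction (the function name is made up):

```python
GiB = 2**30

def cells_needed(capacity_bytes: int, bits_per_cell: int = 3) -> tuple[int, int]:
    """Return (whole cells, leftover bits) for a given capacity."""
    return divmod(capacity_bytes * 8, bits_per_cell)

print(cells_needed(1 * GiB))  # leftover bits are non-zero: 1 GiB doesn't divide evenly
print(cells_needed(3 * GiB))  # exactly 2**33 cells, nothing left over
```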

It’s just the agreed metric for all capacities except for RAM. Your Gigabit network card also doesn’t transfer a full Gibibit (or 128 Mebibytes) in a single second. Yet nobody complains. Because it’s only the operating system manufacturer that thinks his product needs AI that keeps using the prefix wrongly (or at least did, I’m not up to speed). Everyone else either uses SI units (Apple) or correctly uses the “bi”-prefixes.
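The network card numbers, as a quick sketch (this only looks at the raw line rate and ignores protocol overhead):

```python
GIGABIT = 10**9   # bits, SI prefix
GIBIBIT = 2**30   # bits, binary prefix
MB = 10**6
MiB = 2**20

line_rate_mb = GIGABIT / 8 / MB     # what a Gigabit link moves per second, in MB
line_rate_mib = GIGABIT / 8 / MiB   # the same amount in MiB
gibibit_mib = GIBIBIT / 8 / MiB     # what a full Gibibit would be, in MiB

print(f"{line_rate_mb:.1f} MB/s = {line_rate_mib:.1f} MiB/s; a Gibibit is {gibibit_mib:.0f} MiB")
# 125.0 MB/s = 119.2 MiB/s; a Gibibit is 128 MiB
```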

A twelve core 3 GHz processor is also cheating you out of a 2.4 GiHz core by the same logic. It’s not actually 3 x 2^30 Hz.
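Tongue-in-cheek, but the arithmetic works out roughly like this ("GiHz" is of course not a real unit, it is only used here to mirror the storage argument):

```python
GIGA = 10**9
GIBI = 2**30

cores = 12
clock_hz = 3 * GIGA

per_core_gihz = clock_hz / GIBI             # one 3 GHz core in "GiHz"
shortfall = cores * (3 - per_core_gihz)     # total "missing GiHz" across all cores

print(f"{per_core_gihz:.2f} GiHz per core, {shortfall:.2f} GiHz 'missing' in total")
# 2.79 GiHz per core, 2.47 GiHz 'missing' in total
```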

The actual underlying storage is defined by elements which are either 1 or 0. Also there were several decades during which the people who actually invented and understood the tech used powers of 2, and the only parties using powers of 10 were business people trying to inflate the virtue of their product.

Just because the data is stored digitally doesn’t mean that its capacity has to be in powers of two. It just means the possible amount of different data that can be stored is a power of two.

Using SI prefixes to mean something different has always irked me as an engineer.

Also there were several decades during which the people who actually invented and understood the tech used powers of 2

For permanent storage or network transfer speeds? When was that? Do you have examples?

Instead, by waiting 50 years to change it, we ended up in the worst of all possible worlds, with people using SI and binary prefixes interchangeably. Also I fucking hate the shitty prefixes they picked.

I believe OP was referring to the space lost after the drive is formatted. Maybe?

Yes.

When a drive is close to full capacity, those MBs lost due to units or secondary partitions from the format are the least of the worries. It’s time for an additional or larger drive, or look at what is taking so much space.

The difference between what the OS reports and the marketing info on the product (GBs of difference) is almost entirely due to the different units used, because the filesystem overhead will only be MBs in size.

Also the filesystem overhead is not lost or missing from the disk, you’re just using it

There is also reserve space for replacing bad sectors

True, but that is not counted towards the advertised capacity for SSDs

Bet even if you actually get the number of bytes from the raw block device, it won’t match that advertised number.

If it’s a SSD, it might. They are overprovisioned for wear-levelling, reducing write amplification, and remapping bad physical blocks.

I wouldn’t trust one off of Wish, but any reputable consumer brand will be overprovisioned by 2-8% of the rated capacity. For 1 TB, it would take nearly 10% to match the rated capacity in base-2 data units instead of SI units, but enterprise drives might reach or exceed that figure.
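A quick sketch of the gap a 1 TB drive would have to cover to hold a full 1 TiB. The percentage depends on which figure you measure against, so both are shown:

```python
TB = 10**12
TiB = 2**40

gap_bytes = TiB - TB

extra_vs_tb = gap_bytes / TB * 100    # extra capacity needed, relative to the rated 1 TB
short_vs_tib = gap_bytes / TiB * 100  # how far 1 TB falls short, relative to 1 TiB

print(f"{extra_vs_tb:.2f}% on top of 1 TB, or {short_vs_tib:.2f}% short of 1 TiB")
# 9.95% on top of 1 TB, or 9.05% short of 1 TiB
```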

My 1TB and 12TB drives are 1000204886016 and 12000138625024 bytes respectively. Looks like between the two I got a whole 343 MB for free!
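Checking those two byte counts against the nominal SI capacities (the byte counts are the ones quoted above, everything else is just illustration):

```python
# (label, nominal bytes, bytes reported by the raw block device)
drives = [
    ("1 TB", 1 * 10**12, 1000204886016),
    ("12 TB", 12 * 10**12, 12000138625024),
]

extra_bytes = 0
for label, nominal, actual in drives:
    bonus = actual - nominal
    extra_bytes += bonus
    print(f"{label}: {bonus / 10**6:.1f} MB over nominal, {actual / 2**40:.2f} TiB")

print(f"Total bonus: {extra_bytes / 10**6:.1f} MB")  # 343.5 MB
```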

Does anyone ever actually use those prefixes? I’ve personally just couched the difference into bytes vs bits.

Bytes vs bits is a completely different discrepancy that usually comes up with bandwidth measurements.

So there are: Kilobytes, Kibibytes, Kilobits, and Kibibits
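Put side by side, with everything expressed in bits (assuming the usual 8 bits per byte):

```python
BITS_PER_BYTE = 8

UNITS_IN_BITS = {
    "kilobit (kb)": 1000,
    "kibibit (Kib)": 1024,
    "kilobyte (kB)": 1000 * BITS_PER_BYTE,
    "kibibyte (KiB)": 1024 * BITS_PER_BYTE,
}

for name, bits in UNITS_IN_BITS.items():
    print(f"1 {name} = {bits} bits")
```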

Pretty much only when talking about the difference like here. 🙃

oh no

I’d love to know the technical reasoning for this. It probably has to do with clock frequency multipliers like when doing serial comms your baud needs to be pretty close to the spec but it’s pretty much never going to be exact.

I’ve only partially researched this, but I’m pretty sure it’s actually an ancient carryover from when NTSC changed to colour. Wikipedia says it switched from 60 Hz to 59.94 Hz “to eliminate stationary dot patterns in the difference frequency between the sound and color carriers”. They just kind of kept doubling it and we ended up with these odd refresh rates, I’m guessing for NTSC TV compatibility.
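A sketch of where the odd numbers come from, assuming the usual 1000/1001 NTSC colour adjustment. That exact factor is not stated in this thread; it is what turns 60 into 59.94.

```python
from fractions import Fraction

# Assumption: NTSC colour rates are the nominal rate scaled by 1000/1001
NTSC_FACTOR = Fraction(1000, 1001)

def ntsc_rate(nominal_hz: int) -> float:
    """Nominal rate scaled by the NTSC colour factor."""
    return float(nominal_hz * NTSC_FACTOR)

for nominal in (30, 60, 120):
    print(f"{nominal} -> {ntsc_rate(nominal):.2f}")
# 30 -> 29.97
# 60 -> 59.94
# 120 -> 119.88
```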

Television is 29.97 fps.

Maybe your TV. Mine’s multilinghz

NTSC is? I don’t even remember this shit anymore lol

Someone’s never bought a 2x4

I hate that you’re right

My one frame every 50 sec is very important to me.

Blame NTSC

Good ol’ Never Twice the Same Color

I wonder why they never seem to compute these things correctly. Usually you just need to keep the remainder of the last frame’s “hz” and add it to the next frame’s computation.
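Something like this is what carrying the remainder could look like. It is only a sketch with made-up names, assuming frame times are counted in integer ticks (microseconds here) and the rate is given as a fraction such as 60000/1001:

```python
from typing import Iterator

def frame_durations(rate_num: int, rate_den: int,
                    ticks_per_second: int, frames: int) -> Iterator[int]:
    """Yield integer frame durations whose running total never drifts."""
    # One frame ideally lasts ticks_per_second * rate_den / rate_num ticks,
    # which is usually not a whole number.
    numerator = ticks_per_second * rate_den
    remainder = 0
    for _ in range(frames):
        # Add what the previous frame left over before dividing again
        duration, remainder = divmod(numerator + remainder, rate_num)
        yield duration

# 59.94 Hz (60000/1001) with microsecond ticks
print(list(frame_durations(60000, 1001, 1_000_000, 6)))
# [16683, 16683, 16684, 16683, 16683, 16684]
```

Over 60000 frames the durations add up to exactly 1001 seconds, so nothing drifts the way it would with plain rounding.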

Or just wing it and round it.

That’s backwards. Before people even knew about KiB, or before it even existed(?), the drive companies pulled that scam that 1000 B = 1 kB. So now it’s no longer a scam per se, just IMO misleading.