On the eve of Lemmy.zip turning one week old, and following requests from members of the community, I’m putting together a post to show how Lemmy.zip is being run, the resources it takes up on the back end, and the day-to-day issues I’ve come across and resolved.

Hardware

Firstly, the setting up of Lemmy.zip.

Lemmy.zip (the domain) was purchased on Google Domains (apparently now sold off to Squarespace?), and I have the domain for two years.

Secondly, the cloud platform is Hetzner Cloud. The server is based in Falkenstein, Germany. The server “name” is CX21 - it’s an Intel CPU with 2 vCPUs, 4GB of RAM, a 40GB SSD and 20TB of outgoing traffic.

After setting up SSH, I followed the Ansible guide to set up Lemmy, which got the instance set up pretty much instantly. Great success… or so I thought!

Setup

The first thing I did was try federating with some other instances, which appeared to work straight away. Great. I tried some of the settings, added a logo, turned on user registration and email validation, added a site bio etc.

Then I tried logging in and out. Oh no. I couldn’t log back in with the Admin account, because email verification was turned on and I hadn’t verified my email! Now, I know how to fix this via SQL commands, but at the time I had no clue. I also wasn’t receiving any emails from Lemmy.zip. After some googling, it turns out Hetzner blocks port 25, the default SMTP port that postfix (the piece of software that handles emails) sends on. So now I was locked out with seemingly no way to get back in. Whoops.

So I went and deleted the server and started from scratch. I reinstalled Lemmy, set up the instance again and made sure email verification was off this time. I posted in a few places about the server, and suddenly people were joining!

The next step was to get email working. I did try SendGrid for emails first, who immediately refused to verify me and still, a week later, have not replied to my support request.

Next I tried Mailersend, who had quite a complex validation but did finally approve me. Then I had to figure out how to get the server to send emails using the Mailersend details.

After a couple of hours of testing, it turns out you only need to add the details to the lemmy config.hjson file, and you don’t need to alter anything in the postfix container. I re-uploaded Lemmy, set email validation to true, and created a test account - boom! Email received.

For the most part, that was the first 24 hours done. I think by the end of the first day we had ~20 users, which I was really excited about.

Stats

As I’m typing this, we have 369 (nice) users, which is incredible. At one point, we even made it onto the recommended servers list. However, we’ve since been knocked off the list because the uptime dropped after I had to restart the server a few times. Still, another moment I was really proud of.

Most of the week has been quite smooth in all honesty, until today when I had a few reports that users couldn’t register or log in to their accounts. It took quite a while to track down the issue, as the logs were not really giving anything away. Finally, I saw a line in the log which said api_routes_websocket: email_send_failed: Connection error: timed out

(It literally took me 3 hours to find this one line. Tip: set the logging level to warn rather than the default info if you want any chance of finding errors on a busy instance!)
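If the logs have already been written at info level, filtering them after the fact can save some scrolling. Here is a minimal Python sketch of that idea. It assumes each log line contains its level as an upper-case word such as WARN or ERROR, which may not match the exact Lemmy log format, and the function name `interesting_lines` is made up for illustration.

```python
import re
from typing import Iterable, Iterator, Optional

# Assumption: the log level appears in each line as an upper-case word.
LEVEL_PATTERN = re.compile(r"\b(WARN|ERROR)\b")


def interesting_lines(lines: Iterable[str], needle: Optional[str] = None) -> Iterator[str]:
    """Yield lines at WARN/ERROR level, plus any line containing `needle`."""
    for line in lines:
        matches_level = LEVEL_PATTERN.search(line) is not None
        matches_needle = needle is not None and needle in line
        if matches_level or matches_needle:
            yield line.rstrip("\n")
```

Passing an open log file as `lines` and something like `needle="email_send_failed"` would surface lines like the one above without reading everything else.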

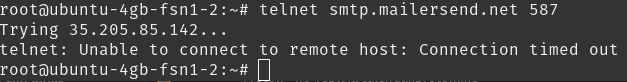

With a bit of googling, I was able to check my connection to the Mailersend SMTP server and noticed that the telnet connection was timing out, which indicates that port 587 is being blocked somewhere in the chain. I have emailed Mailersend support, but with it being a Saturday I’m not expecting a response for a while.
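For anyone wanting to run the same kind of check without telnet, here is a minimal Python sketch that simply tries to open a TCP connection to a host and port and reports whether it succeeded. The function name `can_connect` is made up for this example, and you would need to supply your own mail provider’s SMTP hostname.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers timeouts, refused connections and DNS failures alike.
        return False
```

Calling `can_connect(your_smtp_host, 587)` from the server itself and getting `False` after the full timeout would be consistent with the port being blocked somewhere along the way, though it cannot tell you where.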

Before Mailersend stopped working, here is what the email stats look like:

You can see the expected tail off as Reddit opened back up, but then you can see emails drop to 0.

So with some frantic googling, I’ve switched (hopefully temporarily) to another provider, and emails are working again. Phew.

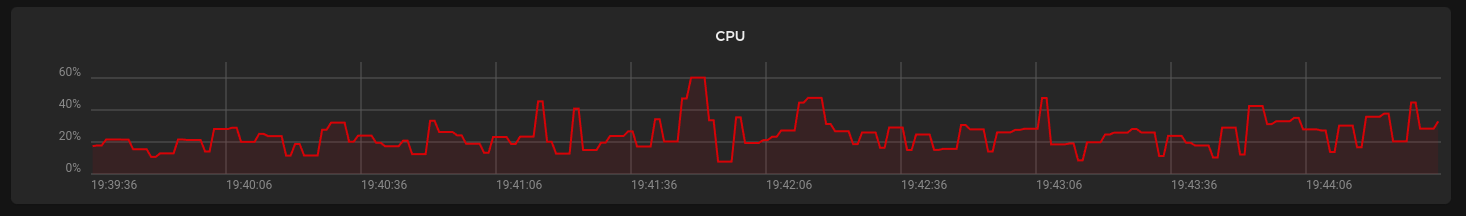

In terms of server performance, have some fancy graphs:

Server performance over the last week. The three big spikes relate to me doing something intensive on the server, rather than anything Lemmy is doing. Usage is mostly around 50% which is great, and gives us lots of growing room still.

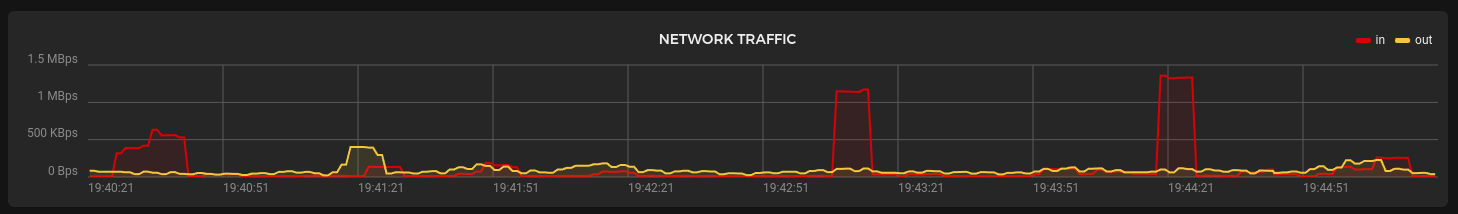

Here are some other graphs to look at:

Live server usage as I’m writing this

Live network usage

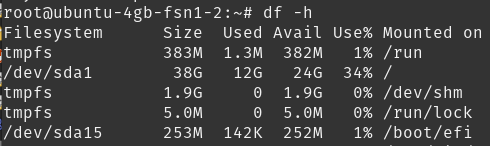

Storage space used:

Storage used out of the 40GB - 36% used.

There was an issue with the pictrs container (the software that uploads and manages photos) writing a log that had grown to about 7GB before I noticed. That has been fixed by switching to a rotating log file rather than one big file.
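A runaway file like that is easier to catch with a quick scan for anything unusually large. The following is only a rough Python sketch of that idea, not how the issue was actually found here. The function name `files_larger_than` and the choice of directory to scan are assumptions for illustration.

```python
import os
from typing import List, Tuple


def files_larger_than(root: str, min_bytes: int) -> List[Tuple[int, str]]:
    """Return (size, path) pairs for files under `root` of at least `min_bytes`, largest first."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                size = os.path.getsize(path)
            except OSError:
                # File vanished or is unreadable; skip it rather than abort the scan.
                continue
            if size >= min_bytes:
                found.append((size, path))
    return sorted(found, reverse=True)
```

Run against wherever your containers keep their data and logs, with a threshold of something like `1024 ** 3` (1GB), this would list any files worth a closer look.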

Other stuff

Thought I’d add some stuff I’ve liked from the last week. Firstly, if you haven’t already subscribed to the Starfield community and have an interest in the game, I urge you to do so.

A community I’ve quite enjoyed too is Dad jokes - definitely worth checking out if you want a laugh.

End

Hopefully that’s been interesting for people - if there is anything you’d like to see more on, let me know. Happy to add more detail (where I can!)

Anyone is welcome to send me a message on here, I have no issues talking more privately. I have also set up an email address ([email protected]) for anyone who is locked out of an account or needs to share personal details and isn’t comfortable using this platform to do so.

I think the one thing I missed from that post was thanking the community for being here - it would be rather quiet on my own!

In terms of this instance, I think we could stretch to 800 - 1000 users, assuming a couple of things:

Photos take up a lot of storage, so my main concern is hitting the 40GB cap. Storage can get expensive, so that needs taking into account. Keeping local communities small ties into that - photos, banners, logos etc. are hosted by the instance the community was created on, so for communities created elsewhere those storage costs fall on the other instance.

The devs have also said performance should be improved with the next release, so I’ve factored that in too.

I appreciate any donation suggestions. At current usage, the server is paid up for 12 months and I don’t want people to spend their hard-earned money at this stage. I also feel I need a proper recognition process in place. I’m looking at setting up a thank you page for those who do donate in the future. At this stage I would just feel a bit guilty with no way to say thank you other than just saying thank you. I hope that makes sense!

40GB is a bit too low for having image hosting on the main instance. I would suggest considering a secondary VPS geared towards storage space. From a quick search, I’ve seen most providers offer a few TB of storage for about the price of a Netflix subscription. The optimal way would be a CDN, but I think that’s overkill for the time being.

Yup, fully agree. We’re currently sat at 44% storage usage, so I’m looking at switching (probably to Backblaze) in the near future, while there is still time and space to make the move.

I need to upgrade the pict-rs database first. That looks to be relatively straightforward (fully automatic with any luck), and then I should be in a position to try migrating the data after a backup.