- cross-posted to:

- [email protected]

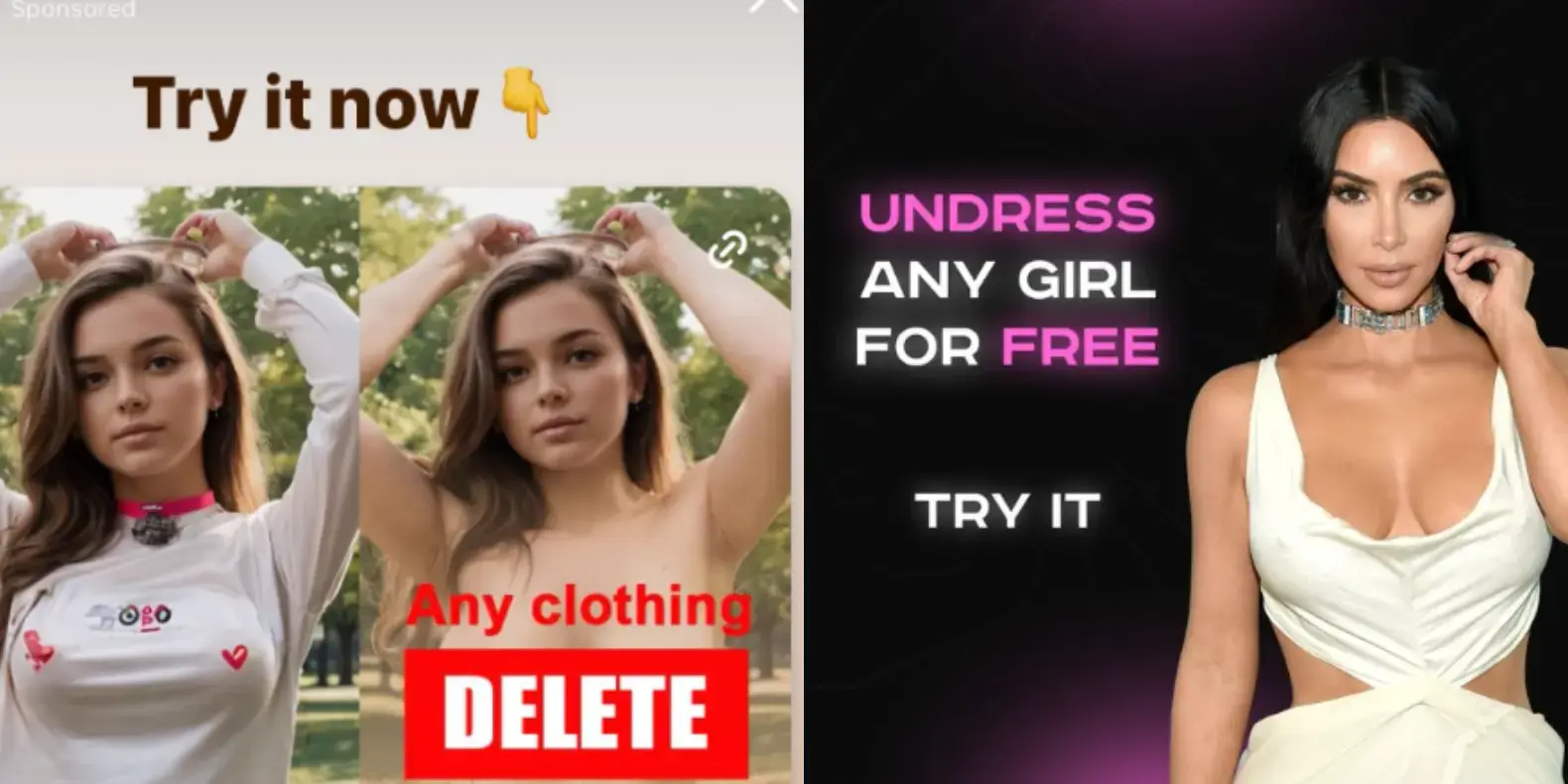

Instagram is profiting from several ads that invite people to create nonconsensual nude images with AI image generation apps, once again showing that some of the most harmful applications of AI tools are not hidden in the dark corners of the internet, but are actively promoted to users by social media companies unable or unwilling to enforce their policies about who can buy ads on their platforms.

While parent company Meta’s Ad Library, which archives ads on its platforms, who paid for them, and where and when they were posted, shows that the company has taken down several of these ads previously, many ads that explicitly invited users to create nudes, along with some of the accounts that bought them, stayed up until I reached out to Meta for comment. Some of these ads were for the best-known nonconsensual “undress” or “nudify” services on the internet.

God damn, I hate this fucking AI bullshit so god damn much.

God, I hate capitalism.

You are free to invent a better system. So far, nobody has.

That’s highly subjective, but the fascinating book *The Dawn of Everything* argues otherwise. There are even parts about the anthropological evidence that some peoples just up and changed systems every so often (yes, non-violently). Our problem as people in the modern era is that many can’t imagine anything else, not that no one ever did.

What kind of economic system does the author of this book propose?

That's an unintentional strawman, and it misses the point.

An economy is but a subsystem that serves an organized society.

Not every society requires an economy; there are many ways to organize, and the original foundational ideas go back to ancient Greece. Read up about them.

The people with wealth and power have every incentive to keep things as they are. They own the planet's resources, the means of production. They lobby for laws.

To think we're waiting on one person to have “a better idea” for things to change is incredibly naive.

I don’t know how the system will change or what the next one will look like, but the current one is mathematically unsustainable for another century.

It doesn’t. Graeber was an anthropologist and Wengrow is an archaeologist. It’s a review of existing evidence from past civilizations (the diversity of which most people are hugely ignorant about), making the case that the most common representations of “civilization” and “progress” are severely limited, probably to a detrimental extent, since we can often only base our conceptions of what is possible on what we know.

God, this argument. It's such history-washing to insist that no other functioning system where people have been happy has ever existed. People can't even imagine life without capitalism.

Capitalism enforces itself. It's not pervasive because “it's the best we can do”.

Define ‘better’. I’m not sure that word means what you think it means.

Less worse.

Surely, in a liberal democracy, unfettered capitalism is restrained by laws. I think a big problem we have now is a combination of regulatory capture and (sometimes AI-generated) targeted, emotional, populist advertising by political brands.

Many people have suggested better systems. They just haven’t been implemented. And even if they hadn’t, people should still be allowed to criticise the current system, if only to get the discussion started on how to improve it.

I can’t help but wonder how, in the long term, deepfakes are going to change society. I’ve seen this article making the rounds on other social media, and inevitably some dude shows up claiming that this will make nudes more acceptable, because there will be no way to know whether a nude is deepfaked or not. It’s sadly a rather privileged take from someone who faces no possible consequences from nude photos of themselves on the internet, but I do think that in the long run (20+ years) they might be right. Unfortunately, between now and some hypothetical then, many women, POC, and other folks will get fired, harassed, blackmailed, and otherwise hurt by people using tools like these to make fake nude images of them.

But it also makes me think a lot about fake news and AI, and how we’ve increasingly been interacting in a world in which “real” things are just harder to find. Want to search for someone’s actual opinion on something? Too bad, for-profit companies don’t want that, and instead you’re gonna get an AI-generated website spun up by a fake alias that offers a “best of” list where their product is the first option. Want to understand an issue better? Too bad, political interests are throwing money left and right at news platforms and using AI to write biased articles that poison the well with information meant to emotionally charge you to their side. Pretty soon you’re going to have no idea whether pictures or videos of events show things that really happened, and inevitably some of them will be viral marketing or other forms of coercion.

It’s hard to see all these misuses of information and technology, especially ones like this that are clearly malicious, alongside the complete failure of governments and corporations to regulate or stop them, and not wonder how much worse it needs to get before people bother to take action.

Flat earthers on the rise. I can only trust what I see with my eyes, the earth is flat!

How will this affect the courts? How can evidence be trusted?

what

I believe Tim means to say that the spread of misinformation can be linked to the rise of Flat Earthers: if we can only trust what we see before us, and we see a flat horizon, we can directly interpret that sight to mean that the Earth is flat. Thus, if we cannot trust our own eyes and ears, how can future courtroom evidence be trusted?

“Up to the Twentieth Century, reality was everything humans could touch, smell, see, and hear. Since the initial publication of the chart of the electromagnetic spectrum, humans have learned that what they can touch, smell, see, and hear is less than one-millionth of reality.” -Bucky Fuller

^ basically that

Exactly

Bucky Fuller

Now I know the source of that sample in the Incubus song “New Skin”. I’ve been curious about that for two decades. Thanks!

What’s the use of autonomy when a button does it all?

Time to go back to books for information.

I don’t know about you, but I started to notice that not everything that was printed on paper was truthful when I was around ten or eleven years old.

Ok, but acting like you can’t trust any sources of info on anything is pretty destructive also. There are still some fairly reliable sources out there. Dismiss everything and you’re left with conspiracy theories.

In the long run everything might be false, even your own memory changes over time and may be affected by external forces.

So maybe there will be no way to tell the truth except by experiencing it firsthand, and we will once again live like the ancient Greeks, pondering things.

To be fair, I hope that science and critical thinking might help us distinguish what is true from what is not, but that would only apply to abstract things, as all the concrete things might be fabricated.

I don’t remember saying all books were good. But it’s often possible to find well edited and curated material in print.

There are already AI-written books flooding the market, not to mention other forms of written misinformation.

They still seem relatively easy to filter out compared to the oceans of SEO nonsense online. If you walk into a library you won’t be struggling to find your way past AI crap.

The flood of AI generated anything is really annoying when looking for an actual human created source.

Thank god Amazon only allows bots to publish 3 books per day. They saved humanity!

I think AI might, eventually, stop people from posting their entire lives.

Not having a ton of data floating around about your looks, your voice, that’s the only way to protect yourself from ai generated shit.

What if, one day, the people doctoring images like that ended up with a fake, doctored profile photo of themselves on a dating app? AI writes a tailored bio for the victim to swipe on, should that victim be on a dating app. If the match is made, the profile reveals both the perp's AI-doctored image of the victim and the perp's real face.

I think people are going to get very good at identifying fakes. Only older people will be tricked.

This one’s fixable. Just hold Meta accountable. It should be illegal for them not to manually review ads when they're submitted.

It makes me sick to my stomach. Stay safe, everyone.

Why am I not surprised?

I wouldn’t have thought to train an LLM to create nudes, say nothing about that when presenting the app to Apple for App Store review, and then advertise that feature elsewhere. Damn.