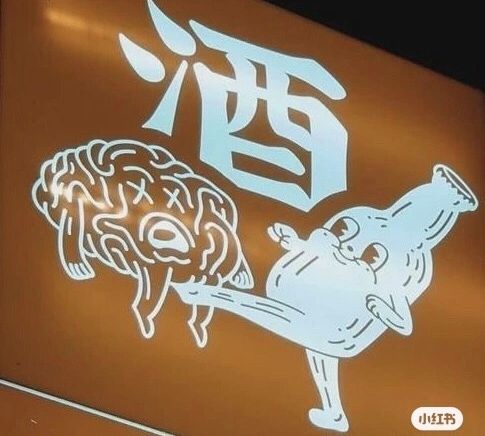

it is fucking priceless that an innovation that contained such simplicities as “don’t use 32-bit weights when tokenizing petabytes of data” and “compress your hash tables” sent the stock exchange into ‘the west has fallen’ mode. I don’t intend to take away from that, it’s so fucking funny

This is not the rights issue, this is not the labor issue, this is not the merits issue, this is not even the philosophical issue. This is the cognitive issue. When not exercised, parts of your brain will atrophy. You will start to outsource your thinking to the black box. You are not built different. It is the expected effect.

I am not saying this is happening on this forum, or even that there are tendencies close to this here, but I preemptively want to make sure it gets across because it fucked me up for a good bit. From late 2023 through early 2024 I found myself leaning into both AI images for character conceptualization and AI coding for my general workflow. I do not recommend this in the slightest.

For the former, I found that in retrospect, the AI image generation reified elements into the characters I did not intend and later regretted. For the latter, it essentially kneecapped my ability to produce code for myself until I began to wean off of it. I am a college student. I was in multiple classes where I was supposed to be actively learning these things. Deferring to AI essentially nullified that while also regressing my abilities. If you don’t keep yourself sharp, you will go dull.

If you don’t mind that or don’t feel it is personally worth it to learn these skills besides the very very basics and shallows, go ahead, that’s a different conversation but this one does not apply to you. I just want to warn those who did not develop their position on AI beyond “the most annoying people in the world are in charge of it and/or pushing it” (a position that, when deployed by otherwise-knowledgeable communists, is correct 95% of the time) that this is something you will have to be cognizant of. The brain responds to the unknowable cube by deferring to it. Stay vigilant.

Honestly, overuse of AI in the West is likely going to compound our societal incompetence and accelerate our decline

Hell yeah

Yea, it gets said about every generation with a new technology going back to writing, but I really feel like this is the one that will actually sink us.

Every other technology going back to writing still has a learning feedback loop. This particular technology interrupts the learning process and replaces it with what we currently call prompt engineering - a circular self-contained process that is limited by the user’s existing level of learning, does not add to the user’s level of learning, and explicitly prevents the user from doing the learning.

Possibly even the downfall of the elites in the global south as well, since they will likely follow the West and over-rely on it.

Can this AI power a smart speaker that can actually reliably manage timers and alarms, manage my lights, play music, convert units, and find my TV remote? No?

That’s literally all I’ve ever wanted out of any of this and nobody has been able to pull it off. Every single option is awful, takes like 10 seconds to respond for no reason other than to upload my whole shit directly to the NSA, and has maybe a 1 in 3 chance of being so comically wrong that it’s a nuisance.

“Alexa, set a 5 minute tea timer.” “Buying 5 kilograms of Earl Grey Tea on Amazon.” “NO NO NO NO”

“Hey Google, what’s 1/4 cup in ml?” “Playing the Quarter Life Crisis podcast on Spotify.” “NO NO NO NO”

“Buying 5kg of Earl Grey TNT on the darknet” “Hahaha, YES YESS.”

Delivering 5tons of Earl Grey in T-MINUS 5 seconds.

“WAIT NO LIKE THIS ALEXA NO PLEASE” “I’m sorry Chud, your order has been fulfilled.”

longmont potion castle ass AI: Our driver is already en route to you, sir. I am looking right here at your digital signature.

I’ve got hardware ready to go, but trying to figure out proxmox has me like

If it’s any consolation, I have never used proxmox for it. I’m rawdogging docker on an Ubuntu server

Just as god intended.

Tbf I do the same with my jellyfin server

Cute tortle

Still needs the voice recognition that responds to “computer?”

Home assistant has that, and with some knowhow you can change it to whatever you want. My assistant is named Sorcha and has a Scottish accent.

I’ve been wondering about dicking with this. Assume I’m somewhat lazy, approximately technically competent but a slow worker, and prone to dropping projects that take more than a week.

Would I be able to make something for the kitchen which can like:

- Set multiple labelled timers, and announce milestones (e.g. ‘time one hour for potatoes, notify at half an hour’, ‘time 10 minutes for grilling’)

- Be fed a recipe and recite steps navigating forward and backwards when prompted e.g. ‘next’ ‘last’ ‘next 3’ etc

- Automatically convert from barbarian units

- email me notes I make at the end of the day (like accumulate them all like a mailing list)

Or is this still a pipe dream?

The answer is that it will take work, but not as much as you may think. Some of the especially niche things may not be possible; I’ll address them one by one.

> Multiple labelled timers

Yes!

> Announce milestones

I don’t think so, but you can just set two timers. Timers are new, so that may be a feature in the future.
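The two-timer workaround can be sketched in plain Python. This is not Home Assistant code: `Announcement` and `expand_timer` are made-up names, and the sketch only assumes you have some way to speak a message after a delay.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Announcement:
    at_seconds: int  # seconds after the timer starts
    message: str     # what the assistant should say


def expand_timer(label, total_seconds, milestones=()):
    """Turn one labelled timer plus milestones into a flat, sorted schedule.

    Each milestone becomes its own "timer", which is the workaround
    described above: one timer per thing you want announced.
    """
    events = []
    for elapsed in milestones:
        if not 0 < elapsed < total_seconds:
            raise ValueError(f"milestone {elapsed}s is outside the timer")
        remaining_min = (total_seconds - elapsed) // 60
        events.append(Announcement(elapsed, f"{label}: {remaining_min} min left"))
    events.append(Announcement(total_seconds, f"{label}: done"))
    return sorted(events, key=lambda event: event.at_seconds)


# "time one hour for potatoes, notify at half an hour"
schedule = expand_timer("potatoes", 3600, milestones=[1800])
for event in schedule:
    print(event.at_seconds, event.message)
```

Each entry in `schedule` would then be handed to whatever timer mechanism you actually use.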

> Be fed a recipe

I have never tried this, but it does integrate with grocy, so maybe.
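If the integration route doesn’t pan out, the navigation part on its own is small. A rough sketch, assuming the recipe is already a list of step strings and that ‘last’ means ‘go back one step’; `RecipeReader` is a hypothetical helper, not part of Home Assistant or grocy.

```python
class RecipeReader:
    """Keeps a cursor into a list of recipe steps and moves it on command."""

    def __init__(self, steps):
        if not steps:
            raise ValueError("a recipe needs at least one step")
        self.steps = list(steps)
        self.index = 0

    def current(self):
        return f"Step {self.index + 1}: {self.steps[self.index]}"

    def handle(self, command):
        """Accepts 'next', 'last', 'next 3', 'last 2', and so on."""
        words = command.lower().split()
        if not words or words[0] not in ("next", "last"):
            raise ValueError(f"unrecognised command: {command!r}")
        count = int(words[1]) if len(words) > 1 else 1
        delta = count if words[0] == "next" else -count
        # Clamp so that overshooting stops at the first or last step.
        self.index = max(0, min(len(self.steps) - 1, self.index + delta))
        return self.current()


reader = RecipeReader(["Boil water", "Add potatoes", "Drain", "Mash"])
print(reader.handle("next"))
print(reader.handle("next 3"))
print(reader.handle("last"))
```

The returned string is what you would pass to text-to-speech.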

> Automatically convert units

Officially, probably not, but I have done this before and it has worked just by asking the llm assistant.
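For the common kitchen volumes you don’t strictly need an LLM; a lookup table does it. A minimal sketch, assuming US customary units (a UK or metric cup is a different size); the unit names and `to_ml` are illustrative, not anything Home Assistant ships.

```python
ML_PER_US_FLOZ = 29.5735295625  # exact definition of the US fluid ounce in ml

# Each unit expressed in US fluid ounces.
FLOZ_PER_UNIT = {
    "floz": 1.0,
    "cup": 8.0,
    "tbsp": 0.5,
    "tsp": 1.0 / 6.0,
}


def to_ml(amount, unit):
    """Convert a US customary volume to millilitres."""
    try:
        floz = amount * FLOZ_PER_UNIT[unit]
    except KeyError:
        raise ValueError(f"unknown unit: {unit!r}") from None
    return floz * ML_PER_US_FLOZ


# "what's 1/4 cup in ml?"
print(f"{to_ml(0.25, 'cup'):.0f} ml")
```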

> Email me notes at the end of the day.

I have never done this, and it would take some scripting, but I am willing to bet that it can be done. Someone might have a script for it in the forums.
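The scripting involved is probably modest. A sketch using only the Python standard library, assuming the notes have already been collected as a list of strings; `build_digest` and the example.com addresses are placeholders, and actually sending the message depends on whatever SMTP server you have access to.

```python
from datetime import date
from email.message import EmailMessage


def build_digest(notes, day, sender, recipient):
    """Bundle the day's notes into a single plain-text email."""
    msg = EmailMessage()
    msg["Subject"] = f"Kitchen notes for {day.isoformat()}"
    msg["From"] = sender
    msg["To"] = recipient
    body = "\n".join(f"- {note}" for note in notes) or "No notes today."
    msg.set_content(body)
    return msg


digest = build_digest(
    ["Potatoes needed 10 more minutes", "Halve the salt next time"],
    date(2025, 1, 31),
    "kitchen@example.com",  # placeholder address
    "me@example.com",       # placeholder address
)
print(digest["Subject"])

# To send it, something like this would work against a local mail relay:
#   import smtplib
#   with smtplib.SMTP("localhost") as smtp:
#       smtp.send_message(digest)
```

Run from a nightly cron job or an automation, this gives the mailing-list-style roundup.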

Ultimately, home assistant is not an all-in-one solution. It is a unified front end to connect smart home devices and control them. Everything else requires integrations and add-ons, of which there are many. There are lots of tools for automation that don’t require scripting at all, and if you’re willing to code a little it becomes exponentially more powerful. I love it, it helps with my disability, and I build my own devices to connect to it. Give it a shot if you have some spare hardware. To do what you want, you will need a computer, a GPU (at least an RTX 3060), and a speakerphone of some kind.

I can code at a basic level. Like I can make and ship a webapp with some tears.

Running a server with a GPU is probably a bit high cost, between the build and the electricity (~50c per kWh).

I wasn’t aware running voice recognition cost so much in ?vector calculations? I remember trying some Google thing at some point and finding it utterly hilarious that they thought it was ready for home use since you couldn’t script cues yourself and they seemed to have a very 20 something American man view of what working in the kitchen entails. No real capacity for making multiple things at once, tweaking recipes and saving them, etc

The GPU is for the LLM; voice recognition is easy for any modern PC. You don’t need to use an LLM, but it does give you some flexibility in the commands you can give it. Without an LLM, home assistant can only use sentence matching. Sentence matching in HA is pretty good, don’t get me wrong, but LLMs are a level above.
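To make the difference concrete, here is a toy version of sentence matching in Python. It is not how Home Assistant implements it, just an illustration of the idea: fixed templates with slots, where anything that doesn’t fit a template is rejected. `compile_template`, `INTENTS` and `match` are invented for this sketch.

```python
import re


def compile_template(template):
    """Turn 'set a {minutes} minute {label} timer' into a regex.

    Literal text is escaped; each {slot} becomes a named capture group.
    """
    parts = re.split(r"\{(\w+)\}", template)  # odd indices are slot names
    pattern = "".join(
        f"(?P<{part}>.+?)" if i % 2 else re.escape(part)
        for i, part in enumerate(parts)
    )
    return re.compile(rf"^{pattern}$", re.IGNORECASE)


INTENTS = {
    "set_timer": compile_template("set a {minutes} minute {label} timer"),
}


def match(sentence):
    """Return (intent, slots) for the first matching template, else None."""
    for intent, pattern in INTENTS.items():
        found = pattern.match(sentence.strip())
        if found:
            return intent, found.groupdict()
    return None


print(match("Set a 5 minute tea timer"))
print(match("Put the kettle timer on for 5 minutes"))
```

The second sentence means the same thing to a person but fits no template, which is the flexibility gap an LLM is meant to cover.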

The home assistant scripting language is just yaml, really easy syntax.

Try MyCroft AI

I just want a dictation and reminder engine that I can talk to in nearly any situation pleeeeease that’s all.

The people in Dune were about this one

I’m gonna ask my AI what I should do about this.

“A strange game. The only winning move is not to play.”

i’ve yelled at a couple cs students about learning things for real and they’ve at least stopped telling me about it

it’s tolerable to use it to get a lead on something you don’t understand well enough to formulate search terms but you have to do extra work to verify anything it spits out

> This is the cognitive issue. When not exercised, parts of your brain will atrophy. You will start to outsource your thinking to the black box. You are not built different. It is the expected effect.

Tools not systems

> AI coding for my general workflow. I do not recommend this in the slightest.

Yes, I use stack overflow like God intended.

Always has been

I’ve run some underwhelming local LLMs and done a bit of playing with the commercial offerings.

I agree with this post. My experiments are on hold, though I’m curious to just have a poke around DeepSeek’s stuff just to get an idea of how it behaves.

I am most concerned with next generation devices that come with this stuff built in. There’s a reactionary sinophobe on youtube who produced a video with some pretty interesting talking points: since the goal is to have these “AI assistants” basically observe everything you do with your device (and they are black boxes that rely on cloud-hosted infrastructure), this effectively negates E2E encryption. I am convinced by these arguments and in that respect the future looks particularly bleak. Having a wrongthink censor that can read all your inputs before you’ve even sent them and can flag you for closer surveillance and logging, combined with the three letter agencies really “chilling out” about eg Apple’s refusal to assist in decrypting iPhones, it all looks quite fucked.

There are obviously some use cases where LLMs are sort of unobjectionable, but even then, as OP points out, we often ignore the way our tools shape our minds. People using them as surrogates for human interaction etc are a particularly sad case.

Even if you accept the (flawed) premise that these machines contain a spark of consciousness, what does it say about us that we would spin up one-time single use minds to exploit for a labor task and then terminate them? I don’t have a solid analysis but it smells bad to me.

Also China’s efforts effectively represent a more industrial scale iteration of what the independent hacker and open-source communities have been doing anyway, proving that the moat doesn’t really exist and that continuing to try and use brute force (scale) to make these tools “better” is inefficient and tunnel-visioned.

Between this and the links shared with me recently about China’s space efforts, I am simply left disappointed that we remain in competition and opposition to more than half of the world when cooperation could have saved us a lot of time, energy, water, etc. It’s sad and a shame.

I cherish my coding ability. I don’t mind playing with an LLM to generate some boilerplate to have a look at, but the idea that people who cannot even assess the function of the code that is generated are putting this stuff into production is really sad. We haven’t exactly solved the halting problem yet have we? There’s no general way for these machines to accurately assess code to determine that it does the task it is intended to do without side effects or corner cases that fail. In the general case these are undecidable problems, not just hard ones, and we continue to ignore that fact.

The hype driving this is clear startup bro slick talk grifting shit. Yes it’s impressive that we can build these things but they are being misapplied and deferred to as authorities on topics by people who consider themselves to be otherwise Very Smart People. It’s… in a word… pathetic.

> Between this and the links shared with me recently about China’s space efforts, I am simply left disappointed that we remain in competition and opposition to more than half of the world when cooperation could have saved us a lot of time, energy, water, etc. It’s sad and a shame.

The gigawatts of wasted electricity :(

We can only laugh or we’d never stop crying.

I was surprised the response wasn’t “okay, China made this on 1/50 the budget, so if we do what they did but throw double our budget at it, we can make something 100 times better, and we’ll be so far advanced that we’ll be opening Walmarts on Ganymede next spring, we just need more Quadros, bro”

I’m excited that another country is continuing to research AI and LLMs without burning the planet to the max degree. The technology might have better uses in the future but commercializing it now, especially to the ridiculous degree we see in the US (why does my fridge need chatgpt?), is absolute folly if not a war crime on all future generations who will suffer from the unnecessary emissions.

Luckily ChatGPT and deepseek can’t plumb worth a fuck so I’m not too worried about it coming for my job.

Until robots develop the dexterity to crawl under a home, isolate broken plumbing, repair and test after, my job will be human only.

Honestly, plumbers stay winning. I do not recall a point in my medium-length life in which plumbers have not had more work than they can handle and have been able to live comfortably. Until DPRK exports butthole removal tech / juche magic, plumbers will continue to be the best.

LLMs could never get the drug use correct in order to work construction anyway

It may be a petty thing, but I hate how people rely on AI programs to make pfp’s and thumbnails. I’d rather get a shitty crayon drawing that you put even thirty seconds into.

The only ethical use of AI was when DougDoug made it try to beat Pajama Sam.

i’ve been feeling kinda wary of dougdoug lately bc he’s been saying some pretty cringe ai-bro things

that video is very funny though

this is me with google maps

Someone posted some studies on an earlier AI article that showed people’s ability to navigate based on landmarks and such was way worse if they relied on GPS. So what OP said tracks as far as skills regressing.

I don’t miss printing Mapquest directions out on paper before leaving on a cross-state trip though.

Look I can’t navigate worth shit in a car and I never will. Give me a map and a compass and I can orienteer through the wilderness, but put me in a car and I’ll get lost in a low density neighborhood.

There is “use the machine to write code for you” (foolish, a path to ruin) and there is “use the machine like a particularly incompetent coworker who nevertheless occasionally has an acceptable idea to iterate on”.

If you are already an expert, it is possible to interpret the hallucinations of the machine to avoid some pointless dead-end approaches. More importantly, you’ve had to phrase the problem in simple enough terms that it can’t go too wrong, so you’ve mostly just got a notebook that spits text at you. There’s enough bullshit in there that you cannot trust it or use it as is, but none of the ego attached that a coworker might have when you call their idea ridiculous.

Don’t use the machine to learn anything (it is trained on almost exclusively garbage), don’t use anything it spits out, don’t use it to “augment your abilities” (if you could identify the augmentation, you’d already have the ability). It is a rubber duck that does not need coffee.

If your code is so completely brainless that the plagiarism machine can produce it, you’re better off writing a code generator to just do it right rather than making a token generator play act as a VIM macro.

> don’t use it to “augment your abilities” (if you could identify the augmentation, you’d already have the ability)

I actually disagree with this take. I can work fine without LLMs, I’ve done it for a long time, but in my job i encounter tasks that are not production facing and don’t need the rigor of a robust software development lifecycle, such as making the occasional demo or doing some legacy system benchmarking. These tasks are usually not very difficult to do but they require me to write python code or whatever (i’m more of a c++ goblin), so I just have whatever the LLM of the day is write up some python functions for me and i paste them into my script that i build up and it works pretty well. I could sit there and search about for the right python syntax to filter a list or i can let the LLM do it because it’ll probably get it right and if it’s wrong it’s close enough that I can repair it.
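For reference, the list-filtering syntax in question looks roughly like this. The benchmark numbers and the 100 ms cutoff are made up for illustration.

```python
# Hypothetical latency samples from a benchmark run, in milliseconds.
samples_ms = [12.1, 250.0, 11.8, 13.4, 980.5]

# List comprehension: the usual idiom for filtering.
fast = [sample for sample in samples_ms if sample < 100]

# filter() with a lambda does the same thing, closer to C++'s copy_if.
also_fast = list(filter(lambda sample: sample < 100, samples_ms))

print(fast)
```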

Anyway these things are another (decadently power hungry) tool in the toolbag. I think it’s probably like a low double digit productivity boost for certain tasks I have, so nothing really as revolutionary as the claims being made about it, but I’m also not about to go write a code generator to hack together some python i’m never going to touch again.

Generating Python is a special case, first because there’s so god damn much of it in the training data and second because almost any stream of tokens is valid Python. 😉

The code generator remark is particularly aimed at the Copilot school of “generate this boilerplate 500 times with small variations” sludge, rather than toy projects and demo code. I do think it’s worth setting a fairly high baseline even with those (throwaway code today is production code tomorrow!) to make it easy to pick up and change, but I cannot begrudge anyone not wanting to sift through Python API docs.
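As a rough idea of what “write a code generator” means for that kind of repetitive boilerplate, here is a small sketch using `string.Template` from the standard library. The accessor shape and the field names are invented for the example; a real generator would be driven by a schema or similar.

```python
from string import Template

# One template, stamped out once per field name.
ACCESSOR = Template('''def get_$name(record):
    """Return the $name field of a record."""
    return record["$name"]
''')


def generate_accessors(field_names):
    """Emit Python source for one accessor function per field."""
    for name in field_names:
        if not name.isidentifier():
            raise ValueError(f"not a valid identifier: {name!r}")
    return "\n".join(ACCESSOR.substitute(name=name) for name in field_names)


source = generate_accessors(["id", "title", "author"])
print(source)
```

The output is deterministic, so the 500 small variations all come from one place you can fix.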