Unpopular opinion: humans are a deeply mimetic species. Copying is our very essence, and every limitation on it is entirely unnatural and limits human potential.

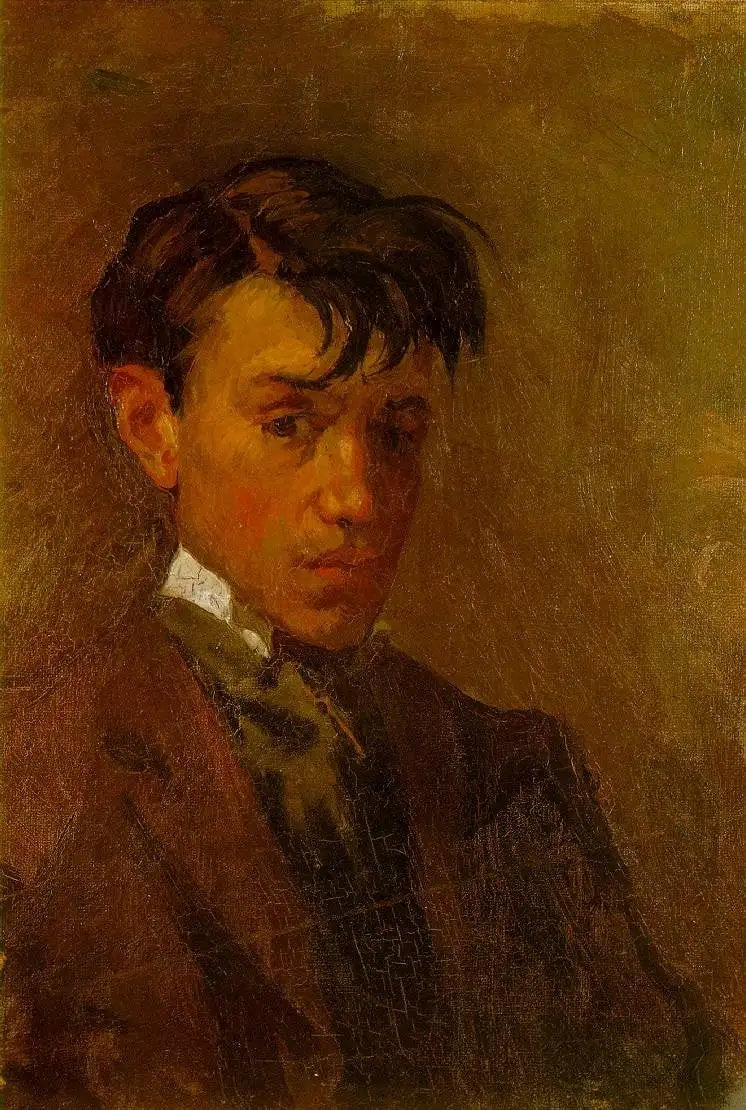

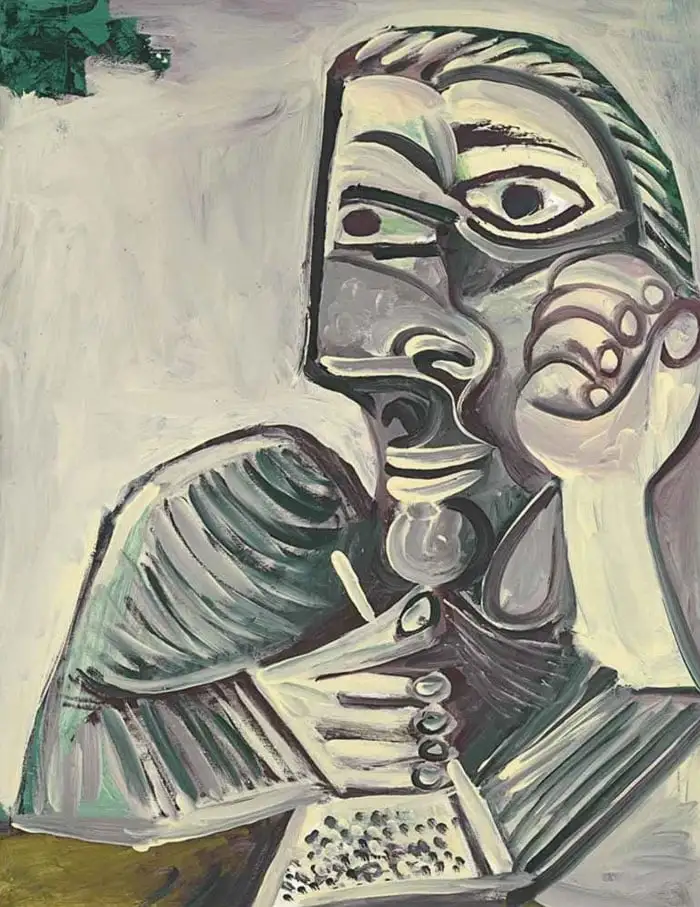

Art lives by imitation/inspiration AND reinterpretation of previous works. All the great artists study their predecessors first, THEN they create their own style.

Picasso self-portrait, 1896

Vs. 1971

AI is just a fucking copy-paste blender

Yes, even when people copy each other they don’t produce the same output. And some individuals are mighty eccentric, Picasso for instance. But most people stick almost entirely to what they see and differ only through the mistakes they make, not through intended originality. From the moment people are born they start copying everything they see. With heads full of mirror neurons we tend to live our lives exactly the same way, and the differences only stand out because they’re relative. From a distance we would all look, behave and be more or less the same.

Copyright should be abolished. I’m all in favor of supporting artists and creators: support whoever you like out of free will, but don’t limit others’ freedom to copy you. If we can’t copy what others have done before us, then our culture is not free. It should be an honor to be copied; it means others like your idea and want to use it too. That’s how humans have always lived, that’s how we progress, and it’s what has brought us this far. Let’s continue without bizarre copying limitations. If we can copy freely, culture is free: we can learn from each other, take each other’s ideas and creations, put them to use and expand upon them, sometimes inadvertently while trying to make an exact copy. This freedom will benefit all of us, and the opposite is true as well: intellectual property is to the detriment of us all.

If you don’t want your work to be used by others, keep it private. Don’t show it to anyone. Keep your invention in your cellar and let nobody enter. If you want to share your ideas and creations, please do so. But you can’t have your cake and eat it too. You can’t show what you’ve made and expect others to not use it as input and put it to use.

OpenAI when they steal data to train their AI: 😊🥰

OpenAI when their data gets stolen to train AI: 🤯😡🤬

They stole it first, fair and square.

It’s just the British museum all over again.

“We’re not done looking at it!”

At the point where it becomes possible to copy the entire British museum and hand it out to anyone who wants one, maybe it starts to be a good idea to do exactly that…

I made this.

The big difference is Deepseek is open sourced, which ALL of these models should be because they used our collective knowledge and culture to create them.

I like AI but the single biggest issue is how it is being gated off and abused by Capitalists for profit (It’s kind of their thing).

Open-weighted*

Can you elaborate on what you mean, for a layman?

In parallel to what Hawk wrote, AI image generation is similar. The idea is that through training you essentially produce an equation (really a bunch of weighted nodes, but functionally they boil down to a complicated equation) that can recognize a thing (say dogs), and can measure the likelihood any given image contains dogs.

If you run this equation backwards, it can take any image and show you how to make it look more like dogs. Do this for other categories of things. Now when you ask for a dog lying in front of a doghouse chewing on a bone, it generates some white noise (think “snow” on an old TV) and asks the math to make it look maximally like a dog, doghouse, bone and chewing at the same time, possibly repeating a few times until another pass doesn’t make it much more dog, doghouse, bone or chewing. That’s your generated image.
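A toy sketch of the “run the equation backwards” idea, in plain Python. Everything here is made up for illustration: a four-pixel “image”, hand-written templates standing in for what a model learned about dogs and bones, and a scoring equation that is just negative squared distance to those templates. It nudges white noise uphill on the combined score until another pass barely helps. This is closer to gradient ascent on a classifier than to how modern diffusion models are actually trained, so treat it as intuition, not an implementation.

```python
import random

# Toy sketch only: an "image" is a short list of pixel values in [0, 1], and each
# concept is a made-up template. A real model would have learned these from data.
PIXELS = 4
CONCEPTS = {
    "dog": [0.9, 0.1, 0.8, 0.2],
    "bone": [0.7, 0.3, 0.6, 0.4],
}


def score(image):
    """How much the image looks like ALL concepts at once (higher is better)."""
    return sum(
        -sum((p - t) ** 2 for p, t in zip(image, template))
        for template in CONCEPTS.values()
    )


def score_gradient(image):
    """Per-pixel direction that increases score (the derivative of score above)."""
    return [
        sum(-2 * (p - template[i]) for template in CONCEPTS.values())
        for i, p in enumerate(image)
    ]


def generate_image(steps=100, step_size=0.1, tolerance=1e-6, seed=0):
    rng = random.Random(seed)
    # Start from white noise, like "snow" on an old TV.
    image = [rng.random() for _ in range(PIXELS)]
    previous = score(image)
    for _ in range(steps):
        # Nudge every pixel toward "more dog and more bone at the same time".
        grad = score_gradient(image)
        image = [p + step_size * g for p, g in zip(image, grad)]
        current = score(image)
        # Stop once another pass barely improves the score.
        if current - previous < tolerance:
            break
        previous = current
    return image


print(generate_image())
```

The result lands between the two templates, which is “a dog and a bone at the same time” in miniature.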

The reason they have trouble with things like hands is because we have pictures of all kinds of hands at all kinds of scales in all kinds of positions, and the model doesn’t have actual hands to compare to, just thousands upon thousands of pictures that say they contain hands, from which it has to try to figure out what a hand even is through statistical analysis of examples.

LLMs do something similar, but with words. They have a huge number of examples of writing, many of them tagged with descriptors, and are essentially piecing together an equation for what language looks like from statistical analysis of examples. The technique used for LLMs will never be anything more than a sufficiently advanced Chinese Room, not without serious alterations. That however doesn’t mean it can’t be useful.
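The “statistical analysis of examples” part can be shown in miniature with a word-pair (bigram) counter. This is a deliberately crude stand-in: real LLMs use neural networks over tokens and look at far more than the previous word, and the function names below are hypothetical.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(texts):
    """Count how often each word follows each other word in the examples."""
    counts = defaultdict(Counter)
    for text in texts:
        words = text.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def generate_text(counts, start, length=8, seed=0):
    """Repeatedly pick a plausible next word given only the previous word."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(length - 1):
        options = counts.get(words[-1])
        if not options:
            break  # nothing ever followed this word in the examples
        # Choose in proportion to how often each word followed the last one.
        next_word = rng.choices(list(options), weights=list(options.values()))[0]
        words.append(next_word)
    return " ".join(words)


examples = ["the dog chews the bone", "the dog sleeps in the doghouse"]
model = train_bigrams(examples)
print(generate_text(model, "the"))
```

It only ever recombines word pairs it has seen, which is the Chinese Room point at toy scale.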

For example, one could hypothetically amass a bunch of anonymized medical imaging including confirmed diagnoses and a bunch of healthy imaging and train a machine learning model to identify signs of disease and put priority flags and notes about detected potential diseases on the images to help expedite treatment when needed. After it’s seen a few thousand times as many images as a real medical professional will see in their entire career it would even likely be more accurate than humans.
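A minimal sketch of just the flagging step, assuming a trained model already exists and outputs a disease probability per scan. The `Scan` type, the 0.5 threshold and the note text are all hypothetical; the point is that the model reorders the queue while a human still reviews every image.

```python
from dataclasses import dataclass


@dataclass
class Scan:
    scan_id: str
    disease_probability: float  # hypothetical model output between 0 and 1


def triage(scans, flag_threshold=0.5):
    """Put scans the model considers likely abnormal at the front of the queue."""
    ordered = sorted(scans, key=lambda s: s.disease_probability, reverse=True)
    worklist = []
    for scan in ordered:
        if scan.disease_probability >= flag_threshold:
            note = f"PRIORITY: possible disease (p={scan.disease_probability:.2f}), review first"
        else:
            note = "routine"
        worklist.append((scan.scan_id, note))
    return worklist


for scan_id, note in triage([Scan("a", 0.2), Scan("b", 0.9), Scan("c", 0.6)]):
    print(scan_id, note)
```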

The neural network is hundreds of billions of nodes connected to each other by connections of different strengths, or “weights”, just like our neurons. Open source weights means that they released the weights of the connections between the nodes, the blueprint of the neural network, if you will. It is not open source because they didn’t release the material that it was trained on.
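To make “releasing the weights” concrete: a tiny network with two inputs, two hidden nodes and one output is fully described by nine numbers (connection weights plus biases). Anyone holding those numbers can run it, but nothing in them tells you what data produced them. The values below are invented for illustration; a real LLM has billions of such numbers.

```python
import math

# Invented "released weights" for a tiny network: 2 inputs -> 2 hidden nodes -> 1 output.
WEIGHTS = {
    "hidden": [[0.5, -1.2], [0.8, 0.3]],  # hidden[j][i]: input i -> hidden node j
    "hidden_bias": [0.1, -0.2],
    "output": [1.5, -0.7],                # hidden node j -> output
    "output_bias": 0.05,
}


def forward(inputs, w=WEIGHTS):
    """Run the network using nothing but the published numbers."""
    hidden = [
        math.tanh(sum(weight * x for weight, x in zip(row, inputs)) + bias)
        for row, bias in zip(w["hidden"], w["hidden_bias"])
    ]
    return sum(weight * h for weight, h in zip(w["output"], hidden)) + w["output_bias"]


def count_parameters(w=WEIGHTS):
    """Count every number in the weight file (weights and biases)."""
    total = 0
    for value in w.values():
        if isinstance(value, list):
            total += sum(len(item) if isinstance(item, list) else 1 for item in value)
        else:
            total += 1
    return total


print(forward([1.0, 0.0]), count_parameters())
```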

Thanks.

Are there any models that are truly open source, where they have shown the datasets they were trained on?

Not that I know of

It is not open source because they didn’t release the material that it was trained on.

I’m not sure if I’m missing a definition here but open source usually means that anyone can use the source code under some or no conditions.

You can’t use the source code, just the neural network the source code generated.


I’m pretty sure open source means that the source code is open to see. I’m pretty sure there are open source things that you need to pay to use.

An LLM is an equation, fundamentally. Map words to numbers, run them through the equation, map the result back to words, and you have an LLM. If you’re curious, write a name generator using torch with an RNN (plenty of tutorials online) and you’ll have a good idea.
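A dependency-free sketch of the word-to-number-to-word loop, as a character-level RNN name generator. It is written in plain Python rather than torch so it runs anywhere, and the weights are random rather than trained, so the “names” it prints are gibberish; training would adjust exactly those matrices. All names here are hypothetical, and current LLMs use transformers rather than RNNs.

```python
import math
import random

CHARS = list("abcdefghijklmnopqrstuvwxyz.")  # "." marks the end of a name
TO_ID = {c: i for i, c in enumerate(CHARS)}
V, H = len(CHARS), 16  # vocabulary size, hidden-state size

_rng = random.Random(0)


def random_matrix(rows, cols):
    return [[_rng.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]


# The weights: untrained random numbers here. Training would tune exactly these.
W_xh = random_matrix(H, V)  # input -> hidden
W_hh = random_matrix(H, H)  # previous hidden -> hidden (the "memory")
W_hy = random_matrix(V, H)  # hidden -> score for each possible next character


def step(char, hidden):
    """One pass of the equation: character in, next-character probabilities out."""
    x = TO_ID[char]  # map the character to a number (one-hot index)
    # Multiplying W_xh by a one-hot vector just picks out column x.
    new_hidden = [
        math.tanh(W_xh[j][x] + sum(W_hh[j][k] * hidden[k] for k in range(H)))
        for j in range(H)
    ]
    logits = [sum(W_hy[v][j] * new_hidden[j] for j in range(H)) for v in range(V)]
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]  # softmax, shifted for stability
    total = sum(exps)
    return [e / total for e in exps], new_hidden


def sample_name(first_char, max_len=10, seed=0):
    sampler = random.Random(seed)
    hidden = [0.0] * H
    name, char = first_char, first_char
    for _ in range(max_len - 1):
        probs, hidden = step(char, hidden)
        char = sampler.choices(CHARS, weights=probs)[0]  # map the number back to a character
        if char == ".":
            break
        name += char
    return name


print(sample_name("a"))
```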

The parameters of the equation are referred to as weights. They release the weights but may not have released:

- source code for training

- the source code for inference / validation

- training data

- cleaning scripts

- logs, git history, development notes etc.

Open source is typically more concerned with the open nature of the code base to foster community engagement and less on the price of the resulting software.

Curiously, open-weight LLM development has somewhat flipped this on its head: the resulting software is freely accessible and distributed, but the source code and material are less accessible.

Yeah. That is true.

I wouldn’t say it’s the biggest issue. Even if access was free, we’d still have to contend with the extreme energy use, and the epistemic chaos of being able to generate convincing bullshit much quicker than it can be detected and flagged.

I think it’s a harmful product in general. We’re polluting our infosphere the same way we polluted our ecosphere, and in both cases there’s still folks who think “unequal access to polluting industries” is the biggest problem.

The energy use isn’t that extreme. A forward pass on a 7B model can be done on a MacBook.
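Back-of-the-envelope arithmetic for the 7B claim: the memory needed just to hold the weights. This assumes the weights are quantized (stored at 8 or 4 bits per number), which the comment doesn’t mention, and ignores activations and other runtime overhead.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Rough memory for the weights alone (ignores activations, KV cache, overhead)."""
    return num_params * bits_per_param / 8 / 1e9


for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: ~{weight_memory_gb(7e9, bits):.1f} GB")
# 7B model at 16-bit: ~14.0 GB
# 7B model at 8-bit: ~7.0 GB
# 7B model at 4-bit: ~3.5 GB
```

At 4 bits per weight that is a few gigabytes, which is laptop territory.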

If it’s code and you RAG over some docs you could probably get away with a 4B tbh.
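A toy sketch of what “RAG over some docs” means: pick the docs most relevant to the question and paste them into the prompt, so a small model doesn’t have to have memorized them. Real setups usually rank by embedding similarity; plain word overlap is swapped in here, the function names are hypothetical, and the actual model call is left out.

```python
import re


def tokenize(text):
    """Lowercase words (letters, digits, underscores) as a set."""
    return set(re.findall(r"[a-z0-9_]+", text.lower()))


def retrieve(question, docs, k=2):
    """Toy retriever: rank docs by how many words they share with the question."""
    q = tokenize(question)
    return sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)[:k]


def build_prompt(question, docs, k=2):
    """Paste the most relevant docs in front of the question for a small model."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return f"Answer using only these docs:\n{context}\n\nQuestion: {question}"


docs = [
    "requests.get sends an HTTP GET request",
    "json.loads parses a JSON string into Python objects",
    "os.path.join joins path components",
]
print(build_prompt("how do I parse a JSON string", docs, k=1))
```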

ML models use more energy than a simple model, but not that much more.

The reason large companies are using so much energy is that they are using absolutely massive models to do everything so they can market a product. If individuals used the right model to solve the right problem (size, training, feed it with context etc. ) there would be no real issue.

It’s important we don’t conflate the excellent progress we’ve made with transformers over the last decade with an unregulated market, bad company practices and limited consumer tech literacy.

TL;DR: LLM != search engine

All the data centers in the US combined use 4% of the electric load, and one of the main upsides to deepseek is that it requires much less energy to train (the main cost).

You’re right about this. I was commenting in the context of “intellectual property”.

we’d still have to contend with the extreme energy use,

Meanwhile, people running it on a Raspberry Pi: “I made it consume 1W less, which is a 30% improvement!”

and the epistemic chaos of being able to generate convincing bullshit much quicker than it can be detected and flagged.

It’s been this way long before modern AI.

The infosphere already turned to shit over 10 years ago when the internet started consolidating towards a few super large companies.

I love that the anti-AI crowd is struggling to update their talking points after DeepSeek has made most of them irrelevant.

You’re welcome to scroll my comment history to about 6 months ago where I was using the phrase “informational equivalent of Kessler Syndrome”, or talking about accurate attribution and faithful replication as being useful effects of the current copyright regime even if the rest of it is garbage.

Wow, you really have your head deep in something. I’m not sure if it’s deep in the sand, or deep up Sam Altman’s ass.

You think people have “talking points” against bullshit fountains, as if they’re needed? You think it’s a struggle to come up with reasons why they’re bad? The truth is that they’re absolutely useless at best, and actively harmful at worst. And no, this isn’t about the monstrous amounts of energy they use. It’s that they offer nothing of value.

“Gee whiz, thanks to this bullshit fountain I can research legal cases much more efficiently!” Oops, turns out the bullshit fountain just made up those precedents and now the judge is furious at me.

“Apple Intelligence will just summarize my texts for me!” Oops, the summaries were so wrong that they were actively harmful, and now Apple has been forced to turn off that feature.

“I’ll just have the LLM generate code for me!” Oops, now I have to spend a week debugging because the perfectly plausible code that was generated has a subtle logic error.

“Let me just search the web for something. Aha, I won’t be taken in by that AI summary at the top because I know that’s unreliable bullshit from the bullshit fountain. I’ll just scroll past that and click on the actual web pages.” Oops. Those actual web pages are now LLM-generated and filled with bullshit. I guess now I have to stop using the web and rely on printed encyclopedias from the time before LLMs to actually get verifiable facts. Winning!

No, I’m a socialist. I’m against big tech and the rich in general. But I don’t let my bias cause me to latch onto every criticism blindly. Even now, we’re talking about DeepSeek, which is the furthest thing from pro-big tech and also much more energy efficient, but you’re still deflecting back to your generic and irrelevant criticisms to try to apply them to this.

Your examples give away that you don’t have significant experience with AI. You know about Altman claims that have been debunked publicly, but obviously not much personal experience that would give you more than a superficial understanding of the basics beyond that.

you don’t have significant experience with AI

I have enough experience to know it’s utterly useless. If you keep looking for more experience once you’ve realized that, you’re in a cult.

Cool dude. Keep your head buried in the sand, and continue refusing to keep up with new technology. Surely it won’t backfire.

Ha! You think AI is “keeping up with technology”? It’s not, it’s a diversion. Technology continues to advance, but this sideshow has nothing to do with it. You keep on drinking that kool-aid. Surely there will be a use for the bullshit fountain, any day now!

Artists use our collective knowledge and culture in the same way. It’s just that some of them are whiny and complain when AI does their job faster and cheaper.

I am an artist & I agree, actually.

I do think it’s problematic that corpos are using AI to replace working artists, although that’s a systemic issue affecting a lot of disciplines.

That said, and I will get hate for this, there is a case to be made that if artists were more creative and interesting in general, they wouldn’t be so easily displaced by AI slop.

Yeah I mean faster and cheaper does not mean more creative.

Exactly. DeepSeek Mr Bean is sharing the answers with everyone, not hoarding them for his own gain.

Artists do the same shit and demand money for it.

Demand money for their work? THE AUDACITY OF THEM BITCH, THEY SHOULD WORK FOR FREE.

Artists create original content.

All art is derivative.

No. They create derivations of content they consumed prior. Most of them will even post their “inspirations” along with their derivations. And no one gives a shit.

Other people have used the words you wrote in other contexts before, therefore you stole their words.

Oh God, so did I! I’m doing it right now! I’m going to jail!

If it’s shit, then stop consuming it (no movies, no comics, no series, no books, NO ART).

OR

If it’s worthless, then anyone can easily do it. Just create it yourself, BUT without using a program that steals and mixes up previous artworks. You don’t need that, right?

But of course you work for free, right?

I don’t really. I don’t go to or buy any of those. Haven’t in decades. “Artists” like to think WAAAAAAY too highly of themselves and their work.

I don’t consume “art” from people who complain about AI “art” as I don’t buy furry commissions.

There’s a fundamental difference between a person learning from other people, and machines learning from other people, because the latter can be copied infinitely. One person can only generate so much output, while the machine can be scaled horizontally.

Irrelevant Human exceptionalism. Just because something is copyable doesn’t inherently make it worse. Just as something being difficult to obtain doesn’t make it inherently better.

“Irrelevant human exceptionalism”? Sorry I don’t want creatives to starve while a few rich people get ever richer.

Artists demanding money: “YOU BETTER PAY ME FOR THIS STUFF OR ELSE I’M GONNA… gonna… Idk, keep making it for free I guess?”