Hello all, and welcome to the April 2024 Server update.

What a busy month we’ve had! I hope you enjoyed the April Fools’ IPO announcement. Obviously, we’re not going public.

New Communities

We’ve had a few new communities pop up. Please check them out and subscribe if you like them!

- Weird West

- Squared Circle - Welcome to all the Kbin refugees!

Server Updates

What. A. Month.

Following our previous server upgrade a little while back, we’d seen server performance steadily decreasing and the site slowing down with it, sometimes taking seconds to load a page.

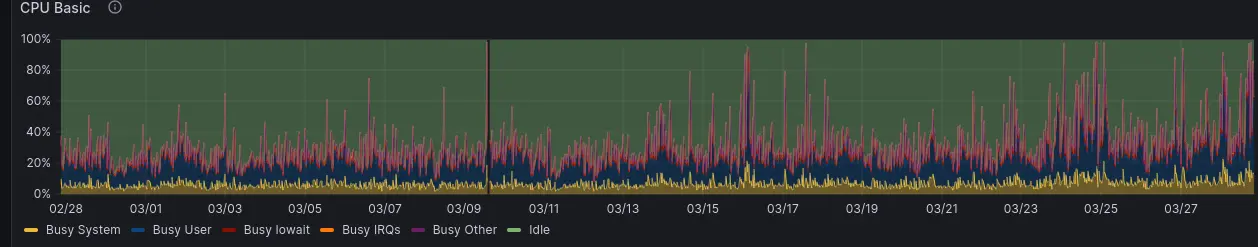

This is what I was seeing on the 27th March:

As you can see, all red = not good.

I first tried offloading all secondary services (like the mobile/old UIs and some of the admin tools we run) to a second server, which unfortunately didn’t have much of an impact overall - the server just wasn’t able to handle all the traffic and database operations anymore.

For reference, the server was a 4-core (vCPUs, so 4 threads) virtual dedicated server with 16GB of RAM (Hetzner CCX23). In some form this was still the original server we started Lemmy.zip on, just rescaled through Hetzner’s platform. At this point, rescaling it up again just wasn’t financially viable, as the price-to-performance balance had stopped favouring cloud servers and tipped over to proper dedicated servers.

So, after researching the best long-term solutions I settled on Hetzner’s EX44 dedicated server. It has 14 cores (20 threads!) and 64GB of RAM.

It is a beast.

This is the comparison performance as I type this:

This should give us plenty of growing room as the fediverse continues to expand.

Other things that happened in March include ZippyBot properly breaking, and dealing with a large spam wave hitting the Lemmy network.

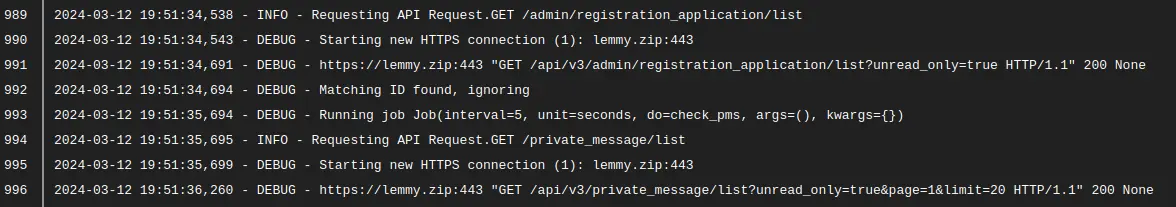

ZippyBot decided it just didn’t want to work anymore, at which point I realised that due to my own poor programming I hadn’t put enough detail in Zippy’s logs, which look a bit like this:

We use Zippy for a lot of things, like sending welcome messages to new users, background moderation, stopping spam users, etc. So having Zippy not working was not ideal.

The problem was that Zippy wasn’t throwing any errors; it was just stopping and not restarting, seemingly at random. During the initial debug phase, I implemented a docker healthcheck into Zippy (another new thing I had to learn how to do!) and used another container to keep restarting Zippy if the healthcheck came back unhealthy.
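For anyone curious what a healthcheck for a bot that stops silently might look like, here is a minimal Python sketch. It is an illustration only, not Zippy's actual code: the heartbeat file path, the 120 second threshold and the function names are all assumptions. The idea is that the bot's main loop writes a timestamp after each successful cycle, and the check reports unhealthy once that timestamp goes stale.

```python
import time

# Hypothetical values for illustration; not taken from Zippy's real config.
HEARTBEAT_PATH = "/tmp/zippy_heartbeat"
MAX_AGE_SECONDS = 120


def touch_heartbeat(path=HEARTBEAT_PATH):
    """Called by the bot's main loop after each successful cycle."""
    with open(path, "w") as fh:
        fh.write(str(time.time()))


def is_healthy(path=HEARTBEAT_PATH, max_age=MAX_AGE_SECONDS, now=None):
    """Return True if the heartbeat file exists and is recent enough."""
    now = time.time() if now is None else now
    try:
        with open(path) as fh:
            last_beat = float(fh.read().strip())
    except (OSError, ValueError):
        # Missing or corrupt heartbeat file counts as unhealthy.
        return False
    return (now - last_beat) <= max_age


def main():
    """Exit code for a healthcheck command: 0 = healthy, 1 = unhealthy."""
    return 0 if is_healthy() else 1
```

A Docker healthcheck command treats exit code 0 as healthy and 1 as unhealthy, so a small wrapper script ending in `sys.exit(main())` would be enough to wire something like this in.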

Finally, it turned out that the RSS feature built into Zippy for community mods was failing when the URL it reached out to for the feed didn’t exist anymore. One of the RSS feeds had changed their URL and not put a redirect in, so Zippy was hitting a 404 error and failing silently. There is a check when the RSS feed is set up to make sure the feed exists, but not one after that. Lesson learned.
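The kind of check that was missing can be sketched roughly as below. This is a hedged example rather than Zippy's real RSS code: the logger name, function names and the injectable `opener` parameter are assumptions made for illustration. The point is that a feed returning a 404 gets logged and skipped instead of quietly taking the whole bot down.

```python
import logging
import urllib.error
import urllib.request

logger = logging.getLogger("zippy.rss")  # hypothetical logger name


def fetch_feed(url, opener=urllib.request.urlopen, timeout=10):
    """Return the raw feed bytes, or None if the feed could not be fetched."""
    try:
        with opener(url, timeout=timeout) as response:
            return response.read()
    except urllib.error.HTTPError as err:
        # e.g. a 404 because the feed moved without a redirect
        logger.error("Feed %s returned HTTP %s", url, err.code)
    except urllib.error.URLError as err:
        logger.error("Feed %s unreachable: %s", url, err.reason)
    except TimeoutError:
        logger.error("Feed %s timed out", url)
    return None


def poll_feeds(urls, opener=urllib.request.urlopen):
    """Fetch every feed; one broken feed does not stop the others."""
    results = {}
    for url in urls:
        results[url] = fetch_feed(url, opener=opener)
    return results
```

Logging the URL and status code for every failure also addresses the earlier problem of the logs not containing enough detail to see which feed was at fault.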

The Lemmy network saw a large spam wave attack from the EvilToast instance, which resulted in multiple accounts sending thousands of comments/posts a minute and overwhelming some instances. To get around this, I temporarily defederated from EvilToast for the period of the attack, until their admin confirmed they had implemented a captcha and stopped completely open signups. Instances that allow completely open signups are usually targeted by spammers because of how easily they can be used to create so much traffic. Hopefully new instances created in the future will learn from this and enforce at least some method of validating their users.

As promised, here is a breakdown of the server move process. If you don’t care about sysadmin stuff, you can safely skip this next bit.

Making the move to the new server was a new experience for me (if you weren’t aware, I am not a sysadmin by trade and am learning as I go!) so the day of the move was a bit nerve-wracking, with visions of accidentally losing the database forever. This is the rough order of steps I took during the maintenance window.

First, I backed up the old server using Hetzner’s very handy cloud backup tool. That took about 10 minutes, after which I changed the nginx config to point away from lemmy itself. It was meant to point to the status site but I misconfigured the nginx file and instead it sent traffic to Authentik (our admin SSO solution to lock down our backend services). Oh well, thankfully this was the only thing that went wrong, so I’ll take that.

I stopped all the docker containers on both servers (main lemmy server and secondary UI server) and then compressed the postgres database files. While this was happening, I recreated the folder structure on the new server, and spun up Portainer, and then the Lemmy services stack so that the files/configs needed were generated and all in the right place, before shutting lemmy back down until everything was ready.

I then rsynced the database between both servers, and decompressed it in the correct place on the new server. All in all this took about an hour, mostly waiting for the files to compress/decompress. At this point I needed to change the DNS records to point to the new server, so I could request all the SSL certs for the required domains. This is when I took the old server offline and changed the DNS records in Cloudflare to point to the new IP.
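As a rough illustration of the compress, transfer and decompress steps, here is a small Python sketch. It is not the exact procedure used on the day (that was done with command-line tools), and the checksum comparison is an extra sanity check added for this example, not something from the steps above. The function names are hypothetical.

```python
import hashlib
import tarfile
from pathlib import Path


def compress_directory(source_dir, archive_path):
    """Pack a (stopped!) database directory into a gzip-compressed tarball."""
    with tarfile.open(str(archive_path), "w:gz") as tar:
        tar.add(str(source_dir), arcname=Path(source_dir).name)
    return archive_path


def sha256_of(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large archives do not need to fit in RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def extract_if_intact(archive_path, expected_sha256, dest_dir):
    """Only unpack on the new server if the archive matches the old server's hash."""
    if sha256_of(archive_path) != expected_sha256:
        raise ValueError("checksum mismatch: archive was corrupted in transit")
    with tarfile.open(str(archive_path), "r:gz") as tar:
        tar.extractall(str(dest_dir))
```

In this sketch the hash would be computed on the old server before the transfer and passed to `extract_if_intact` on the new one. The database containers need to be stopped first, as they were here, so the files are not changing while they are being archived.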

Once I had requested the SSL certs, I then created the nginx config files for lemmy and some of the important subdomains (e.g. the mobile site) at which point everything was in place for lemmy to be turned back on. After a very tense couple of minutes (and a few container restarts!) lemmy.zip finally came back to life just as it had been left, and pretty quickly I could see federation was working as we started to pull new posts through.

Next up was fixing pictrs as images weren’t loading - that service has a real issue with memory requirements and was stuck in a boot loop, so giving it access to 10 gigs of memory (a bit overkill admittedly) brought it back to life. And we were back!

The next hour or two was spent re-implementing the backend services, checking everything was working correctly, testing emails and images, and then finally deciding on and testing a backup solution.

As Backblaze has served us so well for images, our solution will back everything on the server up during the night (UTC) and then securely store it in a private Backblaze B2 bucket, with a full versioning solution for suitable daily, weekly, monthly and yearly backups. Good stuff. I do need to test this soon though to make sure it works on a restore. Will probably spin up a Hetzner cloud server and try it out.
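To give a feel for how a daily/weekly/monthly/yearly retention scheme decides what to keep, here is a minimal Python sketch. It is an assumption-laden illustration, not the backup tool's actual logic: the function name and the default counts (7 daily, 4 weekly, 12 monthly, 3 yearly) are made up for the example. For each tier it keeps the newest backup in each of the most recent periods.

```python
from datetime import date


def backups_to_keep(backup_dates, daily=7, weekly=4, monthly=12, yearly=3):
    """Return the set of backup dates to keep under a tiered retention policy."""
    keep = set()
    newest_first = sorted(set(backup_dates), reverse=True)
    # Each rule: how many periods to keep, and how to map a date to its period.
    rules = [
        (daily, lambda d: d),
        (weekly, lambda d: tuple(d.isocalendar())[:2]),  # (ISO year, ISO week)
        (monthly, lambda d: (d.year, d.month)),
        (yearly, lambda d: d.year),
    ]
    for limit, period_of in rules:
        seen = []
        for d in newest_first:
            period = period_of(d)
            if period in seen:
                continue  # a newer backup already represents this period
            if len(seen) >= limit:
                break
            seen.append(period)
            keep.add(d)
    return keep


# Example: 400 nightly backups ending on 30 April 2024.
last = date(2024, 4, 30)
nightly = [date.fromordinal(last.toordinal() - i) for i in range(400)]
kept = backups_to_keep(nightly)
print(f"keeping {len(kept)} of {len(nightly)} backups")
```

Anything not in the returned set would be eligible for deletion, which is what keeps storage from growing without limit while still leaving older restore points available.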

I am happy to expand on this process if anyone is interested, just ask away in the comments.

Interaction reminder (help support the instance!)

If you’re new to Lemmy.zip - WELCOME! I hope you’re enjoying your time here :)

I just want to let everyone know that the easiest way you can support Lemmy.zip is to actively engage with content here: upvoting content you enjoy, sharing your thoughts through posts and comments, sparking meaningful discussions, or even creating new communities that resonate with your interests.

It’s natural to see fluctuations in user activity over time, and we’ve seen this over the wider lemmy-verse for some time now. However, if you’ve found a home here and love this space, now is the perfect opportunity to help us thrive.

If you want to support us in a different way than financially, then actively interacting with the instance helps us out loads.

Donations

Lemmy.zip only continues to exist because of the generous donations of its users. The operating cost of Lemmy.zip has now tipped over 50 euros a month ($54, £43) and that’s not including the surrounding services (email, URL, hosting the separate status server - which I am covering myself).

We keep all the details around donations on our OpenCollective page, with full transparency around income and expenditure.

If you’re enjoying Lemmy.zip, please check out the OpenCollective page, where we have a selection of one-off and recurring donation options. All funds go directly to hosting the site and keeping the virtual lights on.

We continue to have some really kind and generous donators and I can’t express my thanks enough. You can see all the kind donators in the Thank You thread - you could get your name in there too!

I am also looking at future fundraiser ideas (fundraiser committee, anyone?) - if you have any ideas please let me know either in the comments, via PM, or on Matrix.

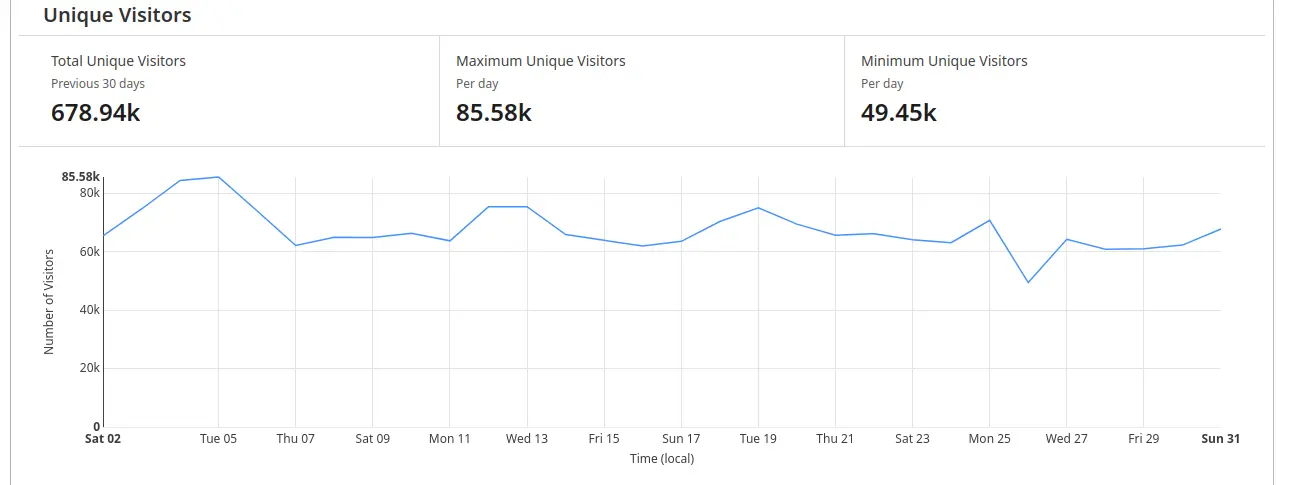

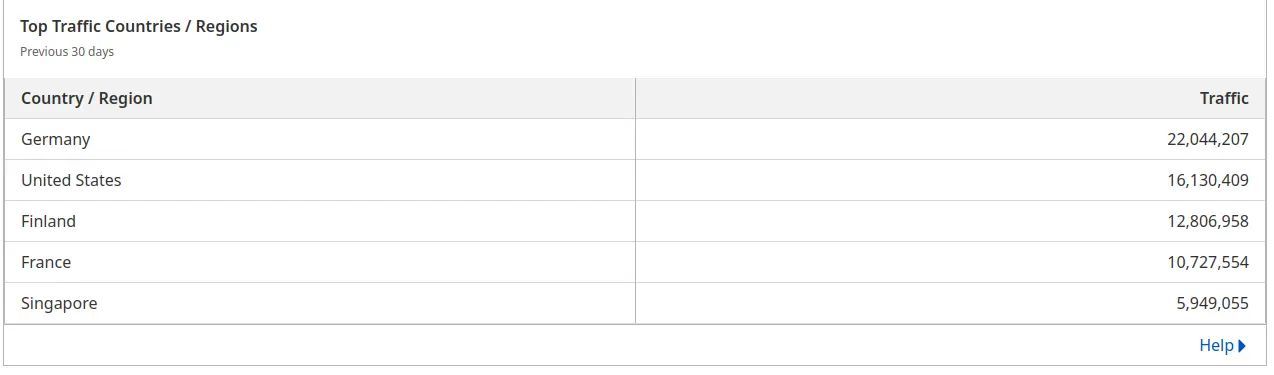

Graph time!

This was the monthly performance of the old server before it was turned off:

You can see the demand really start to ramp up towards the end of the month.

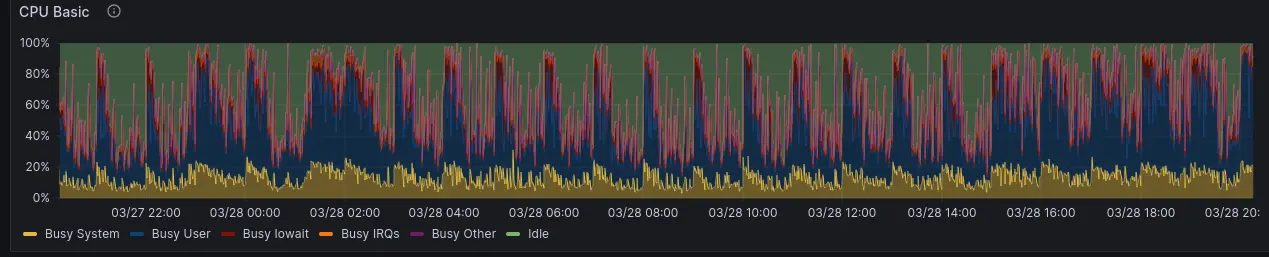

This was the 24 hour performance on the 28th:

The hourly peaks were where the performance really dropped out for about 20 minutes, followed by 40 minutes of still pretty poor performance.

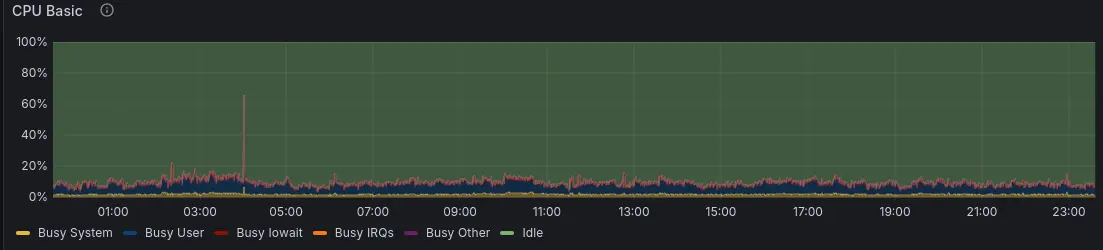

This is the performance of the new server over the last 24 hours:

Just a little bit of a difference :)

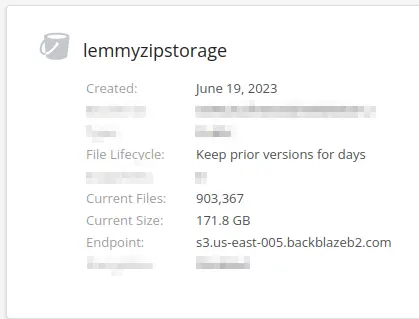

Our current image hosting stats:

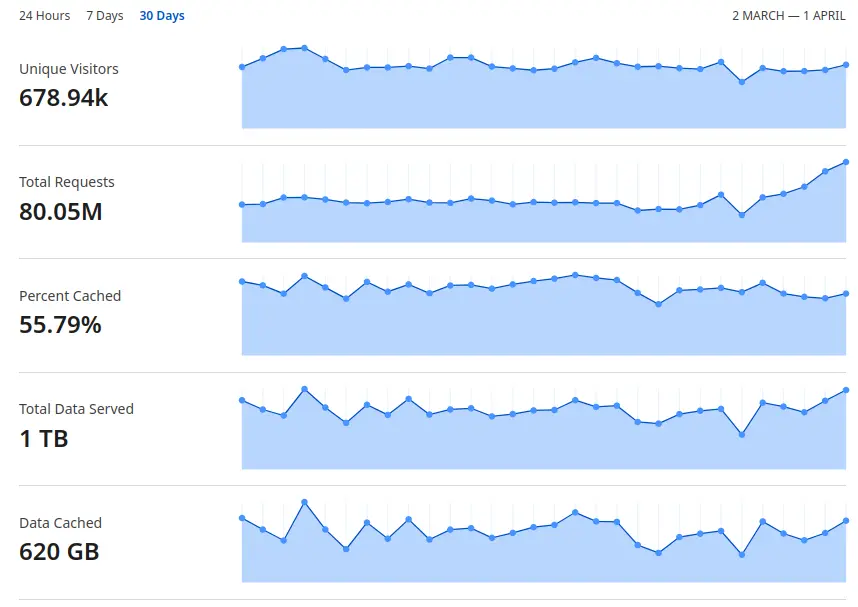

This is our Cloudflare overview for the last month:

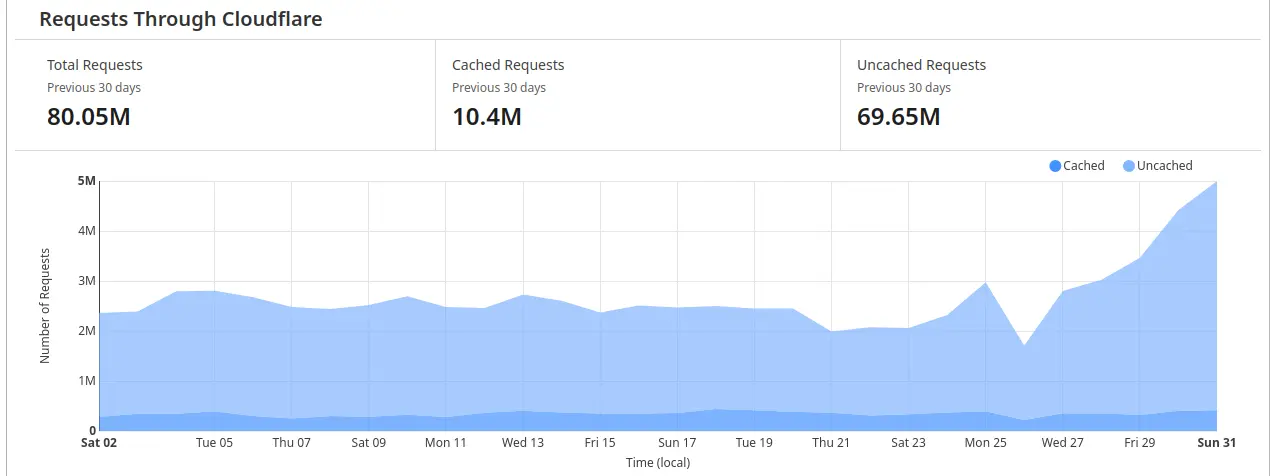

Cloudflare requests:

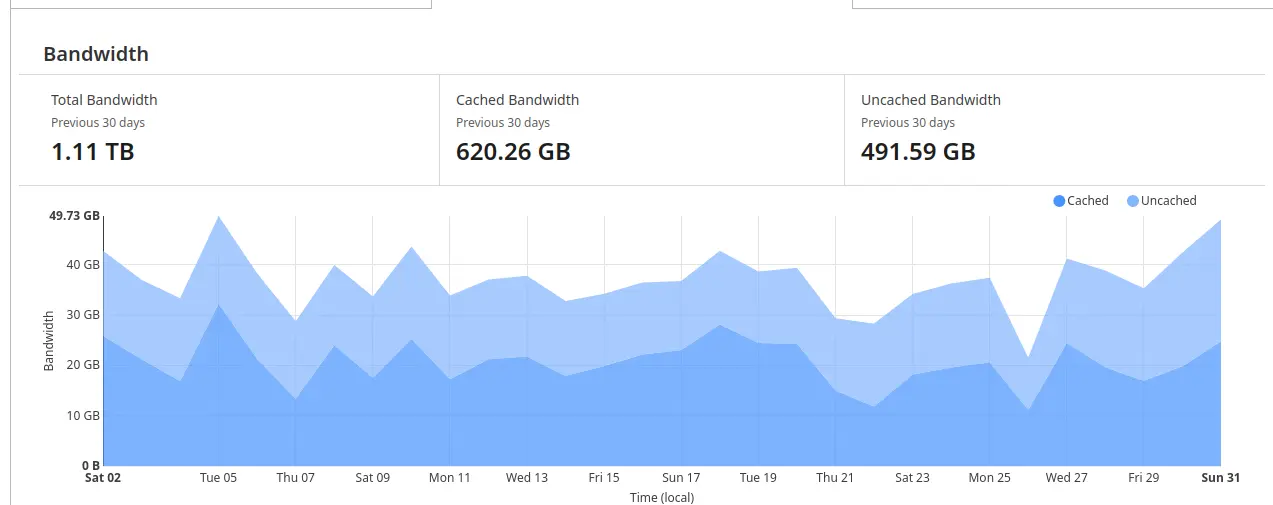

Cloudflare bandwidth:

Cloudflare unique visitors:

Cloudflare traffic:

And that’s pretty much it. As always, if there’s anything you’d like more information on, or you’d like to see included in these regularly, let me know.

Cheers,

Demigodrick

Thank you for all the hard work and time you put into this community!