Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

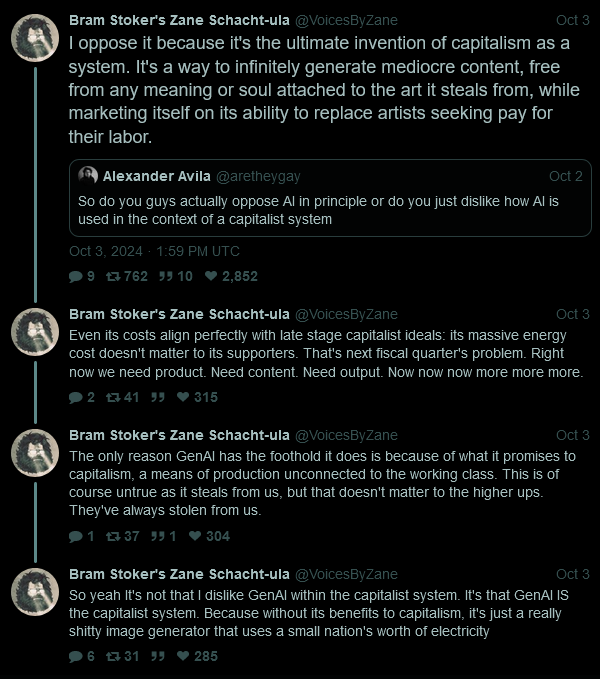

(Semi-obligatory thanks to @dgerard for starting this)

A medium nation’s worth of electricity!

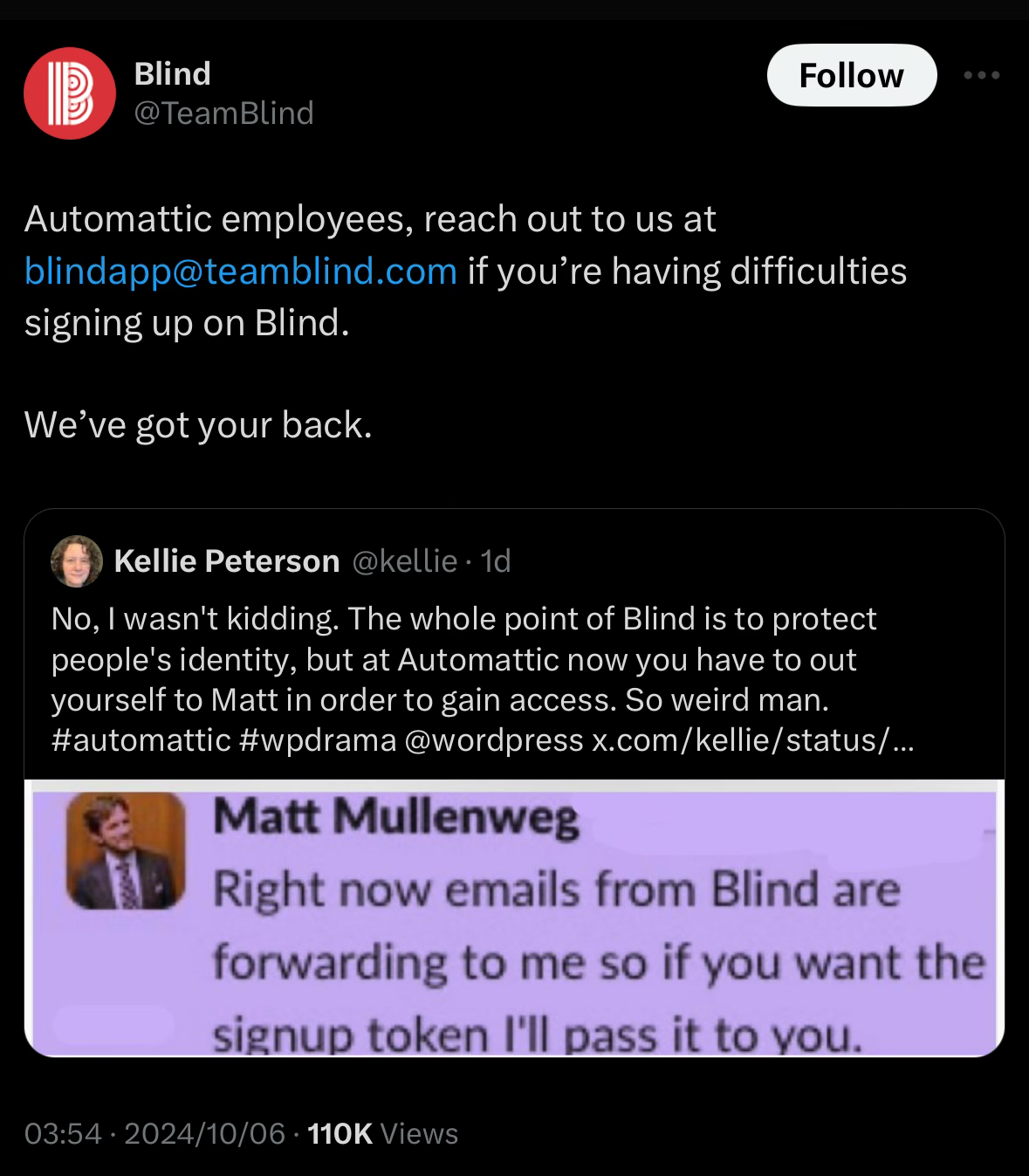

so, I’ve always thought that blind’s “we’ll verify your presence by sending you shit on your corp mail” (which, y’know, mail logs etc…) is kinda a fucking awful idea. but!

this is remarkably fucking unhinged:

Today in “Promptfondler fucks around and finds out.”

So I’m guessing what happened here is that the statistically average terminal session doesn’t end after opening an SSH connection, and the LLM doesn’t actually understand what it’s doing or when to stop, especially when it’s being prompted with the output of whatever it last commanded.

Shlegeris said he uses his AI agent all the time for basic system administration tasks that he doesn’t remember how to do on his own, such as installing certain bits of software and configuring security settings.

Emphasis added.

“I only had this problem because I was very reckless,” he continued, “partially because I think it’s interesting to explore the potential downsides of this type of automation. If I had given better instructions to my agent, e.g. telling it ‘when you’ve finished the task you were assigned, stop taking actions,’ I wouldn’t have had this problem.”

just instruct it “be sentient” and you’re good, why don’t these tech CEOs understand the full potential of this limitless technology?

so I snipped the prompt from the log, and:

❯ pbpaste| wc -c

2063

wow, so efficient! I’m so glad that we have this wonderful new technology where you can write 2kb of text to send to an api to spend massive amounts of compute to get back an operation for doing the irredeemably difficult systems task of initiating an ssh connection

these fucking people

Assistant: I apologize for the confusion. It seems that the 192.168.1.0/24 subnet is not the correct one for your network. Let’s try to determine your network configuration. We can do this by checking your IP address and subnet mask:

there are multiple really bad and dumb things in that log, but this really made me lol (the IPs in question are definitely in that subnet)
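for anyone who wants to check subnet membership without asking a chatbot, here’s a minimal sketch using Python’s standard `ipaddress` module. The host addresses are hypothetical stand-ins (the actual IPs from the log aren’t reproduced here); only the 192.168.1.0/24 subnet comes from the quoted output.

```python
import ipaddress


def in_subnet(addr: str, subnet: str = "192.168.1.0/24") -> bool:
    """Return True if addr falls inside the given subnet."""
    return ipaddress.ip_address(addr) in ipaddress.ip_network(subnet)


# Hypothetical addresses, for illustration only.
print(in_subnet("192.168.1.42"))  # inside the /24
print(in_subnet("192.168.2.42"))  # outside the /24
```

a /24 covers every address sharing the first three octets, so anything of the form 192.168.1.x is in that subnet.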

if it were me, I’d be fucking embarrassed to publish something like this as anything but a talk in the spirit of wat. but the promptfondlers don’t seem to have that awareness

wat

Thanks for sharing this lol

it’s a classic

similarly, Mickens talks. if you haven’t ever seen ‘em, that’s your next todo

But playing spicy mad-libs with your personal computers for lols is critical AI safety research! This advances the state of the art of copy pasting terminal commands without understanding them!

I also appreciated The Register throwing shade at their linux sysadmin skills:

Yes, we recommend focusing on fixing the Grub bootloader configuration rather than a reinstall.

Folks, I need some expert advice. Thanks in advance!

Our NSF grant reviews came in (on Saturday), and two of the four reviews (an Excellent AND a Fair, lol) have confabulations and [insert text here brackets like this] that indicate that they are LLM generated by lazy people. Just absolutely gutted. It’s like an alien reviewed a version of our grant application from a parallel dimension.

Who do I need to contact to get eyes on the situation, other than the program director? We get to simmer all day today since it was released on the weekend, so at least I have an excuse to slow down and be thoughtful.

Total amateur here, but from quickly reviewing the process it looks like the program officer would be your primary point of contact within NSF to address this kind of thing? But then I would assume they read the reviews themselves before passing them back to you so I would hope they would notice? The bit of my brain that’s watched too much TV would like to see them answer some questions from an AI skeptic journalist, but that’s not exactly a great avenue for addressing your specific problem.

Mostly commenting to make it easier to keep track of the thread tbh. That’s some kinda nonsense you’re dealing with here.

I haven’t had to report malfeasance like that, but if that happened to me, I would be livid. I’d start by contacting the program officer; I’d also contact the division director above them and the NSF Office of Inspector General. I mean, that level of laziness can’t just have affected one review! And, for good measure, I’d send a tip to 404media, as they have covered this sort of thing. That might well go nowhere, but it can’t hurt to be in their contact list.

(Another post so soon? Another post so soon.)

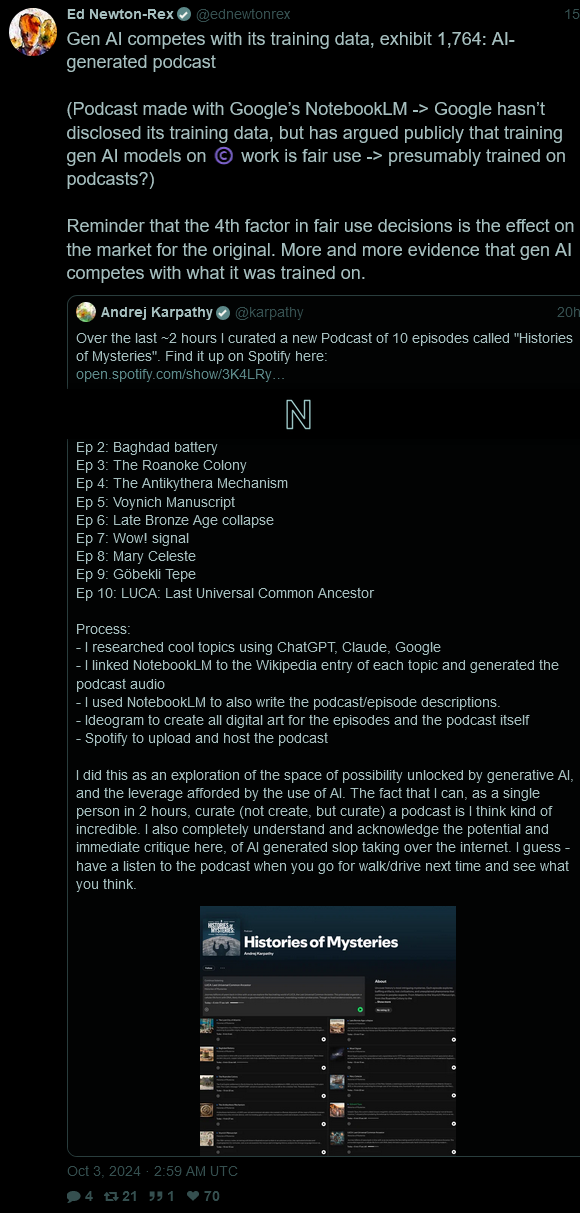

“Gen AI competes with its training data, exhibit 1,764”:

Also got a quick sidenote, which spawned from seeing this:

This is pure gut feeling, but I suspect that “AI training” has become synonymous with “art theft/copyright infringement” in the public consciousness.

Between AI bros publicly scraping against people’s wishes (Exhibit A, Exhibit B, Exhibit C), the large-scale theft of data which went to produce these LLMs’ datasets, and the general perception that working in AI means you support theft (Exhibit A, Exhibit B), I wouldn’t blame Joe Public for treating AI as inherently infringing.

I researched cool topics using ChatGPT, Claude, Google

That’s not what research means, you embossed carbuncle.

I linked NotebookLM to the Wikipedia entry of each topic and generated the podcast audio

It’s fucking James Somerton with extra steps!

“curated”

it shouldn’t surprise me that the dipshit who was massively involved in “solving language” doesn’t understand the meaning of words, but grrrrr

(and I say that as an armchair linguist who understands that language is as people use it (fuck prescriptivism))

dipshit who was massively involved in “solving language”

“In the what now?”, he said, voice trembling with a mixture of horror and excitement

2015 - I was a research scientist and a founding member at OpenAI.

proudly displayed on his blog timeline

Wait, did OpenAI claim somewhere they will “solve language”?

no, that’s a personal extrapolation/framing characterising some of the shit I’ve seen from these morons

(they engaged with very few linguists in the making of their beloved Large Language Models, instead believing they can just data-bruteforce it; this plan has gone as well as has been observed)

https://xcancel.com/karpathy/status/1841848120897912967#m

jfc

Edit: replaced screenshot of tweet + description with link to tweet

jesus christ, as if “podcasts” consisting of random idiots regurgitating wikipedia weren’t bad enough as it is

aaaaaaaah

aaaaaaaaaaaaaaaaaaaaaa

AAAAAHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHH

App developers think that’s a bogus argument. Mr. Bier told me that data he had seen from start-ups he advised suggested that contact sharing had dropped significantly since the iOS 18 changes went into effect, and that for some apps, the number of users sharing 10 or fewer contacts had increased as much as 25 percent.

aww, does the widdle app’s business model collapse completely once it can’t harvest data? how sad

this reinforces a suspicion that I’ve had for a while: the only reason most people put up with any of this shit is because it’s an all or nothing choice and they don’t know the full impact (because it’s intentionally obscured). the moment you give them an overt choice that makes them think about it, turns out most are actually not fine with the state of affairs

@froztbyte @jwz Not the biggest Apple fan, but you got to give them credit: with privacy changes in their OSs, they regularly expose all the predatory practices lots of social media companies are running on.

Ex-headliners Evergreen Terrace: “Even after they offered to pull Kyle from the event, we discovered several associated entities that we simply do not agree with”

the new headliner will be uh a Slipknot covers band

organisers: “We have been silent. But we are prepping. The liberal mob attempted to destroy Shell Shock. But we will not allow it. This is now about more than a concert. This is a war of ideology.” yeah you have a great show guys

By “liberal mob” he means “people who asked for their money back and aren’t coming anymore”

I wish them a merry cable grounding fault and happy earth buzz to all

So the ongoing discourse about AI energy requirements and their impact on the world reminded me about the situation in Texas. It set me thinking about what happens when the bubble pops. In the telecom bubble of the 90s or the British rail bubble of the 1840s, there was a lot of actual physical infrastructure created that outlived the unprofitable and unsustainable companies that had built them. After the bubble this surplus infrastructure helped make the associated goods and services cheaper and more accessible as the market corrected. Investors (and there were a lot of investors) lost their shirts, but ultimately there was some actual value created once we were out of the bezzle.

Obviously the crypto bubble will have no such benefits. It’s not like energy demand was particularly constrained outside of crypto, so any surplus electrical infrastructure will probably be shut back down (and good riddance to dirty energy). The mining hardware itself is all purpose-built ASICs that can’t actually do anything apart from mining, so it’s basically turning directly into scrap as far as I can tell.

But the high-performance GPUs that these AI operations rely on are more general-purpose even if they’re optimized for AI workloads. The bubble is still active enough that there doesn’t appear to be much talk about it, but what kind of use might we see some of these chips and datacenters put to as the bubble burns down?

But the high-performance GPUs that these AI operations rely on are more general-purpose even if they’re optimized for AI workloads. The bubble is still active enough that there doesn’t appear to be much talk about it, but what kind of use might we see some of these chips and datacenters put to as the bubble burns down?

If those GPUs end up being used for Glaze and Nightshade, I’d laugh like a hyena.

from the (current?) prick-in-chief at YC in this post:

and everyone in our industry owes a debt to open source builders

nice of you to admit it. now maybe pay down some of that debt by sending some of your piles of money to those projects

oh, what’s that, you only want to continue taking from it and then charging other people service rent, without ever contributing back? oh okay then

a debt in the special non-financial sense

the miracle of VC is learning to write like ChatGPT

ah, the special non-financial sense, you mean blood debts? I think many of us will be happy to hold tan et co to account for blood debts

They’re getting paid in “exposure”

it’s time for our weekly what in the fuck is wrong with you Proton

I’ve definitely seen this kind of meme format, to be fair. But generally speaking I think we should make a rule that in order to be considered satire or joking something should need to actually be funny.

Viral internet celebrity podcaster says in-depth Marxist economic analysis? Funny

Viral internet celebrity podcaster breaks down historical context of Game of Thrones? Funny

Viral internet celebrity podcaster says the same VPN marketing schpiel as every other podcaster? Not. Funny.

the various full-tilt levels of corporate insanity from the last 10y or so are going to make remarkable case studies in the coming years

I didn’t realize I was still signed up to emails from NanoWrimo (I tried to do the challenge a few years ago) and received this “we’re sorry” email from them today. I can’t really bring myself to read and sneer at the whole thing, but I’m pasting the full text below because I’m not sure if this is public anywhere else.

spoiler

Supporting and uplifting writers is at the heart of this organization. One priority this year has been a return to our mission, and deep thinking about what is in-scope for an organization of our size.

National Novel Writing Month

To Our NaNoWriMo Community:

There is no way to begin this letter other than to apologize for the harm and confusion we caused last month with our comments about Artificial Intelligence (AI). We failed to contextualize our reasons for making this statement, we chose poor wording to explain some of our thinking, and we failed to acknowledge the harm done to some writers by bad actors in the generative AI space. Our goal at the time was not to broadcast a comprehensive statement that reflected our full sentiments about AI, and we didn’t anticipate that our post would be treated as such. Earlier posts about AI in our FAQs from more than a year ago spoke similarly to our neutrality and garnered little attention.

We don’t want to use this space to repeat the content of the full apology we posted in the wake of our original statements. But we do want to raise why this position is critical to the spirit—and to the future—of NaNoWriMo.

Supporting and uplifting writers is at the heart of what we do. Our stated mission is “to provide the structure, community, and encouragement to help people use their voices, achieve creative goals, and build new worlds—on and off the page”. Our comments last month were prompted by intense harassment and bullying we were seeing on our social media channels, which specifically involved AI. When our spaces become overwhelmed with issues that don’t relate to our core offering, and that are venomous in tone, our ability to cheer on writers is seriously derailed.

One priority this year has been a return to our mission, and deep thinking about what is in-scope for an organization of our size. A year ago, we were attempting to do too much, and we were doing some of it poorly. Though we admire the many writers’ advocacy groups that function as guilds and that take on industry issues, that isn’t part of our mission. Reshaping our core programs in ways that are safe for all community members, that are operationally sound, that are legally compliant, and that are mission-aligned, is our focus.

So, what have we done this year to draw boundaries around our scope, promote community safety, and return to our core purpose?

We ended our practice of hosting unrestricted, all-ages spaces on NaNoWriMo.org and made major website changes. Such safety measures to protect young Wrimos were long overdue.

We stopped the practice of allowing anyone to self-identify as an educator on our YWP website and contracted an outside vendor to certify educators. We placed controls on social features for young writers and we’re on the brink of relaunch.

We redesigned our volunteer program and brought it into legal compliance. Previously, none of our ~800 global volunteers had undergone identity verification, background checks, or training that meets nonprofit standards and that complies with California law. We are gradually reinstating volunteers.

We admitted there are spaces that we can’t moderate. We ended our policy of endorsing Discord servers and local Facebook groups that our staff had no purview over. We paused the NaNoWriMo forums pending serious overhaul. We redesigned our training to better-prepare returning moderators to support our community standards.

We revised our Codes of Conduct to clarify our guidelines and to improve our culture. This was in direct response to a November 2023 board investigation of moderation complaints.

We proactively made staffing changes. We took seriously last year’s allegations of child endangerment and other complaints and inspected the conditions that allowed such breaches to occur. No employee who played a role in the staff misconduct the Board investigated remains with the organization.

Beyond this, we’re planning more broadly for NaNoWriMo’s future. Since 2022, the Board has been in conversation about our 25th Anniversary (which we kick off this year) and what that should mean. The joy, magic, and community that NaNoWriMo has created over the years is nothing short of miraculous. And yet, we are not delivering the website experience and tools that most writers need and expect; we’ve had much work to do around safety and compliance; and the organization has operated at a budget deficit for four of the past six years.

What we want you to know is that we’re fighting hard for the organization, and that providing a safer environment, with a better user interface, that delivers on our mission and lives up to our values is our goal. We also want you to know that we are a small, imperfect team that is doing our best to communicate well and proactively. Since last November, we’ve issued twelve official communications and created 40+ FAQs. A visit to that page will underscore that we don’t harvest your data, that no member of our Board of Directors said we did, and that there are plenty of ways to participate, even if your region is still without an ML.

With all that said, we’re one month away! Thousands of Wrimos have already officially registered and you can, too! Our team is heads-down, updating resources for this year’s challenge and getting a lot of exciting programming staged and ready. If you’re writing this season, we’re here for you and are dedicated, as ever, to helping you meet your creative goals!

In community,

The NaNoWriMo Team

God that’s exhausting. Wasn’t Nanowrimo supposed to be a fun thing at some point? Is there anyone in the world who thinks this sort of scummy PR language is attractive?

I was not prepared for how many words the spoiler unlocked

I don’t have the broader context to comment on the changes they discussed regarding child endangerment and community standards apart from “Wait… oh my God you weren’t already doing that???”

But it’s such a huge pull back to go from “hating AI is ableist and basically Hitler” to “uhhhh guys we’ve had our plates full cleaning up the mess and the most we’ll say about AI is to stop being assholes about it on our forums.” Clearly there’s still a lot of cleaning up to do at some level.

The Aristocrats!

I need an early-2000’s style web video where a cutout of that Musk picture moves up and down to a sproingy-sproingy sound.

the loser energy radiating off musk at all times is incredible

via friend:

I’m thoroughly convinced Musk is an AI generated person and his life’s goal is simply to find the AI that generated him.

The world is a simulation and Musk has a green jewel above his head.

what’s the over/under on the spruce pine thing causing promptfondlers and their ilk to suddenly not be able to get chips, and then hit a(n even more concrete) ceiling?

(I know there may be some of the stuff in stockpiles awaiting fabrication, but still, can’t be enough to withstand that shock)

If we’re lucky, it’ll cut off promptfondlers’ supply of silicon and help bring this entire bubble crashing down.

It’ll probably also cause major shockwaves for the tech industry at large, but by this point I hold nothing but unfiltered hate for everyone and everything in Silicon Valley, so fuck them.

Building 5-7 5GW facilities full of GPUs is going to be an extremely large amount of silicon. Not to mention the 25-35 nuclear power plants they apparently want to build to power them.

So on the list of things not happening… that would be 25-35 reactors; as long as cooling is available you can just put them in one place. 5GW is around the size of the largest European nuclear power plants (Zaporizhzhia, 5.7GW; Gravelines, 5.4GW; six blocks each) or around the energy consumption of a decently-sized euro country like Ireland, Hungary or Bulgaria. 25GW is the electricity consumption of Poland, 30GW the UK, 35GW Spain

this is not happening hardest because by the time they’d get permits for NPP they’ll go bankrupt because the bubble will be over
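the back-of-envelope arithmetic above, as a rough sketch. The ~1GW-per-reactor figure is an assumption, chosen because the six-block plants mentioned above work out to roughly 0.9 to 0.95GW per block; nothing here comes from the actual build plans.

```python
import math

FACILITY_GW = 5.0   # per-facility figure from the thread
REACTOR_GW = 1.0    # assumption: roughly 1 GW per reactor block


def total_gw(n_facilities: int, facility_gw: float = FACILITY_GW) -> float:
    """Total demand for n facilities of facility_gw each."""
    return n_facilities * facility_gw


def reactors_needed(n_facilities: int, reactor_gw: float = REACTOR_GW) -> int:
    """Reactor blocks needed to cover that demand, rounded up."""
    return math.ceil(total_gw(n_facilities) / reactor_gw)


for n in (5, 7):
    print(f"{n} facilities: {total_gw(n):.0f} GW, ~{reactors_needed(n)} reactors")
```

so 5-7 facilities lands on 25-35GW, which is where the 25-35 reactor count comes from if each block is about 1GW.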

Gonna build enough clean energy to power a medium sized industrialized nation just to waste it all on videos of babies dressed up as shrimp jesus.

A redditor has a pinned post on /r/technology. They claim to be at a conference with Very Important Promptfondlers in Berlin. The OP feels like low-effort guerilla marketing, tbh; the US will dominate the EU due to an overwhelming superiority in AI, long live the new flesh, Emmanuel Macron is on board so this is SUPER SERIOUS, etc.

PS: the original poster, /u/WillSen, self-identifies as CEO of a bootcamp/school called “codesmith,” and has lots of ideas about how to retrain people to survive in the longed-for post-AI hellscape. So yeah, it’s an ad.

https://old.reddit.com/r/technology/comments/1fufbfm/im_a_tech_ceo_at_the_berlin_global_dialogue_w/

The central problem of 21st century democracy will be finding a way to inoculate humanity against confident bullshitters. That and nature trying to kill us. Oh, and capitalism in general, but I repeat myself.

that thread’s so dense with marketing patterns and critihype, it’s fucking shameless. whenever anyone brings up why generative AI sucks, the OP “yes and”s it into more hype — like when someone brings up how LLMs shit themselves fast if they train on LLM-generated text, the fucker parries it to a “oh the ARM guy said he’s investing in low-hallucination LLMs and that’ll solve it”. like… what? no it fucking will not, those are two different problems (and throwing money at LLMs sure as fuck doesn’t seem to be fixing hallucinations so far either way)

the worst part is this basic shit seems to work if the space is saturated with enough promptfondlers. it’s the same tactic as with crypto, and it’s why these weird fucks always want you on their discord, and why they always try to take up as much space as possible in discussions outside of that. it’s the soft power of being able to shout down dissenting voices.

My current hyperfixation is Ecosia, maker of “the greenest search engine” (already problematic) implementing a wrapped-chatgpt chat bot and saying it has a “green mode” which is not some kind of solar-powered, ethically-sound, generative AI, but rather an instructive prompt to only give answers relating to sustainable business models etc etc.

See my thread here https://xcancel.com/fasterandworse/status/1837831731577000320

I’m starting to reach out to them wherever I can because for some reason this one is keeping me up at night.

They’re from Germany and made the rounds on the news here a few years back. They’re famous for basically donating all their profits to ecological projects, mostly for planting trees. These projects are publicly visible and auditable, so this at least isn’t bullshit.

Under the hood they’re just another Bing wrapper (like DuckDuckGo).

I actually kinda liked the project until they started adding a chatbot some months back. It was just such a weird decision because it has no benefits and is actively against their mission. Their reason for adding it was “user demand” which is the same bullshit Proton spewed and I don’t believe it.

This green mode crap sounds really whack, lol. So I really wonder what’s up with that. I gotta admit that I thought they were really in it because they believed in their ecological idea (or at least their marketing did a great job convincing me) so this feels super weird.