Welcome to 2024! I hope everyone had a nice and enjoyable festive period, and I’m excited to see what the next 12 months hold for us all!

Here is the monthly update on everything happening with Lemmy.zip.

New Communities

Server Updates

So quite a lot has happened since the December update, including the release of Lemmy 0.19 and 0.19.1, federation troubles across the Lemmy network, a change to our hardware, and some moderator-friendly additions to ZippyBot.

- Lemmy 0.19 Release

On 16th December we took the server briefly offline to apply the 0.19 update. The database migrations took about 20 minutes, and then everything came back online quite smoothly. We also took the opportunity to update the server OS and all of the supporting services to their latest versions, which took about 2 hours in total, most of it spent waiting for backups to complete. We have updated Pictrs (the software that shows you the images!) to its latest non-beta version, but an upcoming Pictrs update needs some downtime for preparatory work (core Lemmy will still work during it). We’ll post more on this when it happens.

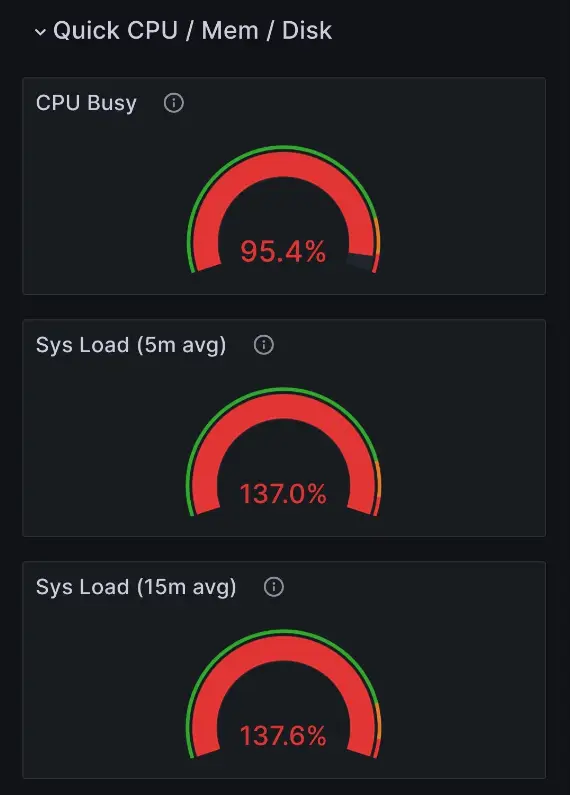

We quickly discovered quite a big issue (well, technically two, but the second was caused by Docker-specific settings and we worked through it; I’m happy to expand on that if anyone is interested): suddenly our CPU was maxing out, our RAM was maxing out, and the site was grinding to a halt. Something has changed, most likely related to the new persistent federation queue, that causes the database to continuously eat server resources. Here’s a lovely screenshot of the server maxing out, which I posted to our Matrix chat during my “wtf is happening” phase:

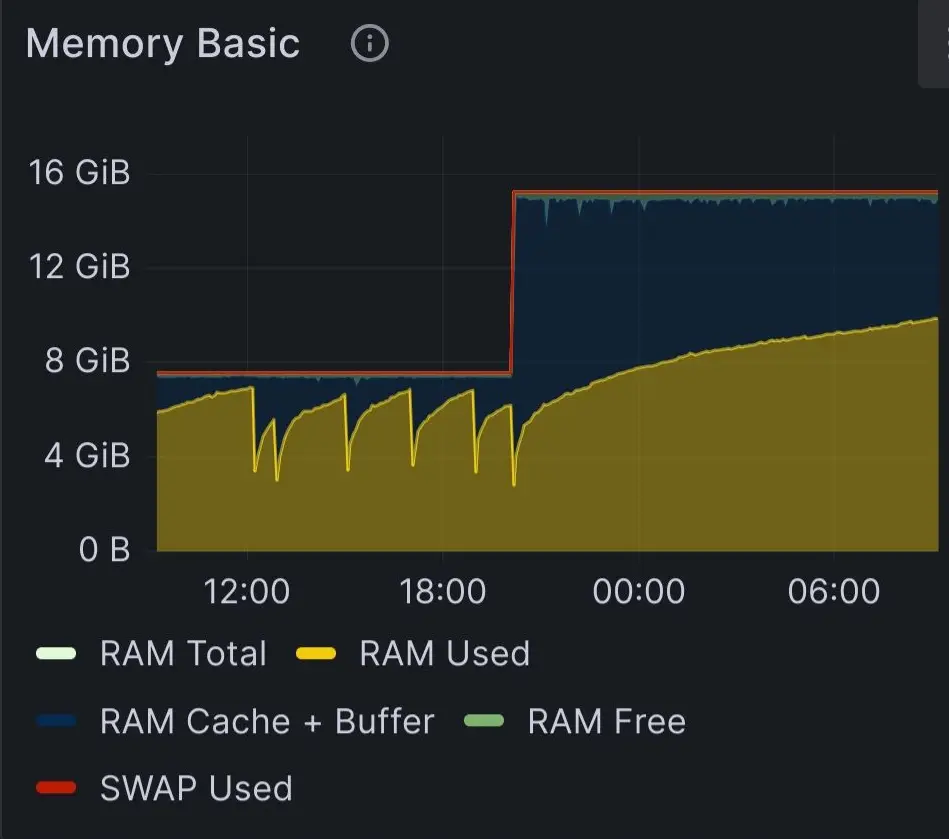

Because of this, I’ve finally had to admit defeat and move us off a VPS onto a dedicated server (technically dedicated vCPUs). This has more or less sorted our performance issues: the database now gets restarted once a day instead of every hour and a half.

This graph shows the before and after of our RAM usage. The left side shows the database maxing out our measly 8 GB of RAM, and then the system killing and restarting the database in a vicious cycle. The right side shows our new RAM usage: it’s still going up, but with 16 GB the cycle only happens once a day, and for a second or two at most. Unfortunately this is a bug I can’t fix, and apparently not every instance is affected (although I have seen a fair few other admins mention it), so hopefully it can be fixed at some point.

There is also a federation bug that, because of all the database restarts, we didn’t actually hit until we had a bit more power; then the server suddenly stopped federating with lots of other instances. There is a really handy public tool where you can see all this info live. Note that the “failing” instance numbers are fine: if you try to visit those instances, you’ll probably get an error. The “dead” instances are ones our server hasn’t been able to communicate with for 3 days, so it has stopped trying. Feel free to check that list, and if you think any shouldn’t be on there, let me know and I can reset them manually.

The issue lies with the “lagging” instances: we suddenly saw a thousand instances on that list, including some of the biggest, which indicated a problem. It’s normal to see one or two there, but not a thousand. Thankfully, a database restart fixes this, so I’m manually checking that website once or twice a day and restarting the database if necessary. If you do notice any issues, ping me a message and I’ll take a look. I plan to automate this in the future, but I haven’t fully recovered from the festivities yet!
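Automating that manual check could look roughly like the sketch below. It is hypothetical: it assumes each remote instance can be described by a last-successful-delivery time and a "has pending activities" flag, which is not necessarily how the public tool reports things. The 3-day cut-off for "dead" comes from the post; the lag window and restart threshold are made-up values. It only decides whether a restart looks warranted and leaves the actual restart out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# From the post: instances unreachable for 3 days are treated as "dead".
DEAD_AFTER = timedelta(days=3)
# Hypothetical values: how stale a delivery must be to count as "lagging",
# and how many lagging instances suggest the bug rather than normal noise.
LAG_WINDOW = timedelta(minutes=30)
RESTART_THRESHOLD = 50


@dataclass
class InstanceState:
    """Hypothetical per-instance federation status."""
    domain: str
    last_success: datetime  # last successful delivery to this instance
    has_pending: bool       # activities still waiting to be sent


def classify(state: InstanceState, now: datetime) -> str:
    """Label one remote instance as 'dead', 'lagging' or 'ok'."""
    age = now - state.last_success
    if age >= DEAD_AFTER:
        return "dead"
    if state.has_pending and age >= LAG_WINDOW:
        return "lagging"
    return "ok"


def should_restart_db(states: list[InstanceState], now: datetime) -> bool:
    """True when the lagging count is far above the usual one or two."""
    lagging = sum(1 for s in states if classify(s, now) == "lagging")
    return lagging >= RESTART_THRESHOLD


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    states = [
        InstanceState("a.example", now - timedelta(hours=2), True),
        InstanceState("b.example", now - timedelta(minutes=1), False),
    ]
    print(should_restart_db(states, now))  # one lagging instance: False
```

Counting lagging instances against a threshold, rather than restarting on any single laggard, mirrors the observation that one or two on the list is normal.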

Lemmy 0.19.1 was also released, fixing some bugs such as the “show read posts” option not working; we applied it quietly.

- ZippyBot Moderator updates

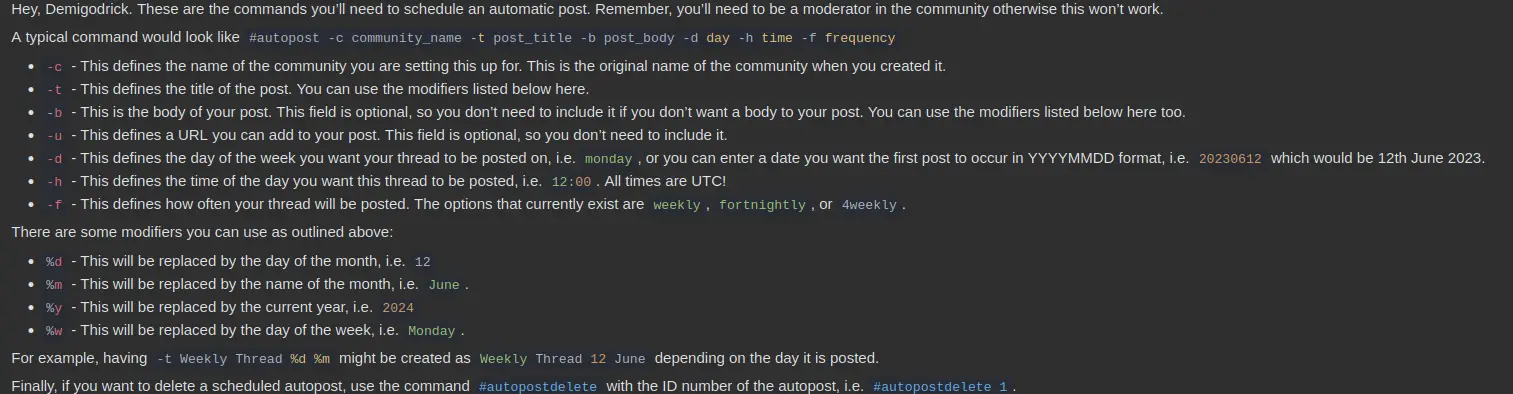

Work continues on building some form of serviceable “automod” for moderators, and Zippy now lets community mods schedule posts to their communities, either as one-offs or on an ongoing basis.

The command for this is #autopost followed by some qualifiers - you can get the full info by sending Zippy a message with the command #autoposthelp, which will send you back something like this:

I’ll keep updating this with more functionality, and I’m always open to ideas.
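For anyone curious how one-off versus ongoing scheduling can be handled, here is a hypothetical sketch. It is not ZippyBot’s actual code and is not tied to the #autopost syntax; it just computes when a post is next due, given a first time and an optional repeat interval.

```python
from datetime import datetime, timedelta
from typing import Optional


def next_post_time(
    start: datetime, repeat_every: Optional[timedelta], now: datetime
) -> Optional[datetime]:
    """Return the next scheduled post time strictly after `now`.

    start:        first time the post is due
    repeat_every: None for a one-off post, otherwise the repeat interval
    Returns None once a one-off post's time has passed.
    """
    if now < start:
        return start
    if repeat_every is None:
        return None
    # Whole intervals already elapsed since the first post (timedelta // timedelta -> int).
    elapsed = (now - start) // repeat_every
    return start + (elapsed + 1) * repeat_every


if __name__ == "__main__":
    weekly_start = datetime(2024, 1, 1, 9, 0)
    print(next_post_time(weekly_start, timedelta(days=7), datetime(2024, 1, 3)))
    # 2024-01-08 09:00:00
```

Computing each due time from the original start, rather than adding the interval to whenever the last post actually went out, keeps a weekly post from drifting later if the bot is ever delayed.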

Donations

This is probably a more important topic than it has been before. Thanks to the extreme generosity of some of our users, we’ve been in a financial position where we haven’t needed to worry about funding too much. However, with the move from a VPS to a dedicated server, our costs have almost doubled, from roughly £16.36 a month to £30 a month. This means that, if nobody donated another penny, we’d run out of funds in less than 8 months. Our current monthly donations also fall just short of this new figure, so even if donations stay at their current level, we will eventually run out of funds.

Therefore, my ask is that if you’ve been enjoying your time here, please consider a donation - it will help keep Lemmy.zip online and all donations go to paying for the server and for the surrounding services like image hosting and email.
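The runway arithmetic above is just the fund balance divided by the monthly shortfall. A small sketch; the actual balance isn’t given in this post (the “less than 8 months” figure only implies it is under 8 × £30 = £240), so the numbers in the example are illustrative.

```python
import math


def runway_months(balance: float, monthly_cost: float, monthly_donations: float = 0.0) -> float:
    """Months until the fund is empty; math.inf if donations cover the costs."""
    shortfall = monthly_cost - monthly_donations
    if shortfall <= 0:
        return math.inf
    return balance / shortfall


if __name__ == "__main__":
    # Illustrative balance, not the real figure.
    print(runway_months(200, 30))      # no donations at all
    print(runway_months(200, 30, 28))  # donations just short of costs
```

Even a small shortfall gives a finite runway: donations just under £30 a month still run the fund down, only more slowly.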

Interaction reminder (help support the instance!)

If you’re new to Lemmy.zip - WELCOME! I hope you’re enjoying your time here :)

I wanted to take a moment to encourage everyone to actively engage with our platform. Your participation can take many forms: upvoting content you enjoy, sharing your thoughts through posts and comments, sparking meaningful discussions, or even creating new communities that resonate with your interests.

It’s natural to see fluctuations in user activity over time, and we’ve seen this over the wider lemmy-verse for some time now. However, if you’ve found a home here and love this space, now is the perfect opportunity to help us thrive.

If you want to support us in ways other than financially, actively interacting with the instance helps us out loads.

Server Performance

Graph time!

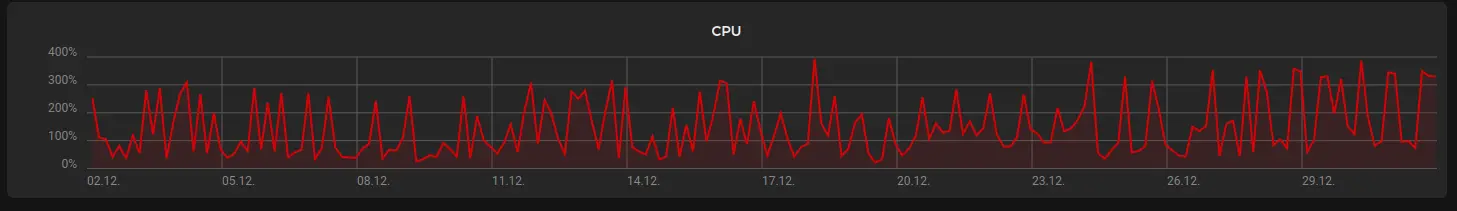

CPU usage over the last 30 days:

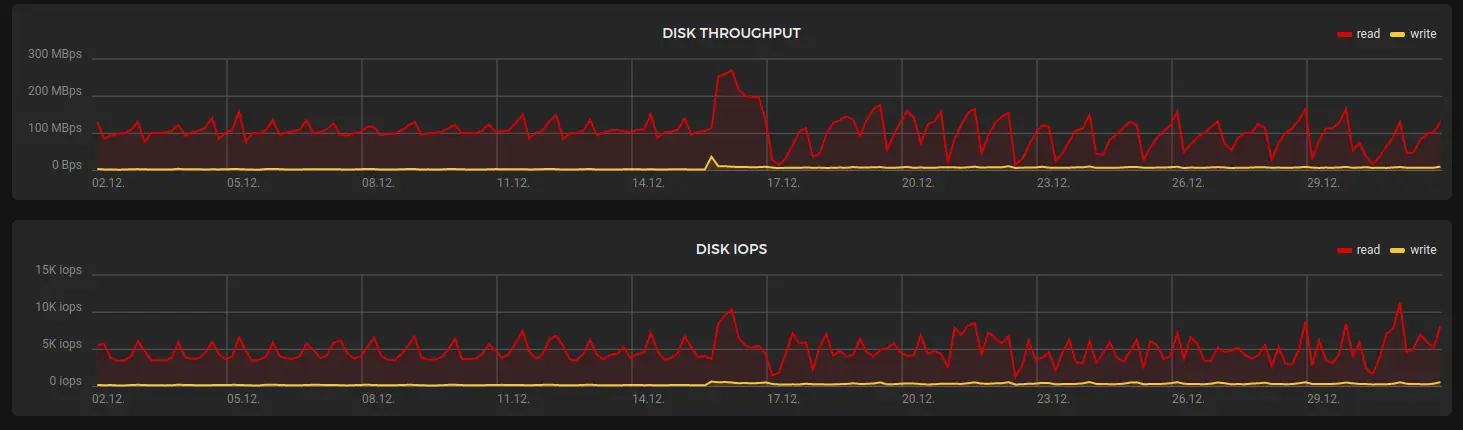

Disk usage over the last 30 days:

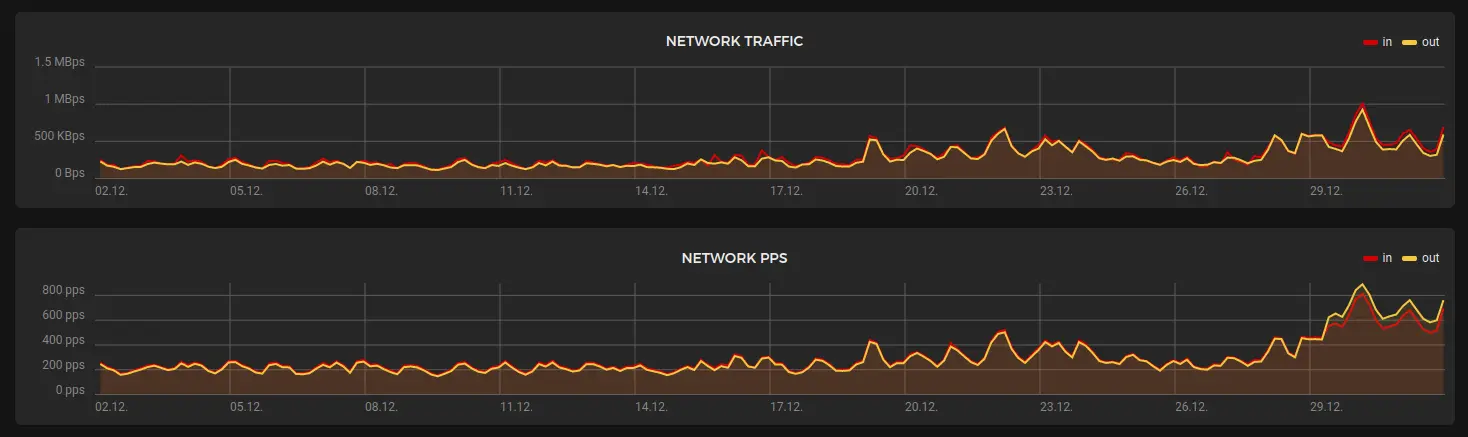

Network usage:

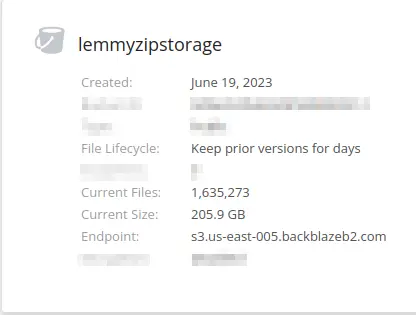

Image hosting stats (over 200 GB now!):

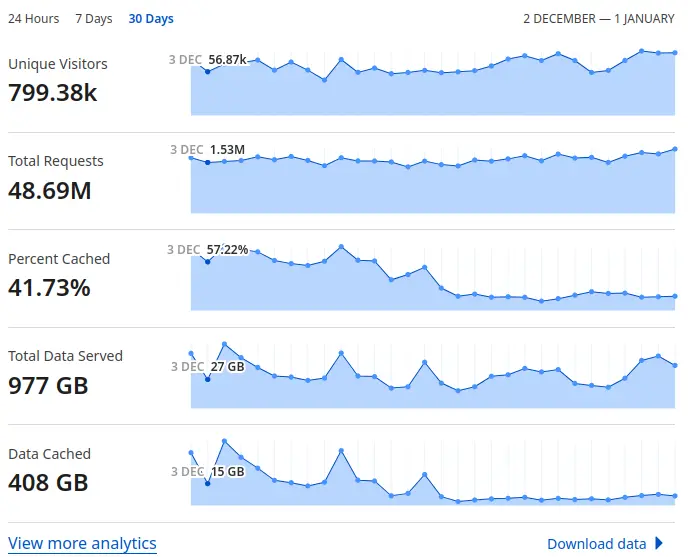

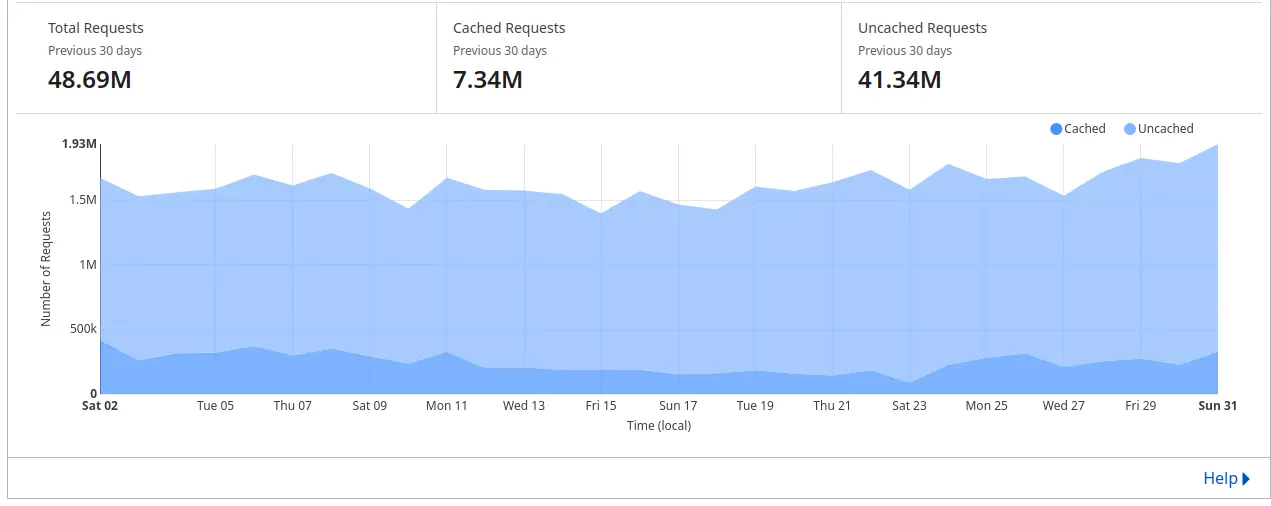

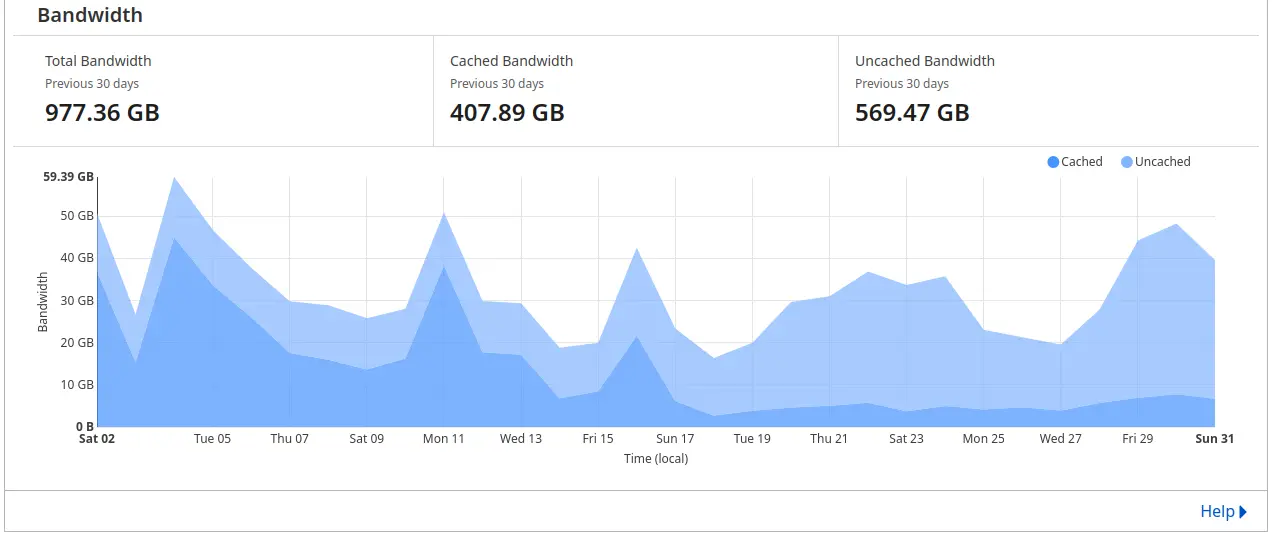

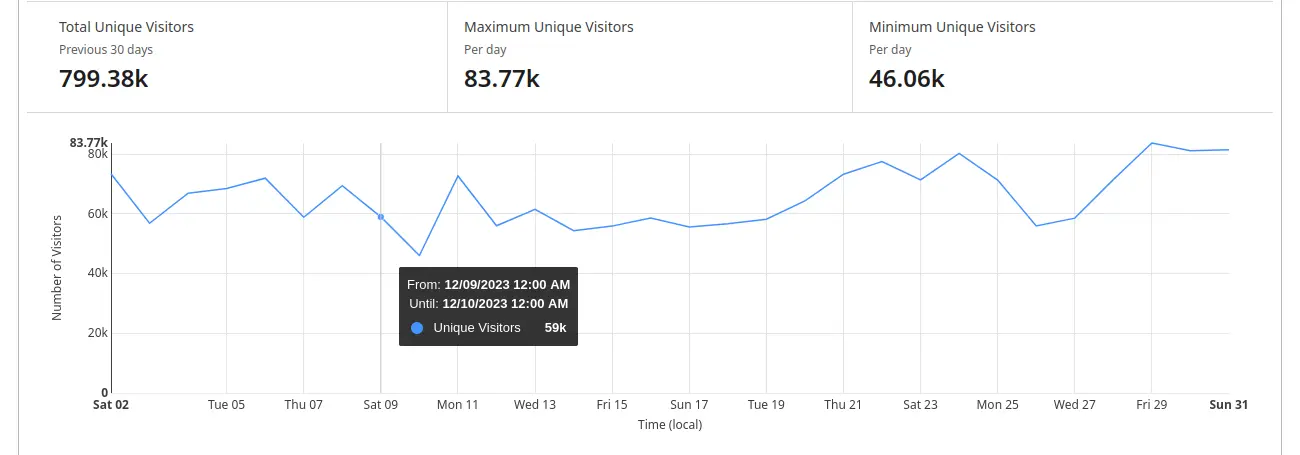

Cloudflare stats:

Deeper dive into those metrics:

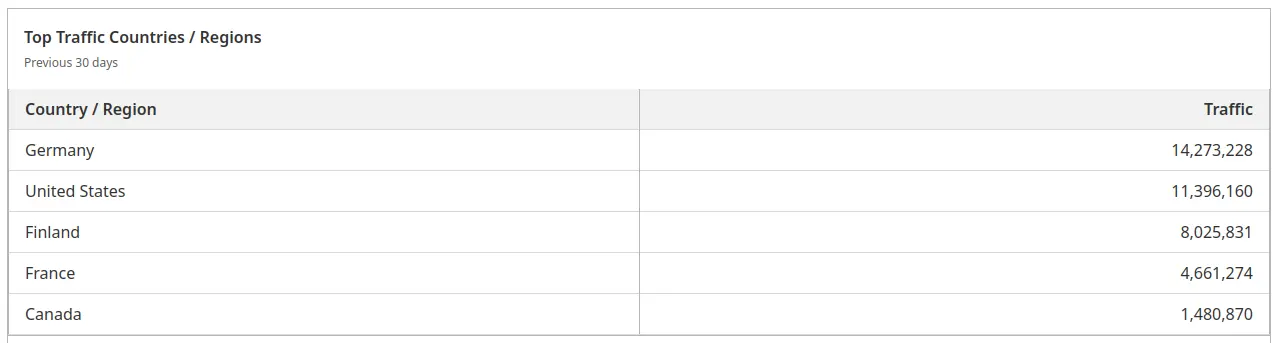

And finally traffic stats from around the world:

As always, if there are any other metrics you’d like to see let me know!

Otherwise, that’s it for this month. Thank you all for being here :)

Demigodrick

Awesome write up. Thanks for all you do.

Happy New Year!

I suppose there is a reason why some instances wait so long to update. It sounds like this update has been really bumpy. One question though, is there a reason Lemmy is now so much more resource intensive? It seems kind of odd to start taking way more resources. Maybe there is a benefit I’m missing?

As far as donating goes I would be happy to pitch in a bit to keep us afloat. Is there a financial goal you are targeting?

I think the general consensus is that it’s to do with the new persistent federation queue, i.e. if the server goes down, it won’t lose any posts waiting to be federated, which should improve federation across Lemmy.
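To illustrate the general idea of a persistent queue, here is a toy model. It is not Lemmy’s implementation (Lemmy runs on PostgreSQL), and every table and function name is hypothetical. Outgoing activities are written to disk, and each remote instance has a stored cursor recording the last activity successfully delivered to it, so after a crash or restart delivery resumes from the cursor instead of losing whatever was held in memory.

```python
import os
import sqlite3
import tempfile


def open_queue(path: str) -> sqlite3.Connection:
    """Open (or create) an on-disk queue of outgoing activities."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS activity ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, body TEXT NOT NULL)"
    )
    # One cursor per remote instance: the last activity id delivered to it.
    db.execute(
        "CREATE TABLE IF NOT EXISTS delivery_cursor ("
        "instance TEXT PRIMARY KEY, last_sent_id INTEGER NOT NULL)"
    )
    db.commit()
    return db


def enqueue(db: sqlite3.Connection, body: str) -> int:
    """Persist an outgoing activity before trying to send it."""
    cur = db.execute("INSERT INTO activity (body) VALUES (?)", (body,))
    db.commit()
    return cur.lastrowid


def pending_for(db: sqlite3.Connection, instance: str) -> list[tuple[int, str]]:
    """Activities not yet delivered to `instance`, oldest first."""
    row = db.execute(
        "SELECT last_sent_id FROM delivery_cursor WHERE instance = ?", (instance,)
    ).fetchone()
    last = row[0] if row else 0
    return db.execute(
        "SELECT id, body FROM activity WHERE id > ? ORDER BY id", (last,)
    ).fetchall()


def mark_sent(db: sqlite3.Connection, instance: str, activity_id: int) -> None:
    """Advance the instance's cursor after a successful delivery."""
    db.execute(
        "INSERT OR REPLACE INTO delivery_cursor (instance, last_sent_id) VALUES (?, ?)",
        (instance, activity_id),
    )
    db.commit()


def demo_restart() -> list[tuple[int, str]]:
    """Queue two posts, deliver one, 'crash', reopen and check what is pending."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "queue.db")
        db = open_queue(path)
        first = enqueue(db, "post-1")
        enqueue(db, "post-2")
        mark_sent(db, "remote.example", first)
        db.close()             # simulate the server going down...
        db = open_queue(path)  # ...and coming back up
        pending = pending_for(db, "remote.example")
        db.close()
        return pending


if __name__ == "__main__":
    print(demo_restart())  # [(2, 'post-2')]
```

In this model, an instance whose cursor falls far behind the newest activity is what a monitoring tool would show as lagging.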

In terms of financials, I’ve tried to keep it at 12 months in the bank at any one time, in case of fluctuations (or situations such as this one!), so the overall goal would be around £375.

That goal works out to £5 each from 75 people. To bring in £40 a month, it would take just 8 people giving £5 a month.
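The figures in this exchange come from two small calculations: a 12-month reserve is twelve times the monthly cost, and the number of donors needed is the target divided by the amount per person, rounded up. A sketch (the function names are only illustrative):

```python
import math


def reserve_target(monthly_cost: float, months: int = 12) -> float:
    """Money needed to cover `months` of running costs."""
    return monthly_cost * months


def donors_needed(target: float, per_donor: float) -> int:
    """How many people giving `per_donor` each it takes to reach `target` (rounded up)."""
    return math.ceil(target / per_donor)


if __name__ == "__main__":
    print(reserve_target(30))      # 360
    print(donors_needed(375, 5))   # 75
    print(donors_needed(40, 5))    # 8
```

At £30 a month, 12 months comes to £360, so a goal of around £375 leaves a small margin. Rounding up matters when the target doesn’t divide evenly: £30 a month at £4 each needs 8 people, not 7.5.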

My point is that if we want to have more financial stability we should have fundraising goals and events. You should keep track of how much money is raised in a time frame and have a set goal that we should try to meet.

I also don’t see an easy way to donate on the front page, which is where I would be most likely to give. It might be worth putting a donate button somewhere on the site.

Can you add the donation link in the comment?

Whoops, forgot to add to the post!

Very nice.

Thanks for continuing to host this wonderful instance!

Why does the database get killed when it maxes out RAM? A database engine will always aim to max out RAM.

There is a difference between data kept in RAM for faster access, which can be freed at any time, and data that can’t be freed because the system needs to hold it for something. This behaviour is certainly not intended, but it seems the database system can’t free that memory easily. I just want to make clear that it’s not as simple as saying a database engine aims to max out RAM, so it should be able to handle it.
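On Linux, the distinction drawn in that reply, between cache the kernel can drop and memory that processes actually hold, shows up in `/proc/meminfo`: `MemFree` is memory nothing is using, while `MemAvailable` (kernel 3.14 and later) is the kernel’s estimate of what could be handed out without swapping, including reclaimable cache. A sketch that parses that format; treating `MemAvailable - MemFree` as “reclaimable” is an approximation, not an exact accounting.

```python
import os


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value}; values are usually in kB."""
    fields: dict[str, int] = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            fields[name.strip()] = int(parts[0])
    return fields


def memory_summary(fields: dict[str, int]) -> dict[str, int]:
    """Split total RAM into roughly in-use, reclaimable and free (all in kB)."""
    total = fields["MemTotal"]
    free = fields["MemFree"]
    available = fields["MemAvailable"]
    return {
        "in_use_kb": total - available,      # cannot simply be dropped
        "reclaimable_kb": available - free,  # approx. cache the kernel can free
        "free_kb": free,
    }


if __name__ == "__main__":
    # Linux only: read the live figures if the file exists.
    if os.path.exists("/proc/meminfo"):
        with open("/proc/meminfo") as f:
            print(memory_summary(parse_meminfo(f.read())))
```

A database filling RAM with cache keeps `in_use_kb` steady while `reclaimable_kb` grows; memory that keeps climbing in `in_use_kb` is the kind that eventually gets a process killed.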